Talk 1: Shaping the Future of Music Innovation: From Pop Music Transformer to Efficient and Controllable AI Models

Talk 2: Human-AI Interaction in Music: Ensemble Performance, LLM-Powered Music Annotation and Retrieval, and Beyond

Talk 3: Recent Advances in Symbolic Music Understanding and Multi-modal Music Performance Generation

Talk 4: Multimodal Spatio-Temporal Sensing

Talk 5: Research at the Intelligence and Cultural Evolution Lab, Kyushu University

1. Dr. Yi-Hsuan Yang, Professor, National Taiwan University

2. Dr. Juhan Nam, Professor, Korea Advanced Institute of Science and Technology (KAIST)

3. Dr. Li Su, Associate Research Fellow, Academia Sinica’s Institute of Information Science

4. Dr. Kazuyoshi Yoshii, Professor, Graduate School of Engineering, Kyoto University

5. Dr. Eita Nakamura, Associate Professor, Kyushu University

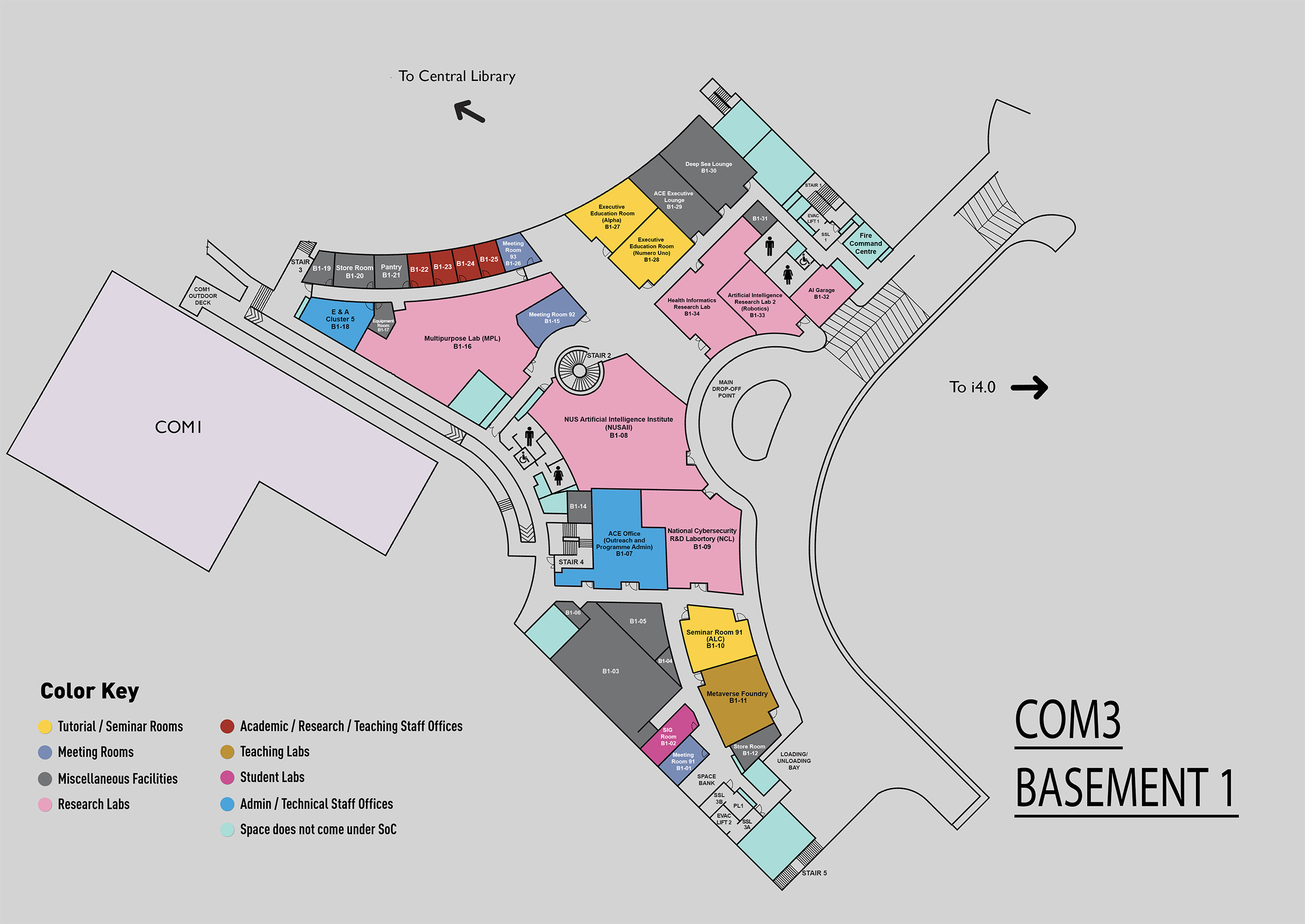

COM3 Basement

MR92, COM3 B1-15

Talk 1: By Dr. Yi-Hsuan Yang - 3:30 PM to 4:30 PM

Abstract:

This talk traces the journey of AI-driven music generation, spotlighting key innovations that have transformed music creation. We’ll revisit foundational works such as Pop Music Transformer, which pioneered expressive pop piano generation; Theme Transformer, which enables theme-driven composition; and MuseMorphose, which advanced piano music style transfer. The discussion will also highlight recent advances in text-to-music generation, such as SiMBA-LDM, a model that drastically reduces training costs while delivering high-quality music, and MuseControlLite, which enhances control over melody and rhythm through lightweight fine-tuning. Additionally, we’ll explore audio effect modeling for guitar tones, showcasing practical applications. Geared toward tech enthusiasts, this talk will illustrate how these models make music creation more accessible, precise, and creative.

Bio:

Dr. Yi-Hsuan Yang received the Ph.D. degree in Communication Engineering from National Taiwan University. Since February 2023, he has been a Full Professor in the College of Electrical Engineering and Computer Science, National Taiwan University. Before that, he was the Chief Music Scientist at Taiwan AI Labs, an industrial research lab, from 2019 to 2023, and an Associate/Assistant Research Fellow at the Research Center for IT Innovation, Academia Sinica, from 2011 to 2023. His research interests include automatic music generation, music information retrieval, artificial intelligence, and machine learning. His team developed music generation models such as MidiNet, MuseGAN, Pop Music Transformer, and KaraSinger. He served as an Associate Editor for the IEEE Transactions on Multimedia and the IEEE Transactions on Affective Computing, both from 2016 to 2019. Dr. Yang is a Senior Member of the IEEE.

Talk 2: By Dr. Juhan Nam - 4:30 PM to 5:30 PM

Abstract:

This talk presents recent research from the Music and Audio Computing Lab at KAIST, focusing on topics that emphasize human-AI interaction in music. The first topic is human-AI ensemble performance, which requires multimodal, real-time, and robust processing for effective musical communication. This umbrella theme encompasses a wide range of music information retrieval (MIR) tasks, such as piano transcription and score following, as well as visual processing tasks like musical cue detection and performance visualization. We will showcase the outcomes through various demos and stage performances. The second topic is LLM-powered music annotation and retrieval, which enables the use of rich textual descriptions and multi-turn conversations for fine-grained music research. This part explores how music-text understanding has evolved from traditional music classification models to multimodal musical LLMs. Finally, if time permits, I will briefly introduce other ongoing research topics, including Foley sound synthesis, audio enhancement, traditional Korean music analysis, and piano music arrangement.

Bio:

Juhan Nam is a Professor at the Korea Advanced Institute of Science and Technology (KAIST), South Korea. He leads the Music and Audio Computing Lab at the Graduate School of Culture Technology, where his research focuses on music information retrieval, audio signal processing, and AI-based music applications. He also serves as the Director of the Sumi Jo Performing Arts Research Center, fostering collaborations with artists to develop innovative technologies for music performance and education. He received his Ph.D. in Music from Stanford University, where he studied at the Center for Computer Research in Music and Acoustics (CCRMA). Before his academic career, he worked at Young Chang (Kurzweil), developing synthesizers and digital pianos.

Talk 3: By Dr. Li Su - 5:30 PM to 5:40 PM

Abstract:

Among the various forms of multimedia, music stands out for the profound interplay between its symbolic-level syntax and the performance-level semantics that syntax gives rise to. Traditionally, these intricate processes have been mastered only by skilled musicians, which sets a high bar for modern AI technologies that aim to model or replicate them. In this talk, we will introduce our research team's efforts to overcome this barrier in music AI, focusing on 1) symbolic music understanding and 2) multi-modal music performance generation. Specifically, we will present our work on motif discovery, music foundation models, and virtual musician performance generation.

Bio:

Li Su received dual B.S. degrees in Electronic Engineering and Mathematics and a Ph.D. in Communication Engineering from National Taiwan University. He joined Academia Sinica’s Institute of Information Science in 2017 and is now an Associate Research Fellow. He was previously a postdoctoral fellow at Academia Sinica’s Research Center for Information Technology Innovation and a visiting scholar at the University of Toronto (2016) and the University of Edinburgh (2019). He also holds a joint appointment as an Assistant Professor at National Tsing Hua University, serves as core faculty in the Data Science Degree Program at National Taiwan University, and lectures at the Taiwan AI Academy. His research focuses on applying artificial intelligence to multimedia, particularly cross-modal analysis and music generation. He received the ISMIR 2019 Best Paper Award, was an ACM Multimedia 2020 Best Paper finalist, and won the 2021 Outstanding Young Scholar Award. He currently serves as a Program Co-chair of ISMIR 2025.

Talk 4: By Dr. Kazuyoshi Yoshii - 5:40 PM to 5:50 PM

Abstract:

Our laboratory focuses on acoustic signal processing (e.g., source separation and localization), image signal processing (e.g., 4D shape estimation), biomedical signal processing (e.g., brain activity measurement and estimation), and integrated statistical multimodal signal processing. We will also introduce human augmentation technologies built on these techniques.

Bio:

Kazuyoshi Yoshii is a Professor at the Department of Electrical Engineering, Graduate School of Engineering, Kyoto University, Japan and is concurrently the Director of the Sound Scene Understanding Team, AIP, RIKEN, Japan.

Talk 5: By Dr. Eita Nakamura - 5:50 PM to 6:00 PM

Abstract:

In this short talk, I will introduce ongoing research at the Intelligence and Cultural Evolution Lab at Kyushu University, Japan. Our lab has two main research themes: music informatics and cultural evolution. In music informatics, we currently focus on music transcription techniques that go beyond the standard scope (e.g., unusual recording settings and rare instruments). In cultural evolution, we develop techniques and tools for the quantitative analysis of changes in creative style in large-scale artwork data.

Bio:

Dr. Eita Nakamura is an Associate Professor in the Intelligence and Cultural Evolution Laboratory at the Graduate School of Information Science and Electrical Engineering, Kyushu University. He received his Ph.D. degree in physics from the University of Tokyo in 2012. His fields of expertise include intelligent informatics and interdisciplinary physics, with particular interests in mathematical modeling of intelligent behaviors and evolutionary phenomena regarding arts and creative cultures.