Towards Semantically Aligned AI Models: From Symmetries to Provable Guarantees

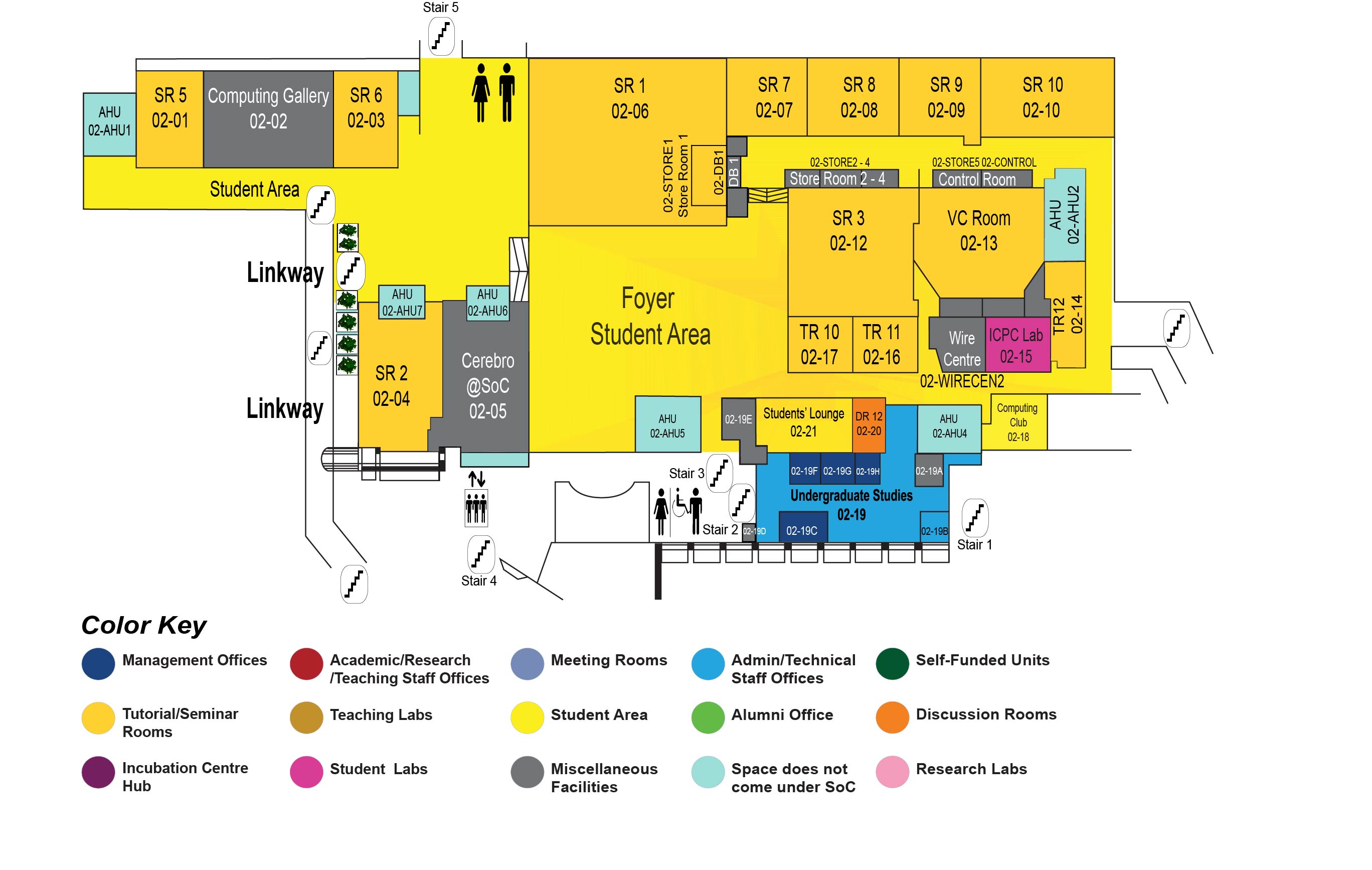

COM1 Level 2

Video Conference Room, COM1-02-13

Abstract:

Semantic alignment, ensuring that models behave consistently with the intended meaning of their inputs, is essential for trustworthy AI. This talk presents two complementary approaches for aligning transformer-based models with formal semantics. First, we introduce SYMC, a symmetry-aware code encoder that builds invariance to semantics-preserving code transformations directly into its architecture. By enforcing provable invariance to reordering and other structural symmetries through group-theoretic attention, SYMC improves generalization on code analysis tasks, even without pretraining. Second, we present α-β-CROWN, a scalable framework for certifying bounds on a deep network's outputs under input perturbations. Designed for modern architectures, including transformers, α-β-CROWN combines tight linear relaxations with efficient branching to provide formal guarantees on model behavior. Together, these two lines of work chart a path toward semantically aligned AI: models that not only learn the right structure but provably uphold it at inference time.
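The certification idea behind α-β-CROWN can be illustrated on a toy network. The sketch below, in plain Python and under simplifying assumptions, bounds every output of a one-hidden-layer ReLU network over an L∞ ball of radius `eps` using CROWN-style linear relaxations. Each unstable ReLU is sandwiched between two lines, the line used for each neuron is chosen by the sign of the next layer's weight, and the resulting linear function of the input is minimized or maximized in closed form. This is not the α-β-CROWN tool or its API; the names `crown_bounds`, `relu_relaxation`, and `forward` are hypothetical. It uses CROWN's fixed heuristic for the lower ReLU slope, whereas α-CROWN optimizes those slopes and β-CROWN adds constraints from branching on unstable neurons. Transformers also need relaxations for nonlinearities such as softmax, which this sketch omits.

```python
# A minimal CROWN-style bound propagation sketch for a one-hidden-layer ReLU
# network f(x) = W2 @ relu(W1 @ x + b1) + b2 over the L-infinity ball
# |x - x0|_inf <= eps. Illustrative only: this is NOT the alpha-beta-CROWN
# codebase or its API, and all function names here are hypothetical.
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def forward(W1: Matrix, b1: Vector, W2: Matrix, b2: Vector, x: Vector) -> Vector:
    """Concrete evaluation of the network at a single input point."""
    z = [zi + bi for zi, bi in zip(matvec(W1, x), b1)]
    hidden = [max(0.0, zi) for zi in z]
    return [yi + bi for yi, bi in zip(matvec(W2, hidden), b2)]


def relu_relaxation(l: float, u: float) -> Tuple[float, float, float]:
    """Return (s, t, alpha) such that alpha*z <= relu(z) <= s*z + t for z in [l, u]."""
    if u <= 0.0:                       # always inactive: relu(z) == 0
        return 0.0, 0.0, 0.0
    if l >= 0.0:                       # always active: relu(z) == z
        return 1.0, 0.0, 1.0
    s = u / (u - l)                    # upper line: chord through (l, 0) and (u, u)
    alpha = 1.0 if u >= -l else 0.0    # CROWN's heuristic lower slope in [0, 1]
    return s, -s * l, alpha


def crown_bounds(W1: Matrix, b1: Vector, W2: Matrix, b2: Vector,
                 x0: Vector, eps: float) -> Tuple[Vector, Vector]:
    """Sound lower/upper bounds on every output of f over the eps-ball around x0."""
    # Step 1: exact interval bounds on the hidden pre-activations z = W1 x + b1.
    center = [zi + bi for zi, bi in zip(matvec(W1, x0), b1)]
    radius = [eps * sum(abs(w) for w in row) for row in W1]
    relax = [relu_relaxation(c - r, c + r) for c, r in zip(center, radius)]

    out_lo: Vector = []
    out_hi: Vector = []
    for k, row in enumerate(W2):
        for sign in (-1.0, 1.0):       # -1.0: lower bound, +1.0: upper bound
            # Step 2: replace each relu by one linear bound, chosen by weight sign.
            coeffs: Vector = []        # coefficients on the pre-activations z_j
            const = b2[k]
            for w, (s, t, alpha) in zip(row, relax):
                # Lower bound: w >= 0 takes the ReLU lower line, w < 0 the upper line.
                # Upper bound: the choice flips.
                if (w >= 0.0) == (sign > 0.0):
                    coeffs.append(w * s)
                    const += w * t
                else:
                    coeffs.append(w * alpha)
            # Step 3: substitute z = W1 x + b1 to get a linear function a . x + const.
            a = [sum(c * W1[j][i] for j, c in enumerate(coeffs)) for i in range(len(x0))]
            const += sum(c * bj for c, bj in zip(coeffs, b1))
            # Step 4: optimize that linear function in closed form over the ball.
            value = sum(ai * xi for ai, xi in zip(a, x0)) + const
            value += sign * eps * sum(abs(ai) for ai in a)
            (out_hi if sign > 0.0 else out_lo).append(value)
    return out_lo, out_hi


# Usage: f(x) = relu(x) + relu(-x) = |x| on x in [-1, 1].
lo, hi = crown_bounds([[1.0], [-1.0]], [0.0, 0.0], [[1.0, 1.0]], [0.0], [0.0], 1.0)
print(lo, hi)  # [0.0] [1.0]
```

On the |x| example, naive interval arithmetic would bound the output by [0, 2], because it lets each hidden unit range over [0, 1] independently; the linear relaxation keeps the dependence on x and recovers the exact range [0, 1].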

Bio:

Suman Jana is an associate professor in the Department of Computer Science and the Data Science Institute at Columbia University. His primary research interest lies at the intersection of computer security and machine learning. His research has received seven best paper awards, a CACM Research Highlight, multiple Google faculty fellowships, a JPMorgan Chase Faculty Research Award, an NSF CAREER award, and an ARO Young Investigator award.