Speech and Music AI: The Current Research Frontier

Xueyao Zhang, The Chinese University of Hong Kong (Shenzhen), China

Junan Zhang, The Chinese University of Hong Kong (Shenzhen), China

Yuancheng Wang, The Chinese University of Hong Kong (Shenzhen), China

Yinghao Ma, Queen Mary University of London, UK

Zachary Novack, UC San Diego, USA

Scott H. Hawley, Belmont University, USA

Rafael Ramirez, Universitat Pompeu Fabra, Spain

Gus Xia, MBZUAI, United Arab Emirates

Junyan Jiang, MBZUAI, United Arab Emirates

Yuxuan Wu, MBZUAI, United Arab Emirates

Xinyue Li, MBZUAI, United Arab Emirates

Xubo Liu, Meta, UK

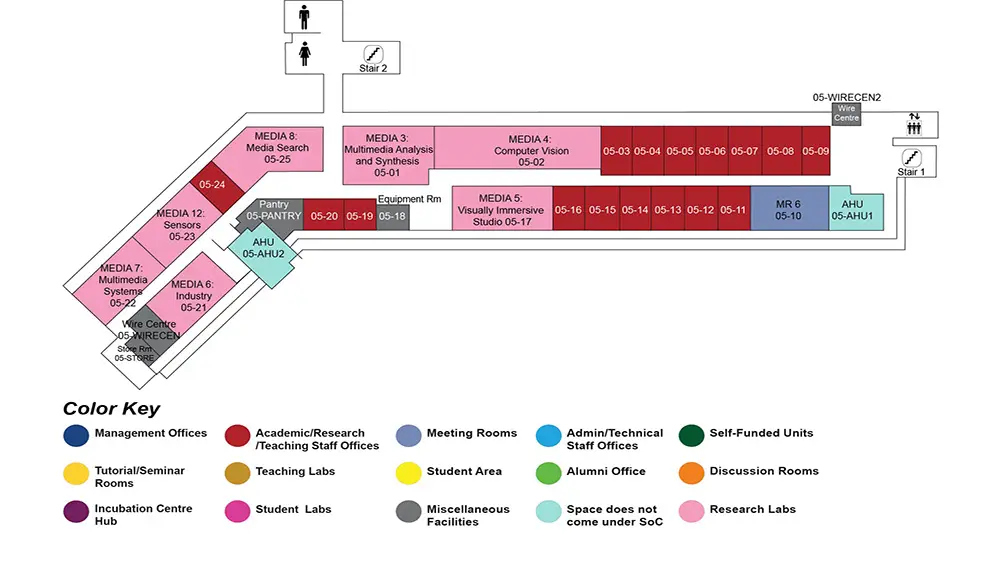

AS6 Level 5

MR6, AS6-05-10

Talk 1: Recent Advances of Speech Generation and DeepFake Detection

Abstract: This talk will cover the recent research activities by the Amphion team led by Prof. Zhizheng Wu. The talk will focus on spoken language understanding, speech generation, unified and foundation models for speech synthesis, voice conversion, and speech enhancement. The talk will be accompanied by three highlight talks of Prof. Wu’s PhD students who presented their work at ICLR2025 in Singapore.

Bio: Prof. Wu Zhizheng is currently an Associate Professor at The Chinese University of Hong Kong, Shenzhen. He holds the position of Deputy Director at the Shenzhen Key Laboratory of Cross-Modal Cognitive Computing. Prof. Wu has been consistently listed in Stanford University’s “World’s Top 2% Scientists” and has received multiple Best Paper Awards. He earned his Ph.D. from Nanyang Technological University and has held research and leadership roles at internationally renowned institutions, including Meta (formerly Facebook), Apple, the University of Edinburgh, and Microsoft Research Asia. Prof. Wu has initiated several influential open-source projects, such as Merlin, Amphion, and Emilia, which have been adopted by over 700 organizations worldwide, including OpenAI. Notably, Amphion has topped GitHub’s trending list multiple times, while Emilia has become the most popular audio dataset (Most Liked) on HuggingFace. He also initiated and organized the first ASVspoof Challenge and the first Voice Conversion Challenge and served as the organizer of the Blizzard Challenge 2019, a prestigious international speech synthesis competition. Currently, Prof. Wu serves on the editorial boards of IEEE/ACM Transactions on Audio, Speech and Language Processing and IEEE Signal Processing Letters, and is the General Chair of the IEEE Spoken Language Technology Workshop 2024.

Talk 2: Extending LLMs for Acoustic Music Understanding — Models, Benchmarks, and Multimodal Instruction Tuning

Abstract: Large Language Models (LLMs) have transformed learning and generation in text and vision, yet acoustic music—an inherently multimodal and expressive domain—remains underexplored. In this talk, I present recent progress in leveraging large-scale pre-trained models and instruction tuning for music understanding. I introduce MERT, a self-supervised acoustic music model with over 50k downloads, and MRABLE, a universal benchmark for evaluating music audio representations.I also present MusiLingo, a system that aligns pre-trained models across modalities to support music captioning and question answering. To address the gap in evaluating instruction-following capabilities, I propose CMI-Bench, the first benchmark designed to test models' ability to understand and follow complex music-related instructions across audio, text, and symbolic domains. I conclude by discussing open challenges in responsible deployment of generative music AI.

Bio: MA Yinghao is a Ph.D. student in the Artificial Intelligence and Music (AIM) program at the Centre for Digital Music (C4DM), Queen Mary University of London, under the supervision of Dr. Emmanouil Benetos and Dr. Chris Donahue. His research explores the intersection of music understanding and large-scale pre-trained models, focusing on multimodal learning, instruction tuning, and model evaluation. He co-developed MERT, a self-supervised model for acoustic music understanding, and MRABLE, a benchmark for evaluating universal music audio representations. More recently, he introduced CMI-Bench for music instruction-following and MusiLingo, a multimodal alignment system for music-language tasks. Beyond research, he co-founded the Multimodal Art Projection (MAP) community and was a student conductor at the Chinese Philharmonic Orchestra, Peking University. His long-term goal is to establish foundational models for AI music understanding while addressing fairness, safety, and creative integrity in generative systems.

Talk 3: Flocoder: a latent flow-matching model for symbolic music generation and analysis

Abstract: We present work in progress on an open source framework for latent flow matching to provide generative MIDI outputs for inpainting tasks such as continuation and/or melody or accompaniment generation. This is a reframing of a prior diffusion-based system ("Pictures of MIDI", arXiv:2407.01499) for more efficient inference. A further goal is to constrain the quantized latent representations to correspond to repeated musical motifs, allowing the embeddings to be used for motif analysis. This is all presented in a new open-source framework called "flocoder" to which interested students are invited to contribute!

Bio: Scott H. Hawley is Professor of Physics and Senior Data Fellow at Belmont University in Nashville Tennessee. He left his first career doing High Performance Computing for astrophysics research and has worked on machine learning applications for music production workflows for over a decade. He was formerly a Technical Fellow at Stability AI and co-developer of the Stable Audio text-to-audio system, the only commercial diffusion model trained on licensed music data, delivering 48kHz in stereo. He is a noted science communicator, having co-authored "the most popular Acoustics Today Article of 2020," and recently was awarded Best Blog Post of ICLR 2025 for his tutorial, "Flow With What You Know." Scott also serves as Head of Research for Hyperstate Music AI, a startup developing a songwriting and music production "coaching" system with co-founders Jonathan Rowden (former co-founder of GPU Audio), multi-platinum producer/songwriter Louis Bell (Post Malone, Taylor Swift, Justin Bieber), and expert systems expert Joe Miller, who helped create the day-trading expert systems for Bridgewater Associates, the largest hedge fund in the USA.

Talk 4: AI-Enhanced Music Learning

Abstract: Learning to play a musical instrument is a difficult task that requires sophisticated skills. This talk will describe approaches to designing and implementing new interaction paradigms for music learning and training based on state-of-the-art AI techniques applied to multimodal (audio, video, and motion) data.

Bio: Dr. Rafael Ramirez (Prof, PhD) is a Tenured Associate Professor and Leader of the Music and Machine Learning Lab at the Engineering Department, Universitat Pompeu Fabra, Barcelona. He obtained his BSc in Mathematics from the National Autonomous University of Mexico, and his MSc and PhD in Artificial Intelligence and PhD in Computer Science from the University of Bristol, UK. He studied Classical Violin and Guitar at the National School of Music in Mexico, and since then he has played with different music groups in Europe, America and Asia. His research interests include music technology, artificial intelligence, and their application to learning, health and well-being. He has published more than 120 research articles in peer-reviewed international Journals and Conferences, and directed international multicenter research projects on music and AI.

Talk 5: Presto! Distilling Steps and Layers for Accelerating Music Generation

Abstract: Despite advances in diffusion-based text-to-music (TTM) methods, efficient, high-quality generation remains a challenge. We introduce Presto!, an approach to inference acceleration for score-based diffusion transformers via reducing both sampling steps and cost per step. To reduce steps, we develop a new score-based distribution matching distillation (DMD) method for the EDM-family of diffusion models, the first GAN-based distillation method for TTM. To reduce the cost per step, we develop a simple, but powerful improvement to a recent layer distillation method that improves learning via preserving hidden state variance. Finally, we combine our improved step and layer distillation methods together for a dual-faceted approach. We evaluate our step and layer distillation methods independently and show each yield best-in-class performance. Furthermore, we find our combined distillation method can generate high-quality outputs with improved diversity accelerating our base model by 10-18x (32 second output in 230ms, 15x faster than the comparable SOTA model) -- the fastest high-quality TTM model to our knowledge.

Bio: Zachary Novack is a PhD in the Computer Science and Engineering department at the University of California San Diego, advised by Dr. Julian McAuley and Dr. Taylor Berg-Kirkpatrick. His research focuses on controllable and efficient music/audio generation, as well as audio reasoning in LLMs. His long-term goal is to design bespoke creative tools for musicians and everyday users alike with adaptive control and real-time interaction, collaborating with top industry labs such as Adobe Research, Stability AI, and Sony AI. Zachary's work has been recognized at numerous top-tier AI conferences, including DITTO (ICML 2024 Oral), Presto! (ICLR 2025 Spotlight), CoLLAP (ICASSP 2025 Oral), and hosted the ISMIR 2024 Tutorial on Connecting Music Audio and Natural Language. Outside of academia, Zachary is active within the southern California marching arts community, working as an educator for the 11-time world class finalist percussion ensemble POW Percussion.

Talk 6: Current Research at the Music X Lab

Abstract: In this talk, Gus will provide a brief overview of research at the Music X Lab, highlighting key projects at the intersection of music and artificial intelligence. He will also share reflections on the evolving landscape of Music AI in the age of large language models (LLMs), with a focus on the unique role and value of academic research in shaping its future. The talk will be accompanied by three highlight talks of Prof. Xia’s students who presented their work at ICLR2025 in Singapore.

Bio: Gus Xia is an Associate Professor of Machine Learning at MBZUAI, focusing on designing intelligent systems to enhance human musical creativity and expression. He holds a Ph.D. in Machine Learning from Carnegie Mellon University and has prior academic experience as a Global Network Assistant Professor in Computer Science at NYU Shanghai, with affiliations at NYU Tandon, the Center for Data Science, and the Music and Audio Research Laboratory.

Talk 7: Natural Language-Driven Audio Intelligence for Content Creation

Abstract:In the digital age, audio content creation has transcended traditional boundaries, becoming a dynamitic field that fuses technology, creativity, and user experience. This talk will discuss the recent advances in natural language-driven audio AI technologies that reshapes human interaction with audio content creation. In this talk, we first introduce the work on language-queried audio source separation (LASS), which aims to extract desired sounds from audio recordings using natural language queries. We’ll present AudioSep - a foundation model we proposed for separating speech, music and sound events using natural language queries. We will then discuss our first work on language-modelling & latent diffusion-based (AudioLDM) approaches for audio generation. Finally, we will introduce WavJourney, a Large Language Model (LLM) based AI agent for compositional audio creation. WavJourney is capable of crafting vivid audio storytelling with personalized voices, music, and sound effects simply from text instructions. We will further discuss the potential of our proposed compositional approach for audio generation, showing our experimental

findings and state-of-the art results we’ve achieved on text-to-audio generation tasks.

Short Bio:Xubo Liu is a research scientist at Meta GenAI LLaMA team, where he is working on speech and audio capabilities for llama multimodal models. He was the tech lead research scientist at Stability AI, where he leads the efforts in generative AI for audio and speech research and applications. He completed his PhD at the Centre for Vision, Speech, and Signal Processing (CVSSP) at the University of Surrey, UK. His PhD worked on multimodal learning for audio and language, focusing on the understanding, separation, and generation of audio signals in tandem with natural language. He has co-authored over 70 papers in top conferences such as ICLR, CVPR, ICML, AAAI, ACL, EMNLP, ICASSP, Interspeech, TASLP etc. He has organized the “Multimodal Learning for Audio and Language” special session at EUSIPCO 2023 and the “Generative AI for Media Generation” special session at MLSP 2024. He led the organization of the “Language-Queried Audio Source Separation” challenge on DCASE 2024. He received a research gift grant from Google Research for his work on sound separation with texts.