From Filtering to Fingerprints: Constructing Pretraining Datasets for LLMs and Measuring Biases in the Data

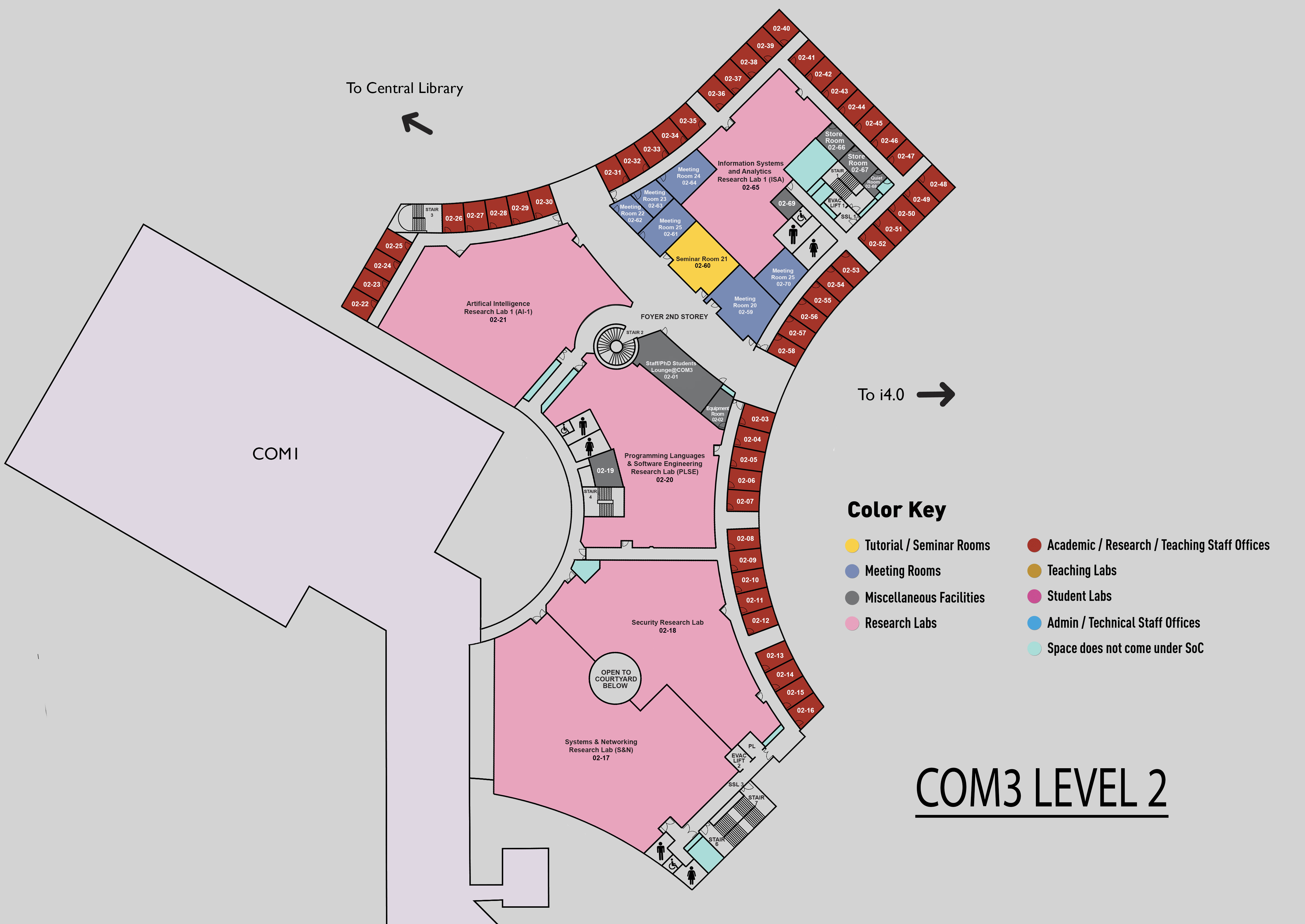

COM3 Level 2

SR21, COM3 02-60

Abstract:

In this talk, we first discuss how pre-trained datasets for LLMs are sourced from the web through heuristic and machine learning based filtering techniques. We then investigate biases in pretraining datasets for large language models (LLMs) through dataset classification experiments. Building on prior work demonstrating the existence of biases in popular computer vision datasets, we analyze popular open-source pretraining text datasets derived from CommonCrawl including C4, RefinedWeb, DolmaCC, RedPajama-V2, FineWeb, and others. Despite those datasets being obtained with similar filtering and deduplication steps, LLMs can classify surprisingly well which dataset a single text sequence belongs to, significantly better than a human can. This indicates that popular pretraining datasets have their own unique biases or fingerprints.

Biography:

Reinhard Heckel is a Professor of Machine Learning (Tenured Associate Professor) at the Department of Computer Engineering at the Technical University of Munich (TUM), and adjunct faculty at Rice University, where he was an assistant professor of Electrical and Computer Engineering from 2017-2019. Before that, he was a postdoctoral researcher in the Berkeley Artificial Intelligence Research Lab at UC Berkeley, and before that a researcher at IBM Research Zurich. He completed his PhD in 2014 at ETH Zurich and was a visiting PhD student at Stanfords University’s Statistics Department.Reinhard’s work is centered on machine learning, artificial intelligence, and information processing, with a focus on developing algorithms and foundations for deep learning, particularly for medical imaging, on establishing mathematical and empirical underpinnings for machine learning, and on the utilization of DNA as a digital information technology.