Algorithmic Improvements for Differentially Private Learning

COM1 Level 3

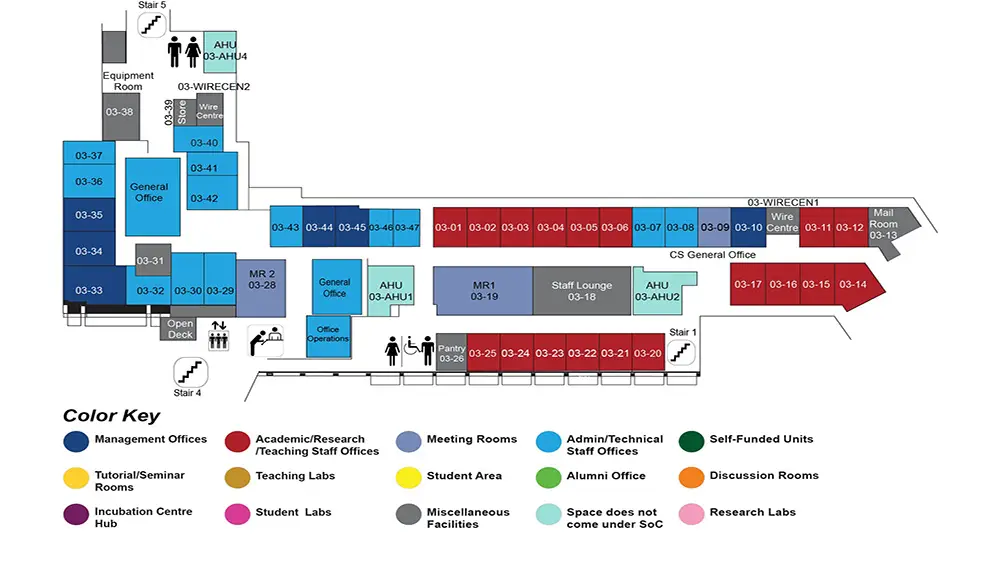

MR1, COM1-03-19

Abstract:

Neural networks (NNs) have become integral to numerous applications in healthcare, social media, and beyond. However, when trained on sensitive data without adequate privacy protections, they pose significant risks, as adversaries can exploit model outputs to infer private information. Differential privacy (DP) provides a rigorous framework for mitigating these risks, ensuring that learning algorithms produce models that preserve privacy while maintaining utility. Despite its promise, integrating DP into neural networks presents substantial challenges due to the diversity of data structures, such as Euclidean and graph data, and the complexity of corresponding architectures, including convolutional neural networks (CNNs) and graph convolutional networks (GCNs). These differences necessitate specialized algorithmic improvements to balance privacy and utility effectively. In this thesis proposal, we develop three novel differentially private learning algorithms to address these challenges: DPIS, GCON, and GNDP.

First, we propose DPIS, a novel algorithm for differentially private learning from Euclidean data based on importance sampling (IS). By incorporating importance sampling into the differentially private stochastic gradient descent (DP-SGD) framework, DPIS reduces sampling variance and mitigates the noise required for privacy, leading to improved model accuracy. Although applying IS in the non-private setting has been well-studied in the machine learning literature, integrating IS into the private SGD is non-trivial. DPIS addresses the challenge through novel mechanism designs, fine-grained privacy analysis, efficiency enhancements, and adaptive gradient clipping optimization.

Second, we propose GCON, a novel algorithm for learning from graph data with edge-level differential privacy. Unlike existing approaches that modify adjacency matrices or intermediate features, GCON preserves the integrity of graph convolution operations by leveraging objective perturbation. Our analysis derives tight, closed-form sensitivity bounds, quantifying how edge modifications influence model parameters while ensuring privacy guarantees.

Third, we plan to propose GNDP, a general algorithm for learning from graph data with node-level differential privacy. Existing node-private methods often suffer from overestimated sensitivities and restricted neighborhood propagation, leading to degraded utility. To address these limitations, GNDP would introduce a refined message-passing mechanism with tight sensitivity analysis, enhancing both privacy and model performance.