1) Communication-efficient distributed optimization algorithms - From 10-11am

2) UNSURE: Unknown Noise level Stein's Unbiased Risk Estimate - From 11am-12pm

2) Julián Tachella, CNRS Research Scientist at ENS de Lyon, France - From 11am-12pm

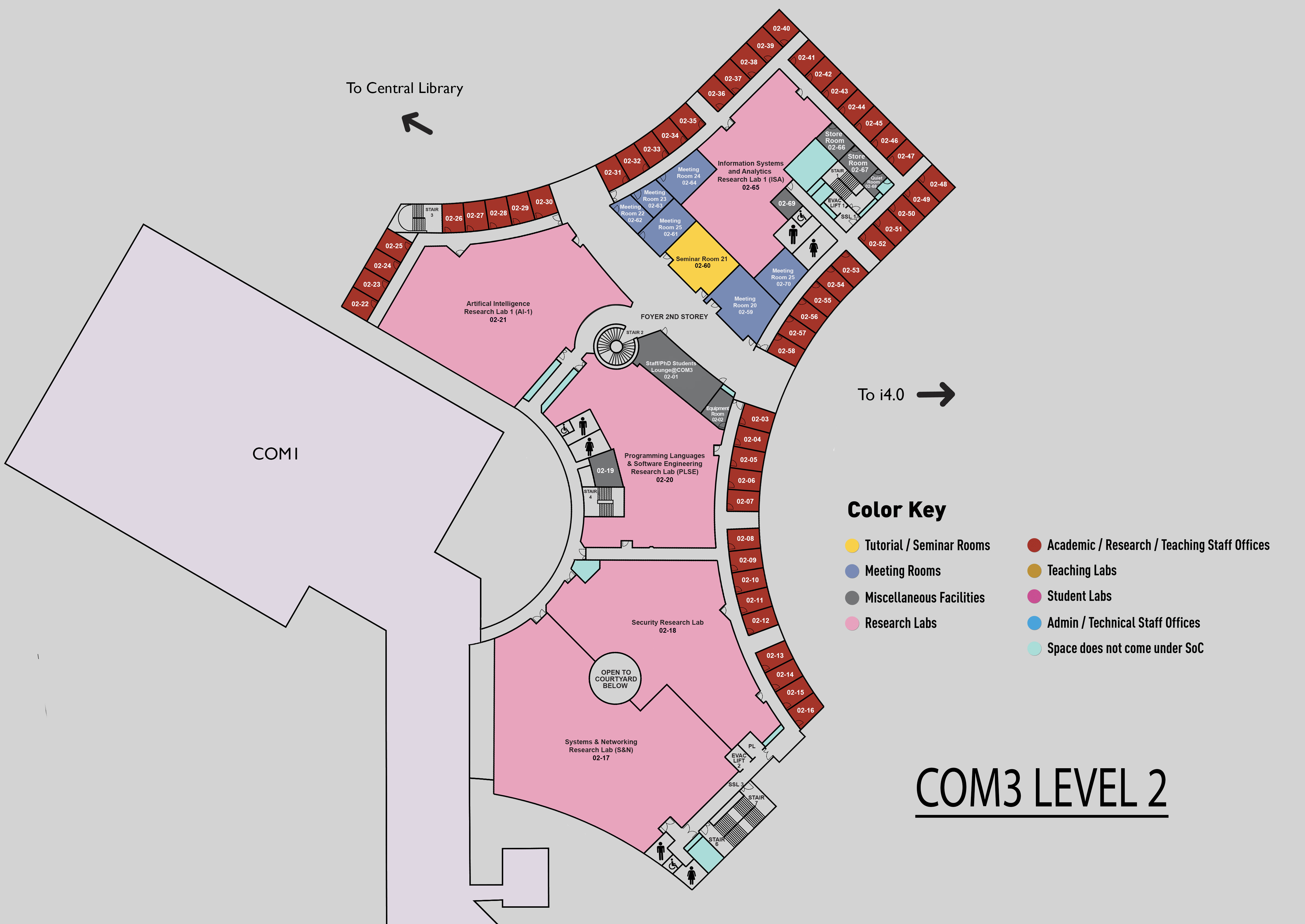

COM3 Level 2

MR21, COM3 02-61

1) Abstract: From 10am - 11am

In distributed optimization and machine learning, a large number of machines perform computations in parallel and communicate back and forth with a server. In particular, in federated learning, the distributed training process is run on personal devices such as mobile phones. In this context, communication, which can be slow, costly and unreliable, forms the main bottleneck. To reduce communication, two strategies are popular: 1) local training, which consists of communicating less frequently; 2) compression. In addition, a robust algorithm should allow for partial participation. I will present several randomized algorithms we developed recently, with proven convergence guarantees and accelerated complexity. Our most recent paper, “LoCoDL: Communication-Efficient Distributed Learning with Local Training and Compression”, will be presented at the International Conference on Learning Representations (ICLR) 2025 as a Spotlight.
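To make the compression strategy mentioned above concrete, here is a minimal sketch of one common unbiased compressor, random-k sparsification. It is an illustrative assumption and not necessarily the compressor used in LoCoDL; the function name `rand_k` and its parameters are hypothetical.

```python
import random

def rand_k(x, k, rng):
    """Keep k randomly chosen coordinates of x and zero out the rest.

    The kept coordinates are scaled by d/k so that the compressed
    vector equals x in expectation (the compressor is unbiased).
    """
    d = len(x)
    kept = set(rng.sample(range(d), k))  # indices that get transmitted
    scale = d / k
    return [xi * scale if i in kept else 0.0 for i, xi in enumerate(x)]

rng = random.Random(0)
x = [float(i) for i in range(1, 11)]   # a 10-dimensional vector to send
compressed = rand_k(x, k=3, rng=rng)   # only 3 of 10 entries are nonzero

# Averaging many independent compressions approaches x (unbiasedness).
n_trials = 20000
avg = [0.0] * len(x)
for _ in range(n_trials):
    c = rand_k(x, 3, rng)
    avg = [a + ci / n_trials for a, ci in zip(avg, c)]
```

Only the kept indices and values need to be communicated, which cuts the message size at the cost of extra variance in each update.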

Bio:

Laurent Condat is a Senior Research Scientist at King Abdullah University of Science and Technology (KAUST), Saudi Arabia. He received his PhD in 2006 from Grenoble Institute of Technology, Grenoble, France. After a two-year postdoc in Munich, Germany, he was recruited as a permanent researcher by the CNRS in 2008. He spent 4 years at GREYC, Caen, and 7 years at GIPSA-Lab, Grenoble, before joining KAUST. His research interests include optimization (deterministic and stochastic algorithms, convex relaxations) and applications to machine learning, signal and image processing. (https://lcondat.github.io/)

2) Abstract: From 11am - 12pm

Recently, many self-supervised learning methods for image reconstruction have been proposed that can learn from noisy data alone, bypassing the need for ground-truth references. Most existing methods cluster around two classes: i) Stein's Unbiased Risk Estimate (SURE) and similar approaches that assume full knowledge of the noise distribution, and ii) Noise2Self and similar cross-validation methods that require only very mild knowledge about the noise distribution. The first class of methods tends to be impractical, as the noise level is often unknown in real-world applications, and the second class is often suboptimal compared to supervised learning. In this talk, I will present a theoretical framework that characterizes this expressivity-robustness trade-off and propose a new approach that is based on SURE but, unlike standard SURE, does not require knowledge of the noise level. I will also show that the proposed estimator outperforms other existing self-supervised methods on various imaging inverse problems.
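For context, the classical SURE for Gaussian denoising can be written as below. This is the standard textbook form with a known noise level, not the estimator proposed in the talk. The notation is assumed here: $y = x + \varepsilon$ is the noisy observation of the clean signal $x \in \mathbb{R}^n$, $\varepsilon \sim \mathcal{N}(0, \sigma^2 I_n)$, and $f$ is a weakly differentiable denoiser.

$$
\mathrm{SURE}(f, y) = \frac{1}{n}\,\|f(y) - y\|_2^2 \;-\; \sigma^2 \;+\; \frac{2\sigma^2}{n}\,\nabla_y \cdot f(y),
\qquad
\mathbb{E}_y\big[\mathrm{SURE}(f, y)\big] = \frac{1}{n}\,\mathbb{E}_y\,\|f(y) - x\|_2^2 .
$$

The noise level $\sigma$ appears explicitly in two terms, which is why this form cannot be evaluated when $\sigma$ is unknown.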

Bio:

Julián Tachella is a research scientist at the French National Centre for Scientific Research (CNRS), working at the Sisyph laboratory, École Normale Supérieure de Lyon (Lyon, France). His research lies at the intersection of signal processing and machine learning. He is particularly interested in the theory of imaging inverse problems and applications in computational imaging. He is also a lead developer of DeepInverse, a library for solving inverse problems with deep learning. (https://tachella.github.io/)