Brain-inspired sparse network science for next generation efficient and sustainable AI

COM1 Level 3

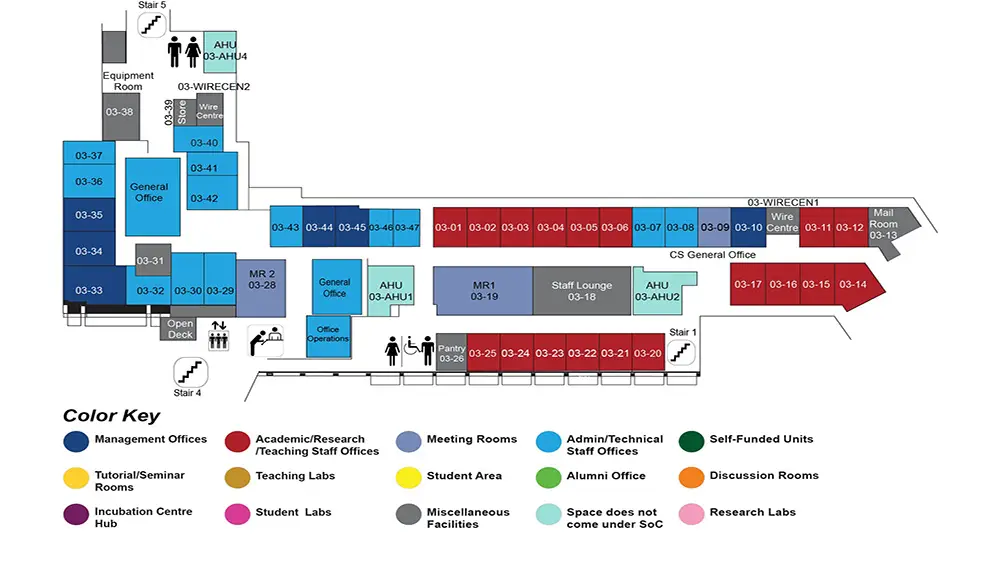

MR1, COM1-03-19

Abstract:

Artificial neural networks (ANNs) power modern AI but are inefficient compared to the brain’s sparse, low-energy architecture. At Tsinghua University's Center for Complex Network Intelligence (CCNI), we study brain-inspired principles to design more efficient AI, focusing on connectivity sparsity, morphology, and neuro-glia coupling.

This talk introduces the Cannistraci-Hebb Training soft rule (CHTs), a brain-inspired, gradient-free method that predicts sparse connectivity based solely on network topology, achieving ultra-sparse networks (~1% connectivity) that outperform fully connected models. We also present a neuromorphic dendritic network model with silent synapses, which excels in visual motion perception tasks and highlights the potential of bio-inspired AI for energy efficiency and transparency.

Bio:

Carlo Vittorio Cannistraci is a theoretical engineer and computational innovator. He is a Chair Professor in the Tsinghua Laboratory of Brain and Intelligence (THBI), where he directs the Center for Complex Network Intelligence (CCNI). The center aims to create pioneering algorithms at the interface between information science, physics of complex systems, complex networks, and machine intelligence, with a focus on brain-inspired computing for efficient AI and big data analysis. These computational methods are applied to precision biomedicine, neuroscience, and the social and economic sciences.