Scalable and Efficient AI: From Supercomputers to Smartphones

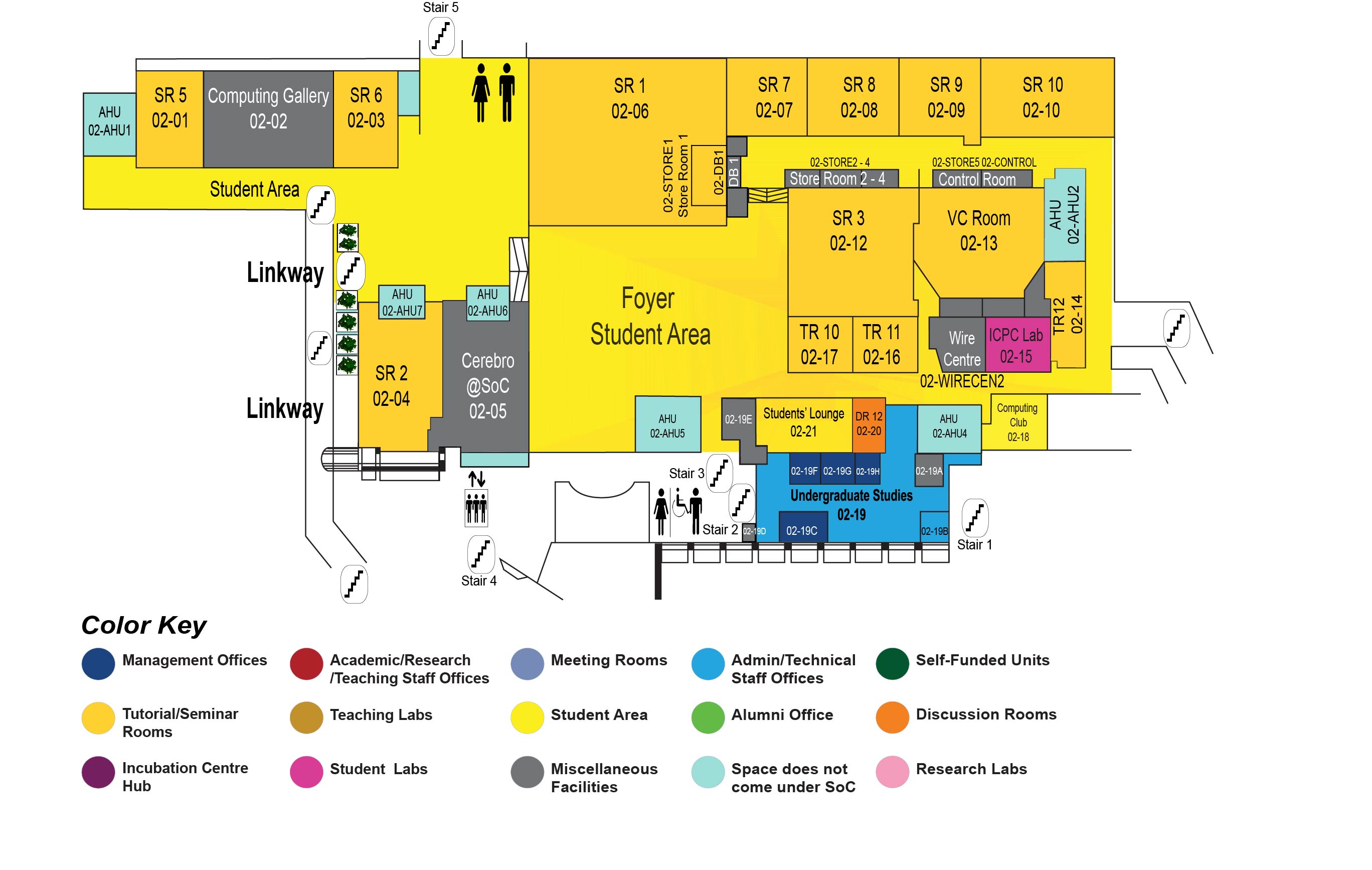

COM1 Level 2

SR2, COM1-02-04

Please register at the following link: https://forms.office.com/r/Ub0WPTntQZ

Refreshments (desserts and beverages) will be served

Abstract:

Billion-parameter artificial intelligence models have shown exceptional performance in a wide variety of tasks, ranging from natural language processing, computer vision, and image generation to mathematical reasoning and algorithm generation. Those models usually require large parallel computing systems, often called "AI Supercomputers", for their initial training. We will outline several techniques, ranging from data ingestion and parallelization to accelerator optimization, that improve the efficiency of such training systems. Yet, training large models is only a small fraction of practical artificial intelligence computations. Efficient inference is even more challenging: models with hundreds of billions of parameters are expensive to use. We continue by discussing model compression and optimization techniques, such as fine-grained sparsity and quantization, that reduce model size and significantly improve efficiency during inference. These techniques may eventually enable inference with powerful models on hand-held devices. All this will be even more useful as we enter the Age of Computation, where we are reaching the limits of human-generated data and lean towards synthetic data and agent interactions to solve complex tasks. We will also outline several approaches in this direction.

Bio:

Torsten Hoefler is a full professor at ETH Zurich, where he directs the Scalable Parallel Computing Laboratory (SPCL). He is also the Chief Architect for Machine Learning at the Swiss National Supercomputing Center and a Long-term Consultant to Microsoft in the areas of large-scale AI and networking. He received his PhD degree in 2007 at Indiana University and started his first professor appointment in 2011 at the University of Illinois at Urbana-Champaign.

Torsten is an ACM Fellow, IEEE Fellow, and Member of Academia Europaea. He received the ACM Gordon Bell Prize in 2019. He is the youngest recipient of the IEEE Sidney Fernbach Award, the oldest career award in High-Performance Computing. He was the first recipient of the ISC Jack Dongarra Award in 2023. He has received many other career awards such as ETH Zurich's Latsis Prize in 2015, the SIAM SIAG/Supercomputing Junior Scientist Prize in 2012, the best student award of the Chemnitz University of Technology in 2005, the IEEE TCSC Young Achievers in Scalable Computing Award in 2013, the IEEE TCSC Award of Excellence in 2019, and both the Young Alumni Award 2014 and the Distinguished Alumni Award in 2023 from Indiana University.

He has published more than 300 papers in peer-reviewed international conferences and journals and co-authored the MPI 3 specification. He has received six best paper awards at the ACM/IEEE Supercomputing Conference in 2010, 2013, 2014, 2019, 2022, and 2023 (SC10, SC13, SC14, SC19, SC22, SC23). Other best paper awards include IPDPS'15, ACM HPDC'15 and HPDC'16, ACM OOPSLA'16, and other conferences. Torsten was elected to the first steering committee of ACM's SIGHPC in 2013 and he was re-elected in 2016, 2019, and 2022. His Erdős number is two (via Amnon Barak) and he is an academic descendant of Hermann von Helmholtz.

Torsten has served as the lead for performance modeling and analysis in the US NSF Blue Waters project at NCSA/UIUC. Since 2013, he has been a professor of computer science at ETH Zurich and has held visiting positions at Argonne National Laboratories, Sandia National Laboratories, and Microsoft in Redmond.

Dr. Hoefler's research aims at understanding the performance of parallel computing systems ranging from parallel computer architecture through parallel programming to parallel algorithms. He is also active in the application areas of Weather and Climate simulations as well as Machine Learning with a focus on Distributed Deep Learning. In those areas, he has coordinated tens of funded projects and both an ERC Starting Grant and an ERC Consolidator Grant on Data-Centric Parallel Programming.