Non-stationary Processing in Deep Networks for Efficient Multimedia Applications

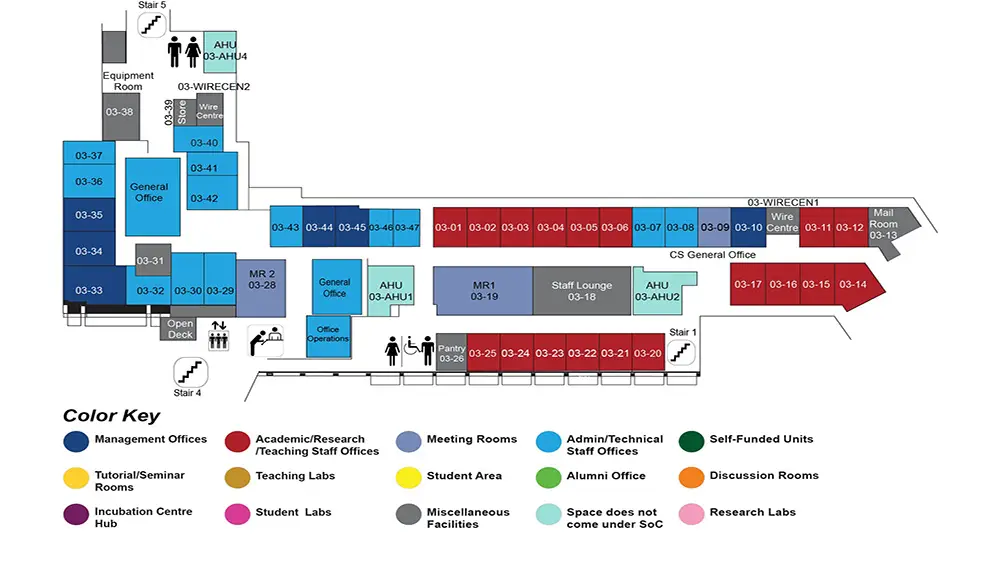

COM1 Level 3

MR1, COM1-03-19

Abstract:

Deep neural networks (DNNs) have become a transformative force across a wide range of multimedia applications, including computer vision, audio processing, and natural language processing. Most DNNs are built on convolutional or linear operations that process features identically across spatial or temporal locations and across samples. This stationarity often leads to sub-optimal computational efficiency, because different samples, and different regions within a single sample, can exhibit very different feature and degradation characteristics. Because a convolution assumes a stationary kernel, it cannot adapt to these non-stationary characteristics, which can yield sub-optimal outputs and wasted computation. The resulting errors cascade through subsequent processing stages and degrade overall performance. To address these challenges, we propose integrating non-stationary feature processing mechanisms into deep neural networks to improve both computational efficiency and the accuracy of feature responses. We explore non-stationarity from three perspectives: (1) non-stationarity across scales, which enhances feature learning over multiple spatial and temporal receptive fields; (2) non-stationarity across samples in the data distribution, in which kernel parameters are dynamically generated for each sample from its own input features; and (3) non-stationarity across spatial and temporal locations, in which variable parameters are dynamically generated for specific local regions within the data. We have conducted extensive experimental evaluations on a wide range of tasks: image classification, restoration, and enhancement; video action recognition; and speech dereverberation and denoising.
The empirical results show that our non-stationary feature processing not only significantly boosts model performance but also improves computational efficiency. These results underscore the potential of non-stationary processing to refine deep neural networks, marking a step toward more sophisticated and efficient neural models for complex multimedia processing tasks.
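As a rough illustration of perspectives (2) and (3), the NumPy sketch below contrasts a stationary 1-D convolution with a per-sample and a per-location variant. It is a minimal sketch under stated assumptions, not the method presented in this work: the generator matrices `G` and `H`, the (mean, std) sample descriptor, and the softmax normalisation of generated kernels are all hypothetical choices. In a real network the generators would be learned sub-networks rather than fixed matrices. Multi-scale processing (perspective 1) is not shown.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _windows(x, k):
    """Zero-pad a (batch, length) signal; return sliding windows of shape (batch, length, k)."""
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad)))
    return sliding_window_view(xp, k, axis=1)


def _softmax(z, axis=-1):
    """Numerically stable softmax along `axis`."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def stationary_conv1d(x, w):
    """Stationary: one kernel w of shape (k,) shared by every sample and position.

    Like DNN "convolution" layers, this is a cross-correlation (no kernel flip)."""
    return _windows(x, w.shape[0]) @ w


def sample_adaptive_conv1d(x, G):
    """Non-stationary across samples: each sample gets its own kernel.

    Hypothetical generator: a per-sample (mean, std) descriptor is mapped by
    G of shape (2, k) to kernel logits, then normalised with softmax."""
    k = G.shape[1]
    desc = np.stack([x.mean(axis=1), x.std(axis=1)], axis=1)  # (batch, 2)
    kernels = _softmax(desc @ G)                               # (batch, k)
    return np.einsum("blk,bk->bl", _windows(x, k), kernels)


def location_adaptive_conv1d(x, H):
    """Non-stationary across positions: each position gets its own kernel.

    Hypothetical generator: the local window itself is mapped by H of
    shape (k, k) to kernel logits, then normalised with softmax."""
    k = H.shape[0]
    win = _windows(x, k)                                       # (batch, length, k)
    kernels = _softmax(win @ H)                                # (batch, length, k)
    return (win * kernels).sum(axis=-1)


# Usage on random data (generators are random here, not learned).
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 32))
k = 5
y_stat = stationary_conv1d(x, np.full(k, 1 / k))
y_samp = sample_adaptive_conv1d(x, rng.standard_normal((2, k)))
y_loc = location_adaptive_conv1d(x, rng.standard_normal((k, k)))
print(y_stat.shape, y_samp.shape, y_loc.shape)
```

With a zero generator, both adaptive variants produce a uniform kernel and reduce to the stationary case with `w = np.full(k, 1 / k)`. The adaptive behaviour appears only once the generator maps different inputs to different kernels, which is the property the abstract attributes to non-stationary processing.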