Principled Analysis of Model Explanations

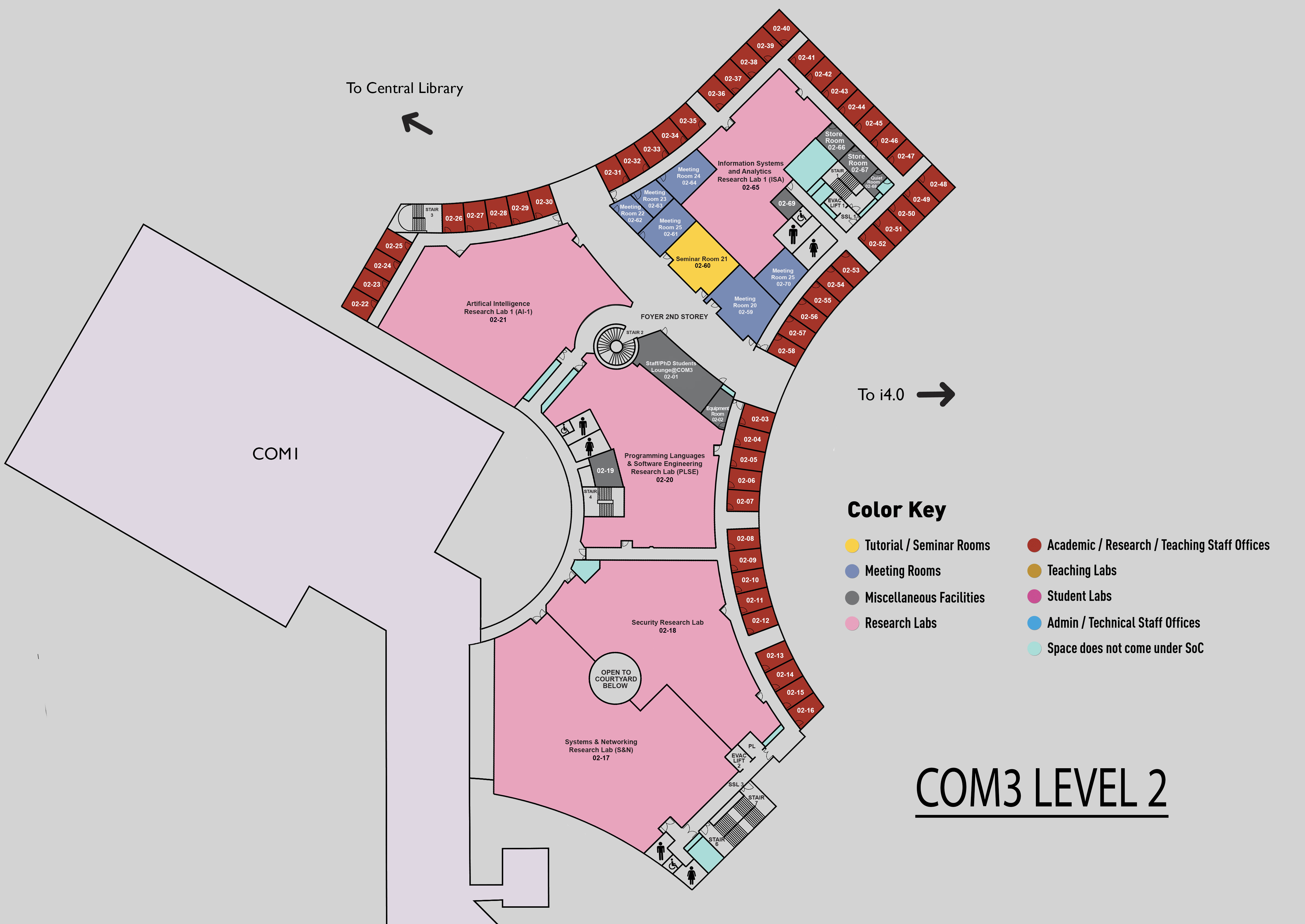

COM3 Level 2

SR21, COM3 02-60

Abstract:

Recent advancements in machine learning have sparked interest in understanding model decision-making through explanation methods, either by interpreting black-box models or designing inherently transparent algorithms. However, choosing the most suitable explanation for a given application remains challenging due to the difficulty of comparing methods. This thesis addresses this challenge by focusing on high-level properties and axioms to assess explanations without the need for direct evaluation.

First, we use axiomatic characterization to derive influence measures for linear and high-dimensional feature attributions. Our results include a new axiomatization for a generalization of the Banzhaf index.

Second, we quantitatively explore properties related to the trustworthiness of explanations. We develop techniques to quantify the privacy risks of explanations and study the spatial robustness of saliency maps. Our findings reveal how certain explanation methods may inadvertently expose sensitive information and highlight how the robustness issues of explanations are tied to CNN architectures.

Third, we explore individualization, a property that is difficult to formalize but valuable for human verification. We propose an interactive framework to extend one-shot explanations and incorporate explainer uncertainty into optimization. An experimental study compares diversity-based and interactive approaches.

In sum, this thesis advances the understanding of model interpretability by combining formal analysis, quantification, and user-centered approaches to explanation.