Energy-efficient spiking neural networks and their applications

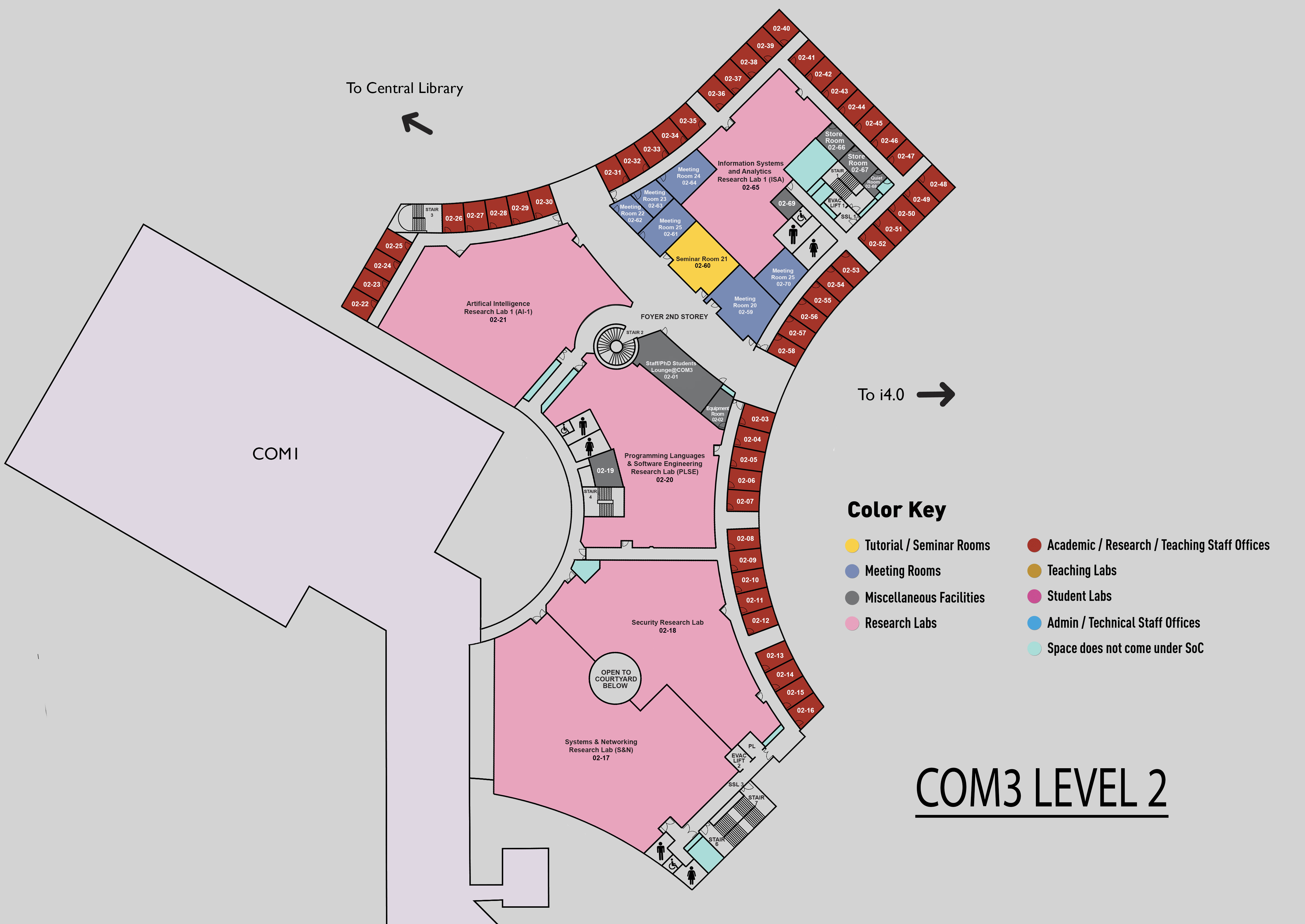

COM3 Level 2

SR21, COM3 02-60

Abstract:

Spiking Neural Networks (SNNs) have emerged as a promising solution for energy-constrained platforms. They are particularly energy-efficient because they replace weight multiplications with additions. However, SNNs are difficult to train because their activations (spikes) are discrete. A popular workaround is to first train an equivalent Convolutional Neural Network (CNN) with standard backpropagation and then transfer its weights to the SNN. Unfortunately, this conversion often causes significant accuracy loss, especially in deeper networks. This thesis addresses these challenges with novel methods that narrow the accuracy gap between CNNs and SNNs. Our proposed CQ training and its advanced version, CQ+ training, ensure minimal accuracy loss during CNN-to-SNN conversion. Benchmarks on MNIST, CIFAR-10, CIFAR-100, and ImageNet demonstrate the effectiveness of these methods, with near-zero conversion losses and state-of-the-art accuracies. With CQ training, a 7-layer VGG-* and a 21-layer VGG-19 achieved 94.16% and 93.44% accuracy, respectively, as SNNs on CIFAR-10. We also show that the VGG-19 structure tolerates low-precision weights, achieving 93.43% and 92.82% accuracy with 9-bit and 8-bit quantized weights, respectively, without retraining. With the advanced CQ+ method, the same VGG-* model achieved 95.06% accuracy on CIFAR-10. The accuracy drop from CNN-to-SNN conversion is only 0.09% with a time window of 600 time steps. On CIFAR-100, the same VGG-* structure achieved 77.27% accuracy with a time window of 500. We also converted popular CNNs, including several ResNet, MobileNet v1/v2, and DenseNet models, into SNNs with near-zero conversion accuracy loss and time windows shorter than 60 time steps.
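The reason a suitably trained CNN can be converted almost losslessly can be illustrated with a single layer of neurons: if a CNN activation is clamped to [0, 1] and quantized to T levels, an integrate-and-fire (IF) neuron with reset-by-subtraction, driven by the same constant input for T steps, fires at exactly that rate. The sketch below is a minimal illustration under stated assumptions (threshold of 1, constant analog input current, floor quantization); it shows the principle and is not the thesis's CQ or CQ+ training procedure.

```python
import numpy as np

def cq_activation(x, T):
    """Clamp to [0, 1], then quantize to T levels with floor.
    Assumed stand-in for a clamped-and-quantized CNN activation."""
    return np.floor(np.clip(x, 0.0, 1.0) * T) / T

def if_spike_rate(x, T, threshold=1.0):
    """Drive IF neurons with constant input x for T steps and return spike count / T.
    Each step only adds x to the membrane potential (no multiplications)."""
    v = np.zeros_like(x, dtype=float)       # membrane potentials
    spikes = np.zeros_like(x, dtype=float)  # spike counts
    for _ in range(T):
        v += x                              # integrate
        fired = v >= threshold              # at most one spike per step
        spikes += fired
        v -= fired * threshold              # reset by subtraction keeps the residual charge
    return spikes / T

# Dyadic inputs avoid floating-point rounding exactly at the quantization boundaries.
x = np.array([-0.5, 0.0, 0.25, 0.3125, 0.75, 1.5])
T = 16
print(np.allclose(cq_activation(x, T), if_spike_rate(x, T)))  # True
```

Reset-by-subtraction matters here: resetting the membrane potential to zero instead would discard the residual charge above threshold and break the match between the spike rate and the quantized activation.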

Current state-of-the-art conversion schemes, including CQ and CQ+, enable SNNs to match CNN accuracy only with large time windows. We therefore first examine the information loss incurred when converting pre-existing ANN models into standard rate-encoded SNNs. Building on these insights, we introduce VR-encoding, a suite of techniques that mitigate information loss during conversion and achieve state-of-the-art SNN accuracy with significantly reduced latency. With VR-encoding, our SNNs achieved Top-1 accuracies of 98.73% on MNIST (with a single time step), 93.71% on CIFAR-10 (8 time steps), and 76.38% on CIFAR-100 (8 time steps).
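One well-known source of the information loss in standard rate encoding is that a spike count over T steps can only represent an activation in [0, 1] as one of the T + 1 levels k / T. The sketch below measures this quantization error for several window sizes, assuming uniformly distributed activations and floor quantization. It illustrates why short windows hurt standard rate-encoded SNNs; it does not model VR-encoding.

```python
import numpy as np

def rate_code(x, T):
    """Value carried by a spike count over T steps: inputs in [0, 1]
    are floor-quantized to one of the levels k / T."""
    return np.floor(np.clip(x, 0.0, 1.0) * T) / T

def mean_abs_error(x, T):
    """Average absolute error introduced by rate-coding activations x over T steps."""
    return float(np.mean(np.abs(np.clip(x, 0.0, 1.0) - rate_code(x, T))))

# Assumed activation distribution: uniform on [0, 1). Real ANN activations are usually skewed.
rng = np.random.default_rng(0)
acts = rng.uniform(0.0, 1.0, size=100_000)

errors = {T: mean_abs_error(acts, T) for T in (1, 8, 64, 600)}
for T, err in errors.items():
    print(f"T={T:4d}  mean |error| = {err:.5f}")  # roughly 1 / (2T) for uniform inputs
```

With T = 1, every activation below 1 collapses to 0, which is why very short windows need a different encoding rather than simply fewer time steps.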

After minimizing the time window size, we evaluated the energy consumption of our SNNs against optimized ANNs to determine whether SNNs truly achieve better energy efficiency. Many SNN studies estimate energy mainly by counting additions, often neglecting significant overheads such as memory accesses and data movement, which can give a distorted picture of efficiency. We therefore performed an in-depth comparison of ANN and SNN energy consumption from a hardware perspective. We developed precise energy formulas for several architectures, including classical multi-level memory hierarchies, commonly used neuromorphic dataflow architectures, and our newly proposed spatial-dataflow architecture. Our results show that SNNs must meet stringent conditions on the time window size T and the sparsity s to both match the accuracy and outperform the energy efficiency of ANNs. For instance, for the VGG16 model with a fixed T of 6, the sparsity rate must exceed 92% to realize energy savings on most of the architectures studied.
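To see why counting additions alone can be misleading, consider a deliberately simplified energy model for one fully connected layer. All per-event energies below are hypothetical placeholders, and the model ignores off-chip traffic, dataflow-specific data reuse, and the specific architectures analysed in the thesis. It is a sketch of the reasoning, not the thesis's formulas. Here s is assumed to be the fraction of synaptic operations that are not triggered by a spike.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class EnergyParams:
    # HYPOTHETICAL per-event energies in picojoules; placeholders, not thesis values.
    e_mac: float = 4.6   # one multiply-accumulate (ANN)
    e_acc: float = 0.9   # one accumulate, i.e. an addition (SNN)
    e_w: float = 5.0     # one weight fetch from on-chip memory
    e_mem: float = 5.0   # read + write of one membrane potential in one time step

def ann_energy(ops: int, p: EnergyParams) -> float:
    """ANN: every synaptic operation fetches a weight and performs one MAC, once."""
    return ops * (p.e_mac + p.e_w)

def snn_energy(ops: int, neurons: int, T: int, s: float, p: EnergyParams) -> float:
    """SNN: in each of T steps, a fraction (1 - s) of synaptic operations is
    triggered by a spike (weight fetch + addition), and every neuron's membrane
    potential is read and written once."""
    synaptic = T * (1.0 - s) * ops * (p.e_acc + p.e_w)
    membrane = T * neurons * p.e_mem
    return synaptic + membrane

def break_even_sparsity(ops: int, neurons: int, T: int, p: EnergyParams) -> Optional[float]:
    """Smallest s with snn_energy <= ann_energy, or None if no s in [0, 1] suffices."""
    budget = ann_energy(ops, p) - T * neurons * p.e_mem
    if budget < 0:
        return None  # membrane traffic alone already costs more than the whole ANN layer
    return max(0.0, 1.0 - budget / (T * ops * (p.e_acc + p.e_w)))

# Hypothetical fully connected layer: 1000 output neurons, fan-in 256, T = 6.
neurons, fan_in, T = 1000, 256, 6
ops = neurons * fan_in
adds_only = EnergyParams(e_w=0.0, e_mem=0.0)  # the "count additions only" view
print(break_even_sparsity(ops, neurons, T, adds_only))       # about 0.15
print(break_even_sparsity(ops, neurons, T, EnergyParams()))  # about 0.73
```

With these made-up constants, accounting for weight fetches and membrane-potential traffic raises the break-even sparsity from about 15% to about 73%. The 92% figure for VGG16 at T = 6 comes from the thesis's architecture-specific analysis, not from this toy model.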

We then selected EEG and ECG classification as practical applications. For EEG classification on the DEAP dataset, a 3-layer pretrained SNN achieved accuracies of 78.87% and 76.5% for the valence and arousal dimensions, respectively. By training a model on one dimension and fine-tuning it on the other, we achieved even higher accuracies: 82.75% for valence and 84.22% for arousal. To our knowledge, this is the smallest SNN with the highest accuracy for this task. The energy consumption of our SNNs for the valence and arousal dimensions is 13.8% of that of our CNN-based solutions. For ECG, we introduced a specialized ASIC design for SNNs, optimized for ultra-low-power wearable devices. On the MIT-BIH dataset, our SNN achieved a state-of-the-art accuracy of 98.29%, the highest among comparable SNN systems, while consuming only 31.39 nJ per inference and 6.1 µW of power, making it the most accurate and energy-efficient model in its class. Additionally, we investigated the trade-offs between energy consumption and accuracy for SNNs compared with quantized ANNs, and we offer recommendations for deploying SNNs effectively.
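How a small SNN classifier performs inference using only additions can be sketched with a feed-forward IF network and a spike-count readout. Everything specific here is an assumption for illustration: the layer sizes (32 → 64 → 32 → 2, i.e. three weight layers), Bernoulli rate coding of the inputs, and random weights standing in for pretrained ones. DEAP records 32 EEG channels, but the thesis's actual feature extraction and architecture are not described in this abstract.

```python
import numpy as np

def snn_forward(x, weights, T, rng, threshold=1.0):
    """Run a feed-forward SNN of IF neurons for T steps.

    x: input features scaled to [0, 1], encoded as Bernoulli spike trains.
    weights: list of (fan_in, fan_out) matrices, one per layer.
    Returns output spike counts and the number of synaptic additions performed.
    """
    v = [np.zeros(w.shape[1]) for w in weights]  # membrane potential per layer
    counts = np.zeros(weights[-1].shape[1])      # output spike counts (readout)
    additions = 0
    for _ in range(T):
        s = (rng.random(x.shape) < x).astype(float)  # rate-coded input spikes
        for i, w in enumerate(weights):
            active = np.flatnonzero(s)
            v[i] += w[active].sum(axis=0)  # binary inputs: add weight rows, no multiplications
            additions += active.size * w.shape[1]
            s = (v[i] >= threshold).astype(float)
            v[i] -= s * threshold          # reset by subtraction
        counts += s
    return counts, additions

rng = np.random.default_rng(0)
sizes = [32, 64, 32, 2]  # HYPOTHETICAL: 32 input features -> 2 classes (e.g. low/high valence)
# Random stand-ins for pretrained weights.
weights = [rng.normal(0.0, 0.3, size=(a, b)) for a, b in zip(sizes[:-1], sizes[1:])]
x = rng.random(32)  # stand-in for one normalized feature vector
counts, adds = snn_forward(x, weights, T=20, rng=rng)
print("predicted class:", int(np.argmax(counts)), "| synaptic additions:", adds)
```

The addition counter is the kind of operation count that many SNN energy estimates rely on. It omits the memory accesses that a hardware-level energy analysis would also charge for.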