Efficient and Effective Bandit Algorithms

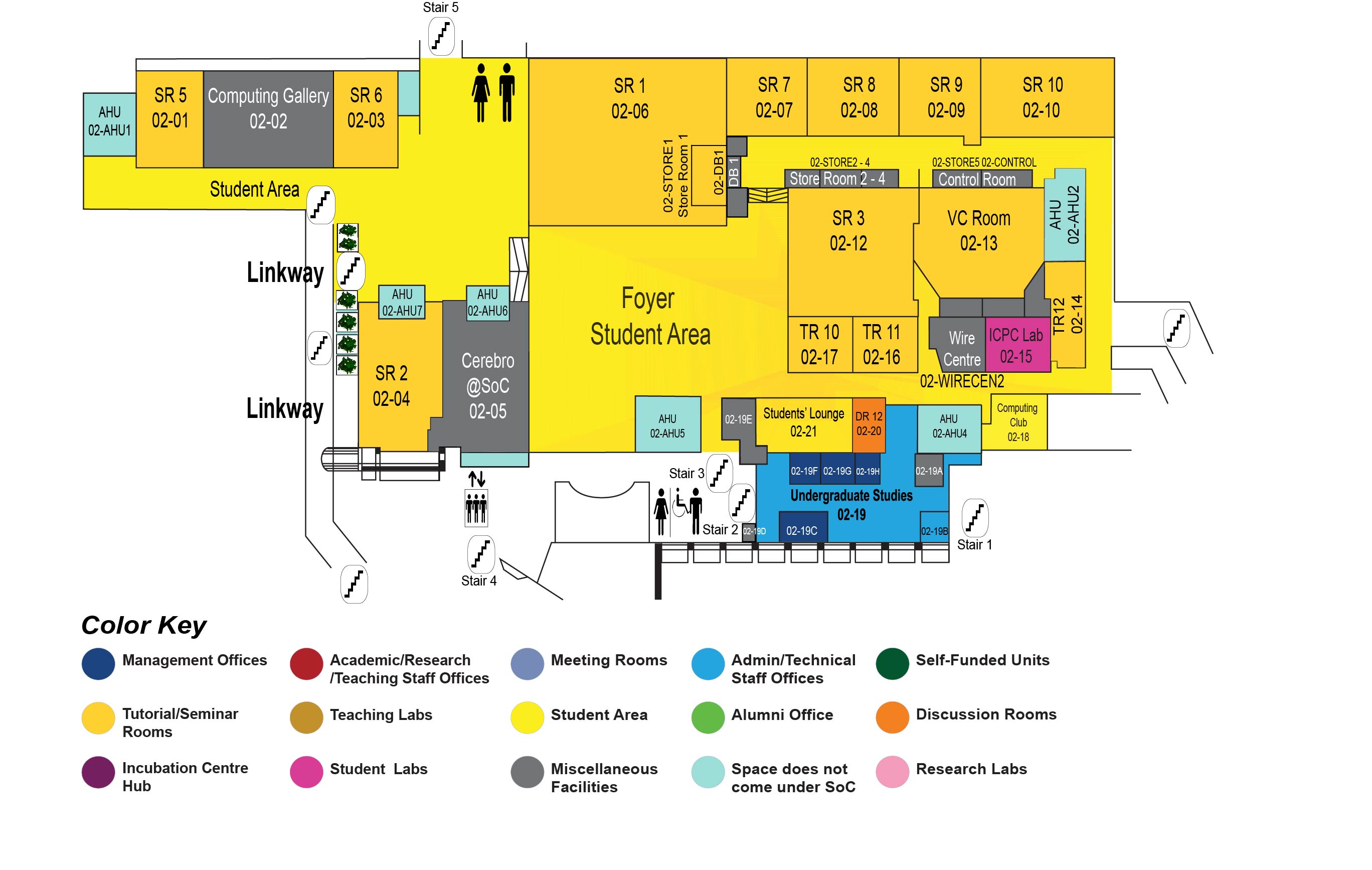

COM1 Level 2

SR7, COM1-02-07

Abstract:

The multi-armed bandit (MAB) problem provides an elementary model for exploration-exploitation tradeoffs and finds many real applications such as medical trials, crowdsourcing, and marketing.

The field of MAB boasts a vast array of algorithms, consistently aimed at developing optimal solutions. In theory, an ideal bandit algorithm should possess problem-independent regret bounds (also known as worst-case or minimax optimal), while also enjoying good problem-dependent regret bounds. Practically, it is desirable for these algorithms to demonstrate both low regret and high computational efficiency in experiments.

On the path towards the optimal solution, we observed that three important problems remained open:

1. Thompson Sampling: Thompson sampling is a classic approach for bandit problems that is known to be empirically efficient and has been widely adopted in practice; however, whether it can achieve the optimal worst-case regret is a long-standing open problem.

2. Batched Bandit: One of the most important issues in machine learning is to make learning scalable. A natural way to speed up the learning process is to learn the objective function in parallel. The fundamental question in batched bandit is: How can we make use of information parallelism while efficiently balancing the exploration-exploitation trade-off?

3. Streaming Bandit: Due to applications with massive data, an important problem in bandit is learning with limited memory. In streaming bandit, only the arm stored in the memory can be evaluated. The question here is to minimize the sample costs/regret with the limited memory constraint.

In this thesis, we study the three problems outlined above. We propose optimal solutions for these problems, and empirical evaluations (for Thompson Sampling and batched bandits) confirm the efficiency and optimality of the proposed algorithms.