Out-Of-Distribution Learning For Robust Vision Perception

COM1 Level 3

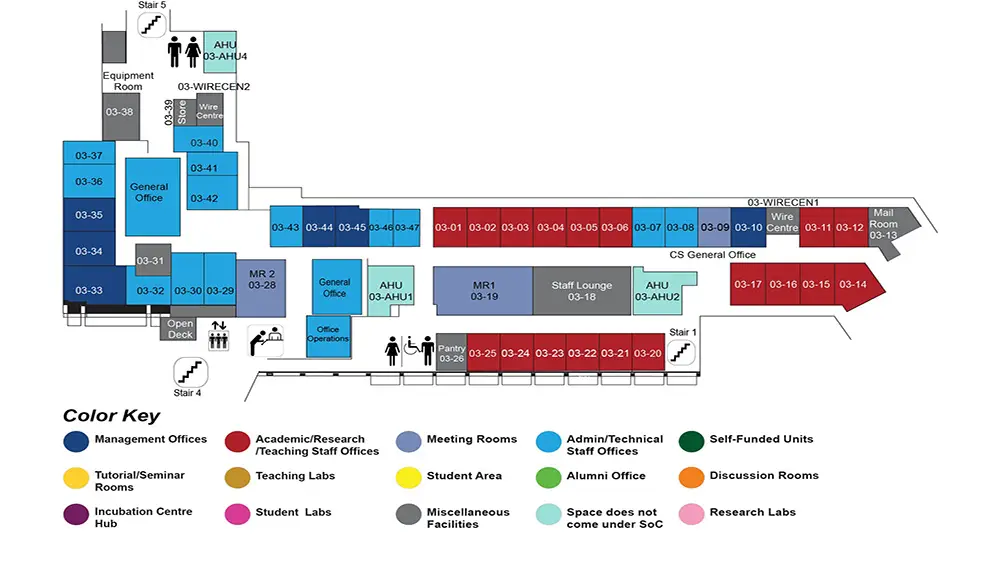

MR1, COM1-03-19

Abstract:

Despite significant advancements, most deep learning models are built on a closed-world assumption: they are expected to encounter only data drawn from the same distribution as their training set. However, the real world is open and unpredictable. Real-world data are often heterogeneous, subject to distribution shift, and characterized by out-of-distribution (OOD) samples. This poses substantial challenges for intelligent systems, which must handle diverse OOD inputs effectively. This thesis therefore focuses on data-efficient OOD learning, which aims to handle data that deviate significantly from the training set.

We first study the use of OOD data to improve generalization on the target task, i.e., domain adaptation (DA). Because annotating target data is costly, researchers have turned their attention to the unsupervised DA (UDA) setting, in which unlabeled data from the target domain are available for training alongside labeled source data. To mitigate the domain misalignment caused by cross-domain OOD data, we propose a class-aware UDA method with optimal assignment and a pseudo-label refinement strategy.

While recent DA advancements are notable, many approaches are task-specific. Furthermore, they presume that the prior label distributions of the two domains are identical. As a result, these methods may not be applicable in many real-world scenarios, particularly when label shift occurs, i.e., when the cross-domain OOD data are imbalanced. To deal with domain shift and label shift in a unified way, we propose a non-parametric generative classifier that learns unbiased target embedding distributions. Our approach is effective under various DA settings in the presence of label distribution shift.

Another crucial challenge is the deployment of real-world robotic systems in dynamic environments where domains change continually. To tackle non-stationary OOD data, we propose a continual test-time adaptation (TTA) framework, which enables robots to adapt quickly to new environments and improve their performance over time. Our model improves generalization by consolidating information across domains with short-term and long-term semantic memories.

The OOD data considered above are assumed to come from a closed set of categories, whereas real-world samples can come from an open world. To address the open-world OOD problem in 3D scenes, we propose a 3D open-vocabulary segmentation model that leverages freely available image-caption pairs from the internet. Built on 3D Gaussian Splatting, our model demonstrates the ability to reconstruct and segment arbitrary objects in 3D with high visual quality and efficiency.