Grasp, Feel, Act: Towards Object Manipulation Using Vision And Touch

COM3 Level 2

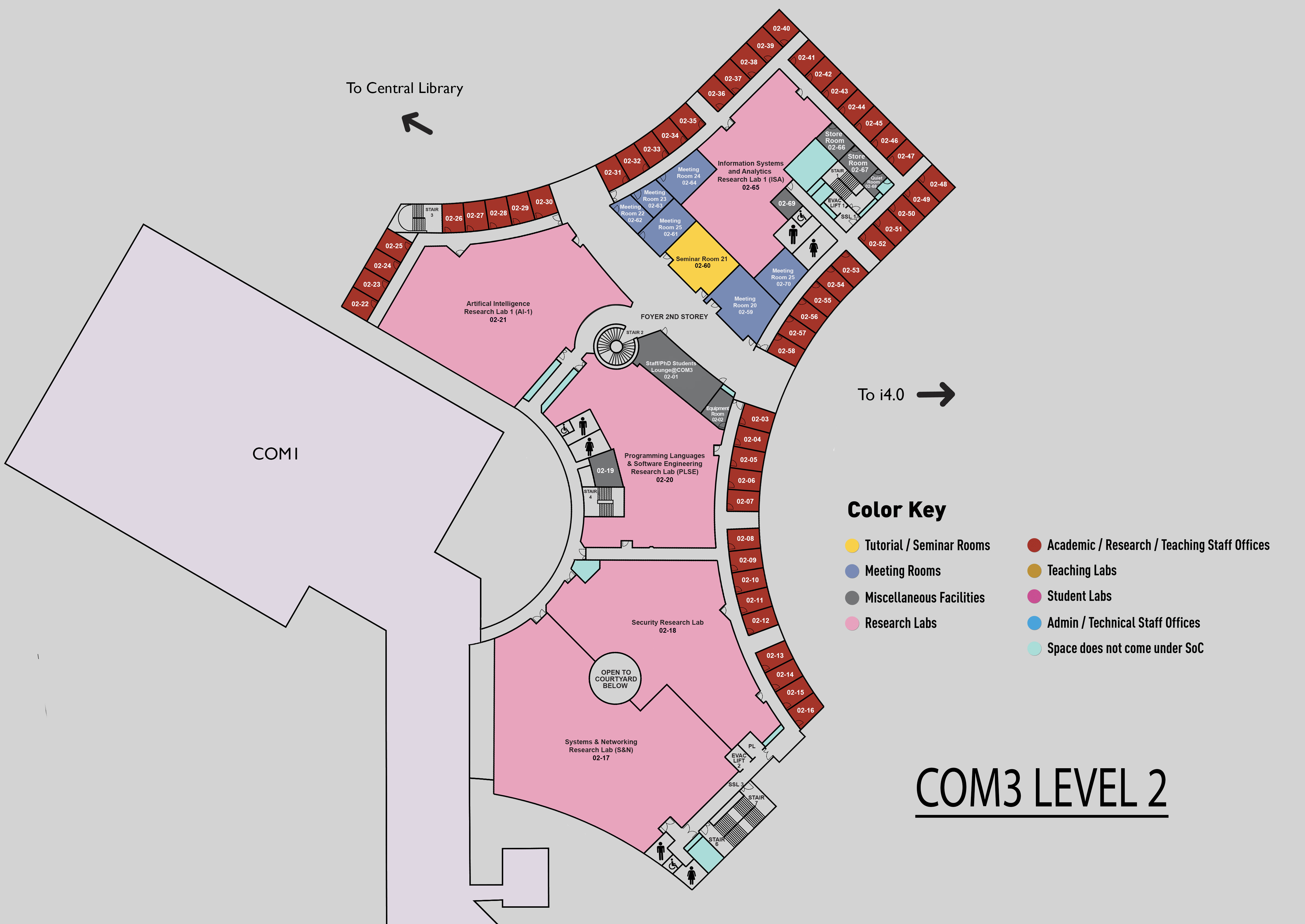

MR25, COM3 02-70

Abstract:

Manipulating objects in unstructured, complex environments such as human homes is challenging. Dissecting this problem and laying out a clear path to successful object manipulation is therefore essential. In this thesis, we investigate object manipulation through three main aspects of robotics: grasp, feel, and act.

The first part of the thesis focuses on the grasp, in which the robot observes the environment through vision and reasons about grasps. We introduce Grasp Ranking and Criteria Evaluation (GRaCE), a modular probabilistic grasp synthesis framework. This novel approach employs hierarchical rule-based logic and a rank-preserving utility function to optimize grasps based on various criteria such as stability, kinematic constraints, and goal-oriented functionalities. Additionally, we propose GRaCE-OPT, a hybrid optimization strategy that combines gradient-based and gradient-free methods to navigate the complex, non-convex utility function effectively. Experimental results in both simulated and real-world scenarios show that GRaCE requires fewer samples to achieve comparable or superior performance relative to existing methods. The modular architecture of GRaCE allows for easy customization and adaptation to specific application needs.
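To make the idea of a rank-preserving utility over prioritized criteria more concrete, here is a minimal Python sketch. It is not the GRaCE formulation, which the abstract does not spell out. It assumes that criteria are grouped into priority tiers, that each tier has an independent probability of being satisfied, and that a grasp's rank is the number of leading tiers it satisfies. The function names `expected_rank_utility` and `best_grasp` and the example probabilities are hypothetical.

```python
def expected_rank_utility(tier_probs):
    """Expected number of leading priority tiers a grasp satisfies.

    tier_probs[i] is the (assumed independent) probability that the grasp
    satisfies every criterion in tier i, with tier 0 the highest priority.
    A lower tier only counts if all higher tiers are also satisfied, so a
    grasp cannot compensate for failing a high-priority tier.
    """
    utility = 0.0
    all_satisfied_so_far = 1.0
    for p in tier_probs:
        all_satisfied_so_far *= p        # P(tiers 0..i are all satisfied)
        utility += all_satisfied_so_far  # E[rank] = sum_k P(rank >= k)
    return utility


def best_grasp(candidates):
    """Return the name of the candidate with the highest expected utility.

    candidates maps a grasp name to its list of tier probabilities.
    """
    return max(candidates, key=lambda name: expected_rank_utility(candidates[name]))


# Hypothetical candidates: tiers are (stable, reachable, task-appropriate).
candidates = {
    "top_grasp": [0.9, 0.8, 0.2],
    "side_grasp": [0.6, 0.9, 0.9],
}
chosen = best_grasp(candidates)
```

With deterministic criteria (probabilities of 0 or 1) the utility reduces to the rank itself, which is the sense in which this toy version preserves ranks. A sampler or optimizer would then search for grasp parameters that maximize this score.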

The second part of the thesis investigates the feel, in which we study the physical feedback that the robot senses after grasping, in two settings: Direct Contact and Indirect Contact. For Direct Contact settings, we introduce an event-driven visual-tactile perception model with multi-modal spike-based learning. Our proposed Visual-Tactile Spiking Neural Network (VT-SNN) enables fast perception when coupled with event sensors. We evaluate our visual-tactile system (using the NeuTouch and Prophesee event camera) on two robot tasks: container classification and rotational slip detection. We observe good accuracies on both tasks relative to standard deep learning methods. We have made our visual-tactile datasets freely available to encourage research on multi-modal event-driven robot perception, which is a promising approach towards intelligent power-efficient robot systems.

For Indirect Contact settings, we explore Extended Tactile Perception, which is motivated by observing human tactile feedback. Humans display the remarkable ability to sense the world through tools and other held objects. For example, we can pinpoint impact locations on a held rod and tell apart different textures using a rigid probe. In this work, we consider how to enable robots to have a similar capacity, i.e., to embody tools and extend perception using standard grasped objects. We propose that vibrotactile sensing using dynamic tactile sensors on the robot fingers, along with machine learning models, enables robots to decipher contact information that is transmitted as vibrations along rigid objects. We report on extensive experiments using the BioTac micro-vibration sensor and an event-based dynamic sensor, the NUSkin, capable of multi-taxel sensing at 4 kHz. We demonstrate that fine localization on a held rod is possible using our approach (with errors less than 1 cm on a 20 cm rod). Next, we show that vibrotactile perception can lead to reasonable grasp stability prediction during object handover and accurate food identification using a standard fork. We find that multi-taxel vibrotactile sensing at a sufficiently high sampling rate led to the best performance across the various tasks and objects. Taken together, our results provide evidence and guidelines for using vibrotactile perception to extend tactile perception, leading to enhanced competency with tools and better physical human-robot interaction.

The last part of the thesis focuses on the act, in which we explore several impedance controllers that act intelligently given tactile feedback. The main idea of the proposed controllers is to model compliance through a variable impedance, virtual force, and equilibrium point decomposition. We experiment with two challenging real-world tasks: skin swabbing and PCR swab collection. Using the proposed controllers, we achieve a success rate of around 70% across all tasks in a controlled laboratory setup. Moreover, our initial findings indicate that the Compliant Equilibrium Point Decomposition controller performs smoothly across both tasks, supporting the generality of the proposed controller.
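As a rough illustration of how a variable impedance, a virtual force, and an equilibrium point can combine into a single command, the following Python sketch implements a textbook one-dimensional impedance law. It is not the thesis's controller. The stiffness schedule, the gains, and the function names `variable_stiffness` and `impedance_command` are assumptions made for this example.

```python
def variable_stiffness(contact_force, k_max=500.0, k_min=50.0, softening=100.0):
    """Hypothetical stiffness schedule (N/m): soften as contact force grows.

    The stiffness falls linearly with the magnitude of the measured contact
    force (N) and is clamped from below at k_min.
    """
    return max(k_min, k_max - softening * abs(contact_force))


def impedance_command(x, v, x_eq, contact_force, damping=20.0, f_virtual=0.0):
    """Commanded force (N) from a 1-D spring-damper impedance law.

    x, v          : current end-effector position (m) and velocity (m/s)
    x_eq          : equilibrium point the virtual spring pulls towards (m)
    contact_force : measured tactile/contact force used to adapt stiffness (N)
    f_virtual     : extra virtual force added to the command (N)
    """
    stiffness = variable_stiffness(contact_force)
    return stiffness * (x_eq - x) - damping * v + f_virtual


# Free motion: stiff spring pulls the tool towards the equilibrium point.
free_space = impedance_command(x=0.0, v=0.0, x_eq=0.01, contact_force=0.0)

# In contact: the same displacement yields a gentler command.
in_contact = impedance_command(x=0.0, v=0.0, x_eq=0.01, contact_force=4.0)
```

Lowering the stiffness under contact is one common way to obtain compliance in tasks such as swabbing, where the tool must stay on a soft surface without pressing hard. The equilibrium point and virtual force provide separate handles for where the tool is drawn to and how much it pushes.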