Talk 1: Private Prompt Learning for Large Language Models

Talk 2: Memorization and Individualized Privacy in Machine Learning Models

Franziska Boenisch, Tenure Track Faculty Member, CISPA Helmholtz Center for Information Security

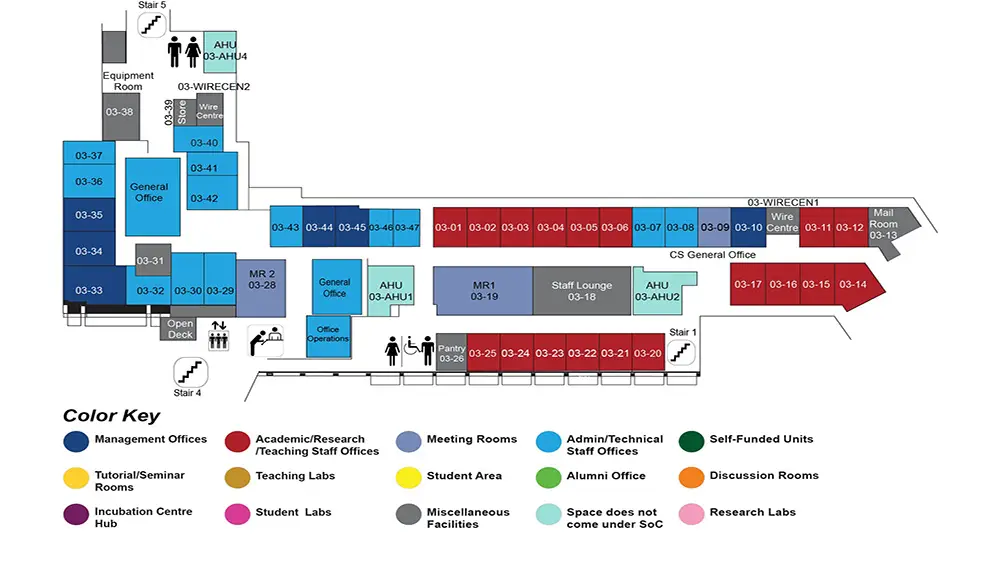

COM1 Level 3

MR1, COM1-03-19

Talk 1: Adam Dziedzic

Abstract: Large language models (LLMs) are excellent in-context learners. However, the sensitivity of data contained in prompts raises privacy concerns. Our work first shows that these concerns are valid: we instantiate a simple but highly effective membership inference attack against the data used to prompt LLMs. To address this vulnerability, one could forgo prompting and resort to fine-tuning LLMs with known algorithms for private gradient descent. However, this comes at the expense of the practicality and efficiency offered by prompting. Therefore, we propose to privately learn to prompt. We first show that soft prompts can be obtained privately through gradient descent on downstream data. However, this is not the case for discrete prompts. Thus, we orchestrate a noisy vote among an ensemble of LLMs presented with different prompts, i.e., a flock of stochastic parrots. The vote privately transfers the flock’s knowledge into a single public prompt. We show that LLMs prompted with our private algorithms closely match the non-private baselines.
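
The noisy vote mentioned in the abstract can be illustrated with a minimal sketch. It assumes each ensemble member has already produced a class label for the same query, and that Gaussian noise is added to the vote counts before taking the argmax. The function name `noisy_vote`, the example labels, and the noise scale `sigma` are hypothetical choices for illustration; the aggregation and privacy accounting used in the paper may differ.

```python
import random
from collections import Counter


def noisy_vote(votes, labels, sigma, rng):
    """Return the label whose vote count is highest after adding Gaussian noise.

    votes  -- one predicted label per ensemble member (assumed given)
    labels -- all possible labels, so labels with zero votes also receive noise
    sigma  -- standard deviation of the noise (illustrative value, not from the paper)
    rng    -- a random.Random instance, passed in for reproducibility
    """
    counts = Counter(votes)
    # Perturb every count, including labels nobody voted for.
    noisy_counts = {
        label: counts.get(label, 0) + rng.gauss(0.0, sigma) for label in labels
    }
    # Release only the winning label, never the raw counts.
    return max(noisy_counts, key=noisy_counts.get)


# Hypothetical example: ten differently prompted models vote on one query.
labels = ["positive", "negative"]
ensemble_votes = ["positive"] * 8 + ["negative"] * 2
released_label = noisy_vote(ensemble_votes, labels, sigma=1.0, rng=random.Random(0))
print(released_label)
```

Only the winning label leaves the ensemble, so labels released this way can be paired with public inputs to build a prompt without exposing any single member's private prompt data directly.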

Paper:

NeurIPS2023: https://openreview.net/forum?id=u6Xv3FuF8N

Bio: Adam is a Tenure Track Faculty Member at CISPA Helmholtz Center for Information Security, co-leading the SprintML group. His research is focused on secure and trustworthy Machine Learning as a Service (MLaaS). Adam designs robust and reliable machine learning methods for training and inference of ML models while preserving data privacy and model confidentiality. Adam was a Postdoctoral Fellow at the Vector Institute and the University of Toronto, and a member of the CleverHans Lab, advised by Prof. Nicolas Papernot. He earned his PhD at the University of Chicago, where he was advised by Prof. Sanjay Krishnan and worked on input and model compression for adaptive and robust neural networks. Adam obtained his Bachelor's and Master's degrees from the Warsaw University of Technology in Poland. He also studied at DTU (Technical University of Denmark) and carried out research at EPFL, Switzerland. Adam also worked at CERN (Geneva, Switzerland), Barclays Investment Bank in London (UK), Microsoft Research (Redmond, USA), and Google (Madison, USA).

Talk 2: Franziska Boenisch

Abstract: In this talk, I will cover my two latest lines of work on individualized privacy for supervised machine learning and memorization in self-supervised learning (SSL). Privacy preservation in supervised learning is required to prevent leakage of sensitive information from the trained models. The common approach to implementing privacy is to integrate differential privacy (DP) into the training procedure. Standard DP sets one privacy budget for the entire training set, independent of the preferences and requirements of individual data points. We argue that this approach is limited because different individuals may have different privacy requirements. Building on the standard algorithm for privacy-preserving ML, we propose the Individualized DPSGD algorithm, which not only respects individuals’ privacy preferences but also leverages training data more efficiently, thereby yielding better ML models. For SSL, the leakage of sensitive information from the encoders is not as well understood as in supervised learning. To analyze the privacy implications in a more structured way, we propose the first definition of SSL memorization. Based on this definition, we evaluate memorization in various SSL frameworks and for various encoder architectures. We identify which individual input samples are most prone to memorization and are therefore more exposed to privacy risks.
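
To make the idea of per-individual privacy budgets concrete, here is a minimal sketch of one DP-SGD-style step. It assumes that individualization is realized through per-example sampling probabilities, so data points with looser privacy preferences are included in batches more often. That mechanism, the function names `clip` and `individualized_dp_step`, and all parameter values are illustrative assumptions and not necessarily the algorithm presented in the talk. No privacy accounting is performed here.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def individualized_dp_step(per_example_grads, sample_probs, max_norm,
                           noise_multiplier, rng):
    """Return one noisy averaged gradient.

    per_example_grads -- one gradient vector per training example (assumed given)
    sample_probs      -- per-example inclusion probability (hypothetical knob:
                         higher for examples with a larger privacy budget);
                         must not sum to zero
    """
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad, prob in zip(per_example_grads, sample_probs):
        # Poisson sampling: each example joins the batch independently.
        if rng.random() < prob:
            clipped = clip(grad, max_norm)
            total = [t + c for t, c in zip(total, clipped)]
    # Gaussian noise calibrated to the clipping norm, as in standard DP-SGD.
    noisy = [t + rng.gauss(0.0, noise_multiplier * max_norm) for t in total]
    expected_batch_size = sum(sample_probs)
    return [n / expected_batch_size for n in noisy]


# Hypothetical example: the second example tolerates more privacy loss,
# so it is sampled more often than the first.
grads = [[3.0, 4.0], [0.0, 1.0]]
update = individualized_dp_step(grads, sample_probs=[0.2, 0.8], max_norm=1.0,
                                noise_multiplier=1.0, rng=random.Random(0))
print(update)
```

In a real system, the sampling probabilities and noise multiplier would have to be derived from each individual's privacy budget by a privacy accountant. The values above are placeholders.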

Papers:

NeurIPS2023: https://proceedings.neurips.cc/paper_files/paper/2023/hash/3cbf627fa24fb6cb576e04e689b9428b-Abstract-Conference.html

ICLR2024: https://openreview.net/pdf?id=KSjPaXtxP8

Bio: Franziska is a tenure-track faculty member at the CISPA Helmholtz Center for Information Security, where she co-leads the SprintML lab. Previously, she was a Postdoctoral Fellow at the University of Toronto and the Vector Institute, advised by Prof. Nicolas Papernot. Her current research centers on private and trustworthy machine learning. Franziska obtained her Ph.D. at the Computer Science Department at Freie Universität Berlin, where she pioneered the notion of individualized privacy in machine learning. During her Ph.D., Franziska was a research associate at the Fraunhofer Institute for Applied and Integrated Security (AISEC), Germany. She received a Fraunhofer TALENTA grant for outstanding female early career researchers, the German Industrial Research Foundation prize for her research on machine learning privacy, and the Fraunhofer ICT Dissertation Award 2023.