Revealing Privacy Risks in Privacy-Preserving Machine Learning: A Study of Data Inference Attacks

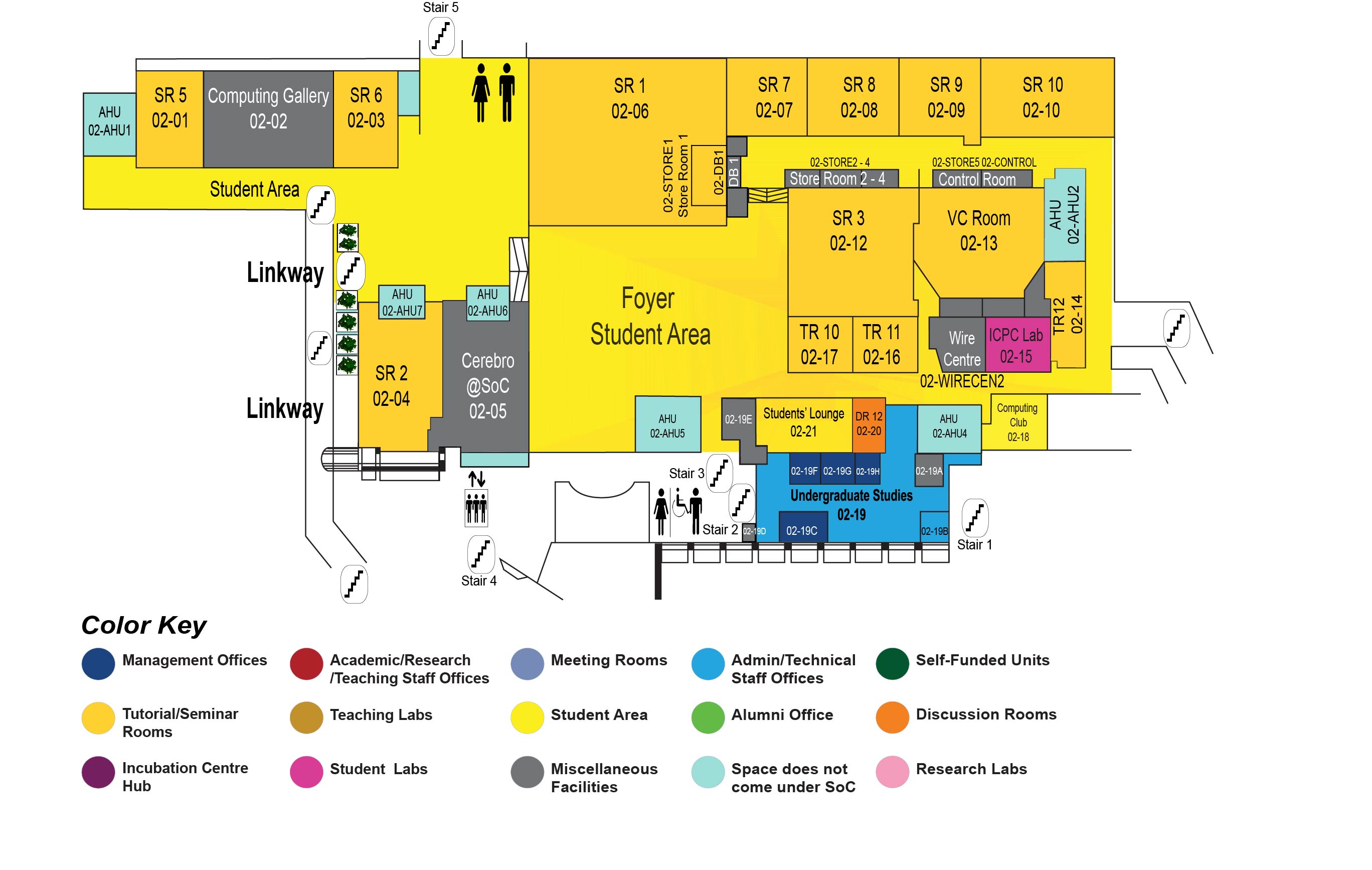

COM1 Level 2

SR5, COM1-02-01

Abstract:

The success of modern machine learning (ML) models heavily relies on access to large amounts of training data. However, obtaining sufficient data is challenging for institutions like hospitals and banks, where data privacy is critical. To address this, significant research has focused on various stages of ML, including data preprocessing, model training, model explanation, and model sharing, to leverage isolated datasets for model training while maintaining privacy. This thesis examines privacy-preserving methods across these stages and assesses their privacy risks through data inference attacks.

First, we study the data preprocessing method called InstaHide, which corrupts visual features of training images using non-linear transformations to allow centralized training of private data. Despite the transformations, we demonstrate that the encoded images still reveal information about the original images. We develop a fusion-denoising network to reconstruct these original images.

Second, we investigate the privacy risks in vertical federated learning (VFL). VFL combines intermediate outputs from local models trained by different parties into a global model, aiming to preserve privacy since private data is not directly shared. However, using the memorization nature of ML models, we design inference attacks showing that final federated models, including logistic regression, decision trees, and neural networks, can still leak private training data.

Third, we explore the privacy risks of model explanation methods using Shapley values. Shapley value-based model explanation methods are widely used by ML service providers like Google, Microsoft, and IBM. Through feature inference attacks, we show that private model inputs can be reconstructed from Shapley value-based explanation reports.

Finally, we examine the privacy risks in generative model sharing, especially with diffusion models. Generative models can be used to share learned patterns from local datasets among different parties to bridge isolated data islands. However, this can introduce privacy and fairness risks. Our attacks demonstrate that the model receiver can uncover sensitive feature distributions in the model sharer's dataset, and the sharer can manipulate the receiver's downstream models by altering the diffusion model's training data distribution.