On Error Bounds for Kernel-Based Optimization and Integration with Black-Box Queries

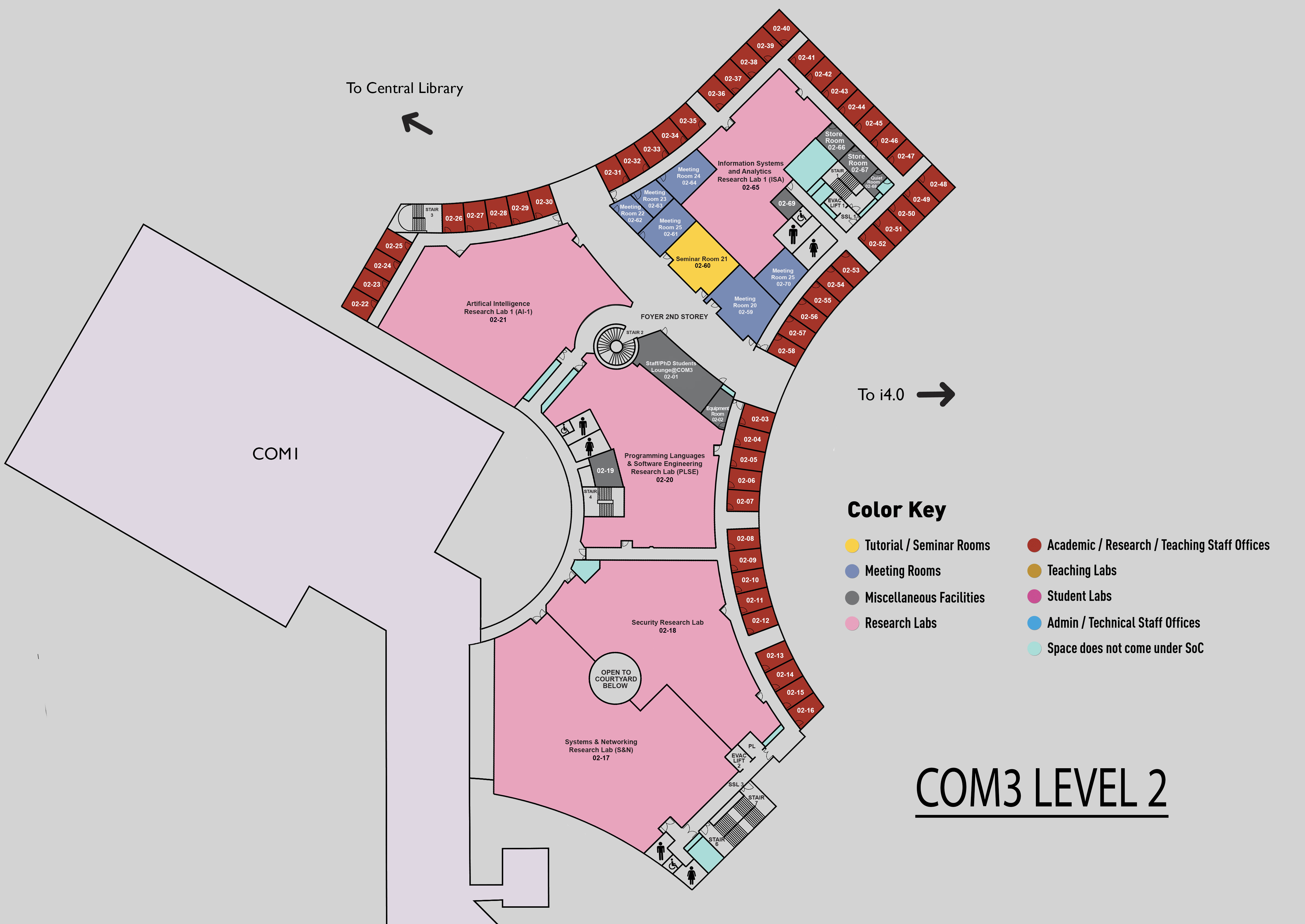

COM3 Level 2

MR25, COM3 02-70

Abstract:

In the field of machine learning, the estimation or optimization of black-box functions using bandit feedback is a critical problem. This involves sequentially selecting actions to minimize the losses (regrets), possibly in large continuous action spaces. In this seminar, we will focus on reproducing kernel Hilbert spaces (RKHS) as the assumed space for the target function, which is a practical and versatile assumption compared to other constraints such as convexity. This problem setting is also known as kernel-based Gaussian process (GP) bandits, where a GP prior is placed over the unknown function and updated based on point query results.

This seminar will address two key chanllenges in GP bandits: computing the integral and finding the optimal value. These two problems, known as Bayesian quadrature (BQ) and Bayesian optimization (BO) respectively, differ significantly in their focus. Specifically, BO is concerned with the exploration and exploitation of narrow peaks hidden in mostly flat domains. In contrast, BQ aims to estimate the overall characteristics of the target function, assigning "equal" importance to every data point. While this distinction is intuitive, it is of interest to explore their theoretical gaps or even connections. To this end, we investigate the asymptotic limits of regret bounds for these problems, including lower bounds, upper bounds for new algorithms, and various problem settings.

Additionally, we analyze the regret bounds for another crucial numerical problem, the normalizing constant (NC), parameterized by temperature, and requiring a multiplicative guarantee. We show interesting connections between BO, BQ and NC. In particular, the difficulty level of NC varies between BQ and BO with respect to the temperature: as the temperature approaches zero, the problem is similar to BO, while as the temperature approaches infinity, the problem is similar to BQ.

Overall, this seminar covers the understanding of regret bounds for kernel-based GP bandit problems, providing both theoretical and practical insights into their performance in various settings, with an emphasis on function optimization and numerical integration tasks.