Algorithms for Differential Privacy under Distributed Settings

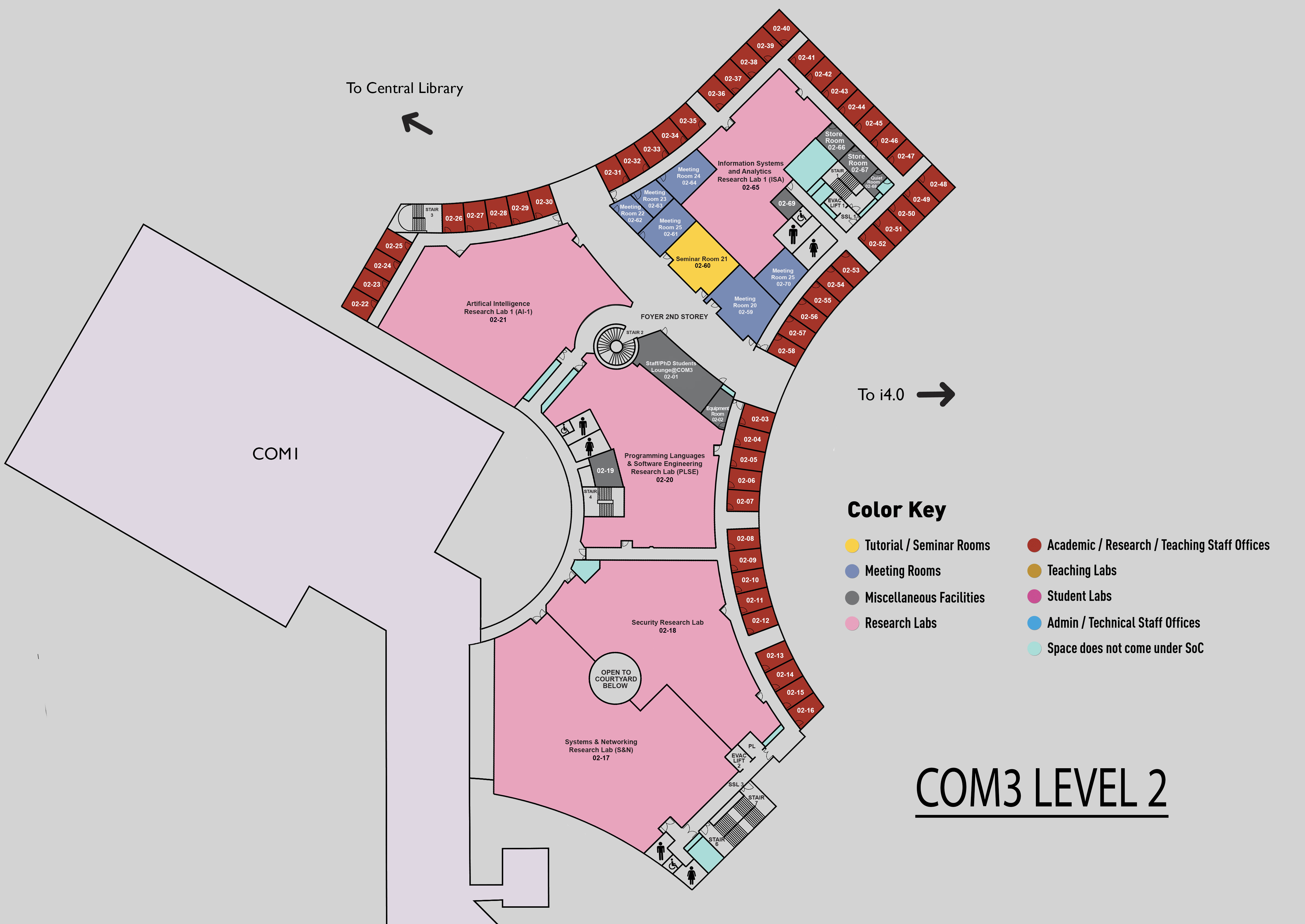

COM3 Level 2

SR21, COM3 02-60

Abstract:

Privacy is a central concern in data analysis and machine learning. As a strong information-theoretic framework, differential privacy (DP) has become the state-of-the-art definition for protecting individual privacy. The classical applications and research of DP have focused on the centralized setting, where a trusted curator has access to the sensitive data. In practice, sensitive data is usually distributed among multiple parties who do not trust each other, which makes it more difficult to protect the privacy of the data while achieving a decent level of utility. This motivates the studies presented in this thesis, DP algorithms under distributed settings.

The first work focuses on data stream publication under the well-studied local differential privacy framework, where no party trusts any party. Instead of injecting independent perturbations into all data items of the stream held by a single party, we propose to inject correlated Gaussian noises into the adjacent data items of a data stream, which are assumed to be close (or enforced to be). Our mechanism, named CGM, carefully exploits the closeness constraint and provably achieves much better privacy-utility trade-offs.

The second work focuses on the problem of federated learning under distributed differential privacy, where parties utilize some cryptography protocols to collaboratively enhance the model utility of local DP. We propose a generic perturbation mechanism run on the party side, called the Skellam Mixture Mechanism (SMM). We prove that our mechanism, when combined with SecAgg, achieves comparable performance as the Gaussian noise mechanism of central DP, under the R\'enyi DP framework. On benchmark applications such as mean estimation and stochastic gradient descent, SMM also outperforms existing works in terms of the utility-privacy-communication trade-off.

Finally, we extend the distributed DP framework, which was originally studied for horizontally partitioned data, to vertically partitioned databases. We point out that most existing DP solutions for vertically partitioned data either rely on unrealistic threat models or are application-dependent and do not generalize. Consequently, practitioners are left with rather basic approaches with suboptimal model utility. To fill this gap, we propose two solutions called the Skellam Scaling Mechanism (SSM) and the Skellam Replication Mechanism (SRM). We formally prove the correctness of both solutions, and provide a rigorous analysis and experimental results showing that they are able to match the privacy-utility trade-offs in the centralized setting.