Privacy Preservation in Android Ecosystem

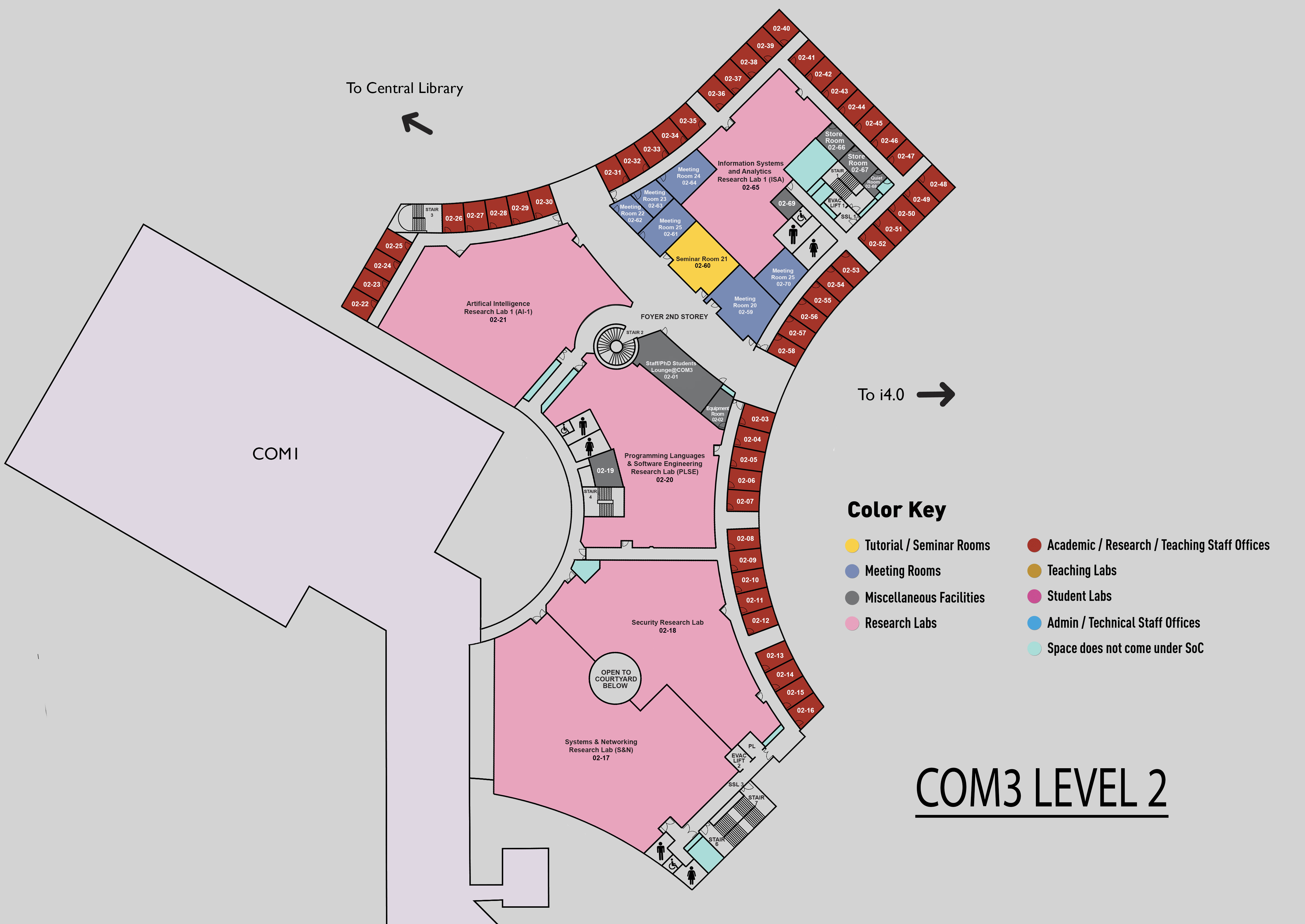

COM3 Level 2

SR21, COM3 02-60

Abstract:

Nowadays, people rely heavily on their personal mobile devices in their daily lives. These devices offer a wide range of functionalities that significantly enhance users’ productivity. However, this convenience comes at the cost of a substantial amount of personal data being accessed and used for analytics and advertising. User privacy protection has been a long-standing concern throughout the evolution of mobile devices. In particular, the introduction of data regulations around the world, such as the European Union’s General Data Protection Regulation (GDPR), has brought privacy preservation to the forefront of public attention like never before. Whether our mobile devices adequately safeguard our privacy remains an open question in the research community.

In this thesis, we focus on the Android ecosystem and study the landscape of privacy preservation on Android smartphones at three levels: the operating system (OS) level, the software development kit (SDK) level, and the application level. For each level, we investigate whether users’ privacy is comprehensively protected, share our insights into the status quo of privacy preservation, and propose our solution to strengthen existing privacy protection mechanisms. First, we investigate user privacy protection at the OS level; more specifically, how the OS protects users’ unresettable identifiers (UUIs) from illegal access by third-party apps. We conduct a systematic study of the effectiveness of UUI safeguards on Android smartphones, covering both Android Open Source Project (AOSP) builds and Original Equipment Manufacturer (OEM) models. To facilitate this large-scale study, we propose a set of analysis techniques that discover and assess UUI access channels. Our approach features a hybrid analysis that combines static program analysis of the Android OS with forensic analysis of OS images to uncover access channels that may open attack opportunities. Our study reveals that UUI mishandling is pervasive: we found 51 unique vulnerabilities, 8 of which are listed in the CVE database.

Second, we study privacy protection at the SDK level, aiming to gain insights into the landscape of privacy protection by Android third-party SDKs. Our privacy compliance assessment covers 158 SDKs from two release platforms. Our analysis leverages a large language model to interpret the SDKs’ privacy policies and adopts static taint analysis to scrutinize their data handling practices. As a result, we identify 338 cases of privacy exposure among the collected SDKs, and reveal that approximately 37% of the examined SDKs over-collect users’ private data and 88% over-claim their access to sensitive data, largely due to a lack of proper development guidelines and inadequate management by the release platforms. In addition, we conduct a longitudinal analysis to track trends in the data collection behaviours of these SDKs. Our results reveal a worrying trend: no significant improvement is observed in excessive data collection and sharing by these SDKs, even after the enactment of the GDPR. In light of our findings from the first two studies, we propose recommendations and mitigation strategies for manufacturers, developers, and authorities to curb potential privacy leakage.
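The two non-compliance categories above can be made concrete with a small set comparison. The sketch below is illustrative only and is not the thesis's analysis pipeline: it assumes each SDK has already been reduced to two sets of data-type labels, those its privacy policy declares (standing in for what a language model might extract) and those static taint analysis observes flowing to a sink. The names `classify_compliance`, `share`, and the data-type labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComplianceReport:
    # Data types accessed in code but never declared in the policy.
    over_collected: frozenset[str]
    # Data types declared in the policy but never observed in code.
    over_claimed: frozenset[str]


def classify_compliance(declared: set[str], observed: set[str]) -> ComplianceReport:
    """Compare policy-declared data types with taint-analysis observations.

    Hypothetical helper: `declared` stands in for the data types extracted
    from an SDK's privacy policy, `observed` for the data types that static
    taint analysis finds flowing from a source to a sink.
    """
    return ComplianceReport(
        over_collected=frozenset(observed - declared),
        over_claimed=frozenset(declared - observed),
    )


def share(reports: list[ComplianceReport], attr: str) -> float:
    """Fraction of SDKs whose report has a non-empty `attr` set."""
    if not reports:
        return 0.0
    return sum(1 for r in reports if getattr(r, attr)) / len(reports)


if __name__ == "__main__":
    sdk_a = classify_compliance(
        declared={"device_id", "location"},
        observed={"device_id", "android_id", "installed_apps"},
    )
    sdk_b = classify_compliance(declared={"device_id"}, observed={"device_id"})
    print(sorted(sdk_a.over_collected))  # ['android_id', 'installed_apps']
    print(sorted(sdk_a.over_claimed))    # ['location']
    print(share([sdk_a, sdk_b], "over_collected"))  # 0.5
```

Treating the two directions of the set difference separately mirrors the distinction drawn above: an SDK can over-collect, over-claim, both, or neither, so the two percentages need not sum to anything in particular.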

Finally, we move our focus to the application level. Since machine learning (ML) is one of the most popular data-hungry applications today, we focus on federated learning (FL). FL is an ML paradigm that enables multiple lightweight devices to collaborate on complex learning tasks, with many promising use cases on mobile devices such as personalized input methods and healthcare services. Although it offers a novel way to protect the confidentiality of users’ own datasets, it is vulnerable to Byzantine adversaries. Existing mitigation strategies either require users to partially share their datasets or face practical challenges, such as needing to know the exact number of malicious participants. We therefore study Byzantine-resilient FL without sharing participants’ private data and propose FLAP, a post-aggregation model pruning technique that enhances the Byzantine robustness of FL. FLAP aims to disable the malicious and dormant components of the learned models. Our empirical evaluation demonstrates the effectiveness of FLAP in various settings: it reduces the error rate by up to 10.2% against state-of-the-art adversarial models, and it increases the average accuracy by up to 22.1% under different adversarial settings, mitigating adversarial impacts while preserving learning fidelity.
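To illustrate where a post-aggregation pruning step sits in an FL round, the sketch below aggregates client weights with sample-size-weighted FedAvg and then zeroes the hidden units that remain least active on a small clean dataset. This is a generic activation-based pruning example, not FLAP's actual pruning criterion. The single-layer weight layout, the assumption that the server holds a few clean samples, and the names `fed_avg`, `mean_relu_activations`, and `prune_dormant_units` are all hypothetical.

```python
def fed_avg(client_weights: list[list[list[float]]], client_sizes: list[int]) -> list[list[float]]:
    """FedAvg: average each client's weight matrix, weighted by local dataset size.

    Each matrix has one row per hidden unit (that unit's incoming weights).
    """
    total = sum(client_sizes)
    rows, cols = len(client_weights[0]), len(client_weights[0][0])
    return [
        [
            sum(w[i][j] * n for w, n in zip(client_weights, client_sizes)) / total
            for j in range(cols)
        ]
        for i in range(rows)
    ]


def mean_relu_activations(weights: list[list[float]], samples: list[list[float]]) -> list[float]:
    """Mean ReLU activation of each hidden unit (row of `weights`) over `samples`."""
    acts = []
    for row in weights:
        total = sum(max(0.0, sum(w * x for w, x in zip(row, s))) for s in samples)
        acts.append(total / len(samples))
    return acts


def prune_dormant_units(
    weights: list[list[float]], samples: list[list[float]], num_to_prune: int
) -> tuple[list[list[float]], set[int]]:
    """Zero the incoming weights of the `num_to_prune` least active hidden units.

    Assumption: `samples` is a small clean dataset held by the server, so no
    participant has to share private data for this step.
    """
    acts = mean_relu_activations(weights, samples)
    pruned = set(sorted(range(len(weights)), key=lambda i: acts[i])[:num_to_prune])
    new_weights = [
        [0.0] * len(row) if i in pruned else list(row)
        for i, row in enumerate(weights)
    ]
    return new_weights, pruned


if __name__ == "__main__":
    client1 = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    client2 = [[3.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    global_w = fed_avg([client1, client2], client_sizes=[1, 3])
    print(global_w)  # [[2.5, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    clean = [[1.0, 1.0], [0.5, 2.0]]
    pruned_w, idx = prune_dormant_units(global_w, clean, num_to_prune=1)
    print(idx)       # {2}
    print(pruned_w)  # [[2.5, 0.0], [0.0, 1.0], [0.0, 0.0]]
```

The intuition shared by activation-based pruning defences is that units which stay dormant on clean inputs contribute little to benign accuracy, so disabling them after aggregation can remove behaviour injected by adversarial updates at limited cost to fidelity. How FLAP actually selects components to prune is described in the thesis body, not here.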