Towards Intelligent Understanding of Table-Text Documents

COM3 Level 2

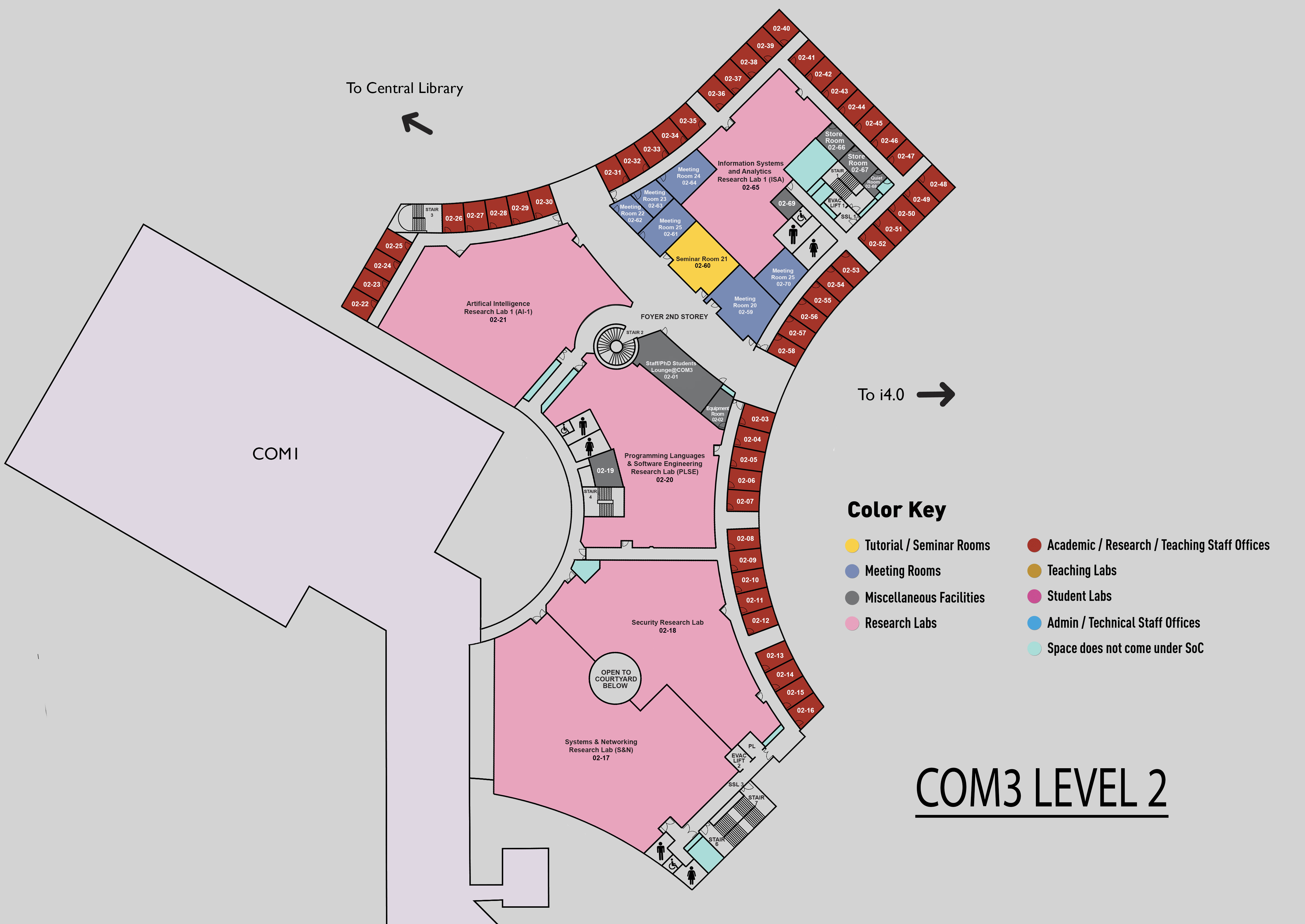

SR21, COM3 02-60

Abstract:

Table-text documents that contain both tabular and textual data are quite pervasive in the real world, such as SEC filings, academic papers and medical reports, in which the table and the text are often semantically related or complementary to each other. To comprehend and analyze these documents with artificial intelligence (AI) has been a problem of great practical significance, yet is largely under-explored. In this thesis, we target at enabling machines to read and comprehend such hybrid data, and develop a series of question answering (QA) models that are capable of answering various questions based on these hybrid data. One key to solving the problem is to develop discrete reasoning capability of a model, as our targeted data often contain plentiful numbers. The model needs to extract values from different parts of the document and then conduct reasoning with the obtained values to derive the answer. This thesis features a series of four works, where two benchmark datasets are built to facilitate the research on this topic and four distinct models are developed to consistently lift the comprehension capability over the hybrid tabular and textual data.

First, consider that there is no available dataset that focuses on the intelligent understanding of real-world hybrid tabular and textual data, the first tabular and textual QA dataset named TAT-QA is built. In TAT-QA, each table and its associated paragraphs are sampled from financial reports and the QA pairs are generated by expert annotators in the finance domain. Based on TAT-QA, we propose a novel TagOp model as a strong baseline method. Taking as input the given question, table and associated paragraphs, TagOp first applies sequence tagging to extract relevant cells from the table and relevant spans from the text as the evidence. Then it applies symbolic reasoning over the evidence with a set of aggregation operators to arrive at the final answer. As the initial pioneering work on intelligent understanding of real-world hybrid tabular and textual data, TAT-QA primarily focuses on well-structured tables and manually selected paragraphs from the original documents (i.e., financial reports), where the problem is greatly simplified.

Second, the TAT-QA dataset is extended to a TAT-DQA dataset, which is a Document Visual Question Answering (VQA) dataset and aims to answer questions over the original visually-rich table-text documents. This setting is closer to the real-world case and much more challenging. To address this challenge, we develop a novel Document VQA model named MHST. It first adopts a “Multi-Head” classifier to predict the answer type, based on which different strategies are applied to infer the final answer, i.e., extraction or reasoning. It is worth noting that MHST achieves SOTA results on TAT-QA and serves as a strong baseline on TAT-DQA.

Third, we seek to further lift the comprehension capabilities of the QA model over TAT-DQA by exploiting the element-level semantics in the problem context with graph structures. In particular, given a visually-rich table-text document and its relevant question, four types of elements are identified: Question, Block, Quantity, and Date. A Doc2SoarGraph model is developed to model the differences and correlations of the four types of elements with semantic-oriented hierarchy graph structures, taking each element as one node. Then, the most question-relevant nodes are selected from the graph and different reasoning strategies are applied over the selected nodes to derive the final answer based on the answer type.

Fourth, we harness the strong multi-step reasoning capabilities of large language models (LLMs) like GPT-4 to solve the problem. In this work, we first abstract a Step-wise Pipeline for tabular and textual QA challenge, which consists of three key steps, including Extractor, Reasoner and Executor. We initially design an instruction to validate the pipeline on GPT-4, demonstrating promising results. However, utilizing an online LLM like GPT-4 incurs various challenges, such as high cost, latency, and data security risks. We are thus motivated to specialize smaller LLMs in this task. Particularly, we develop a TAT-LLM model by fine-tuning LLaMA 2 with the training data generated automatically from existing expert-annotated table-text QA datasets following the Step-wise Pipeline. We conduct experiments on three table-text QA datasets and the results show that our TAT-LLM model can outperform all compared models, including the previous best fine-tuned models and very large-scale LLMs like GPT-4.

In summary, this thesis has initialized and contributed to the research towards the intelligent understanding of real-world table-text documents through addressing question answering tasks. We contribute two expert-annotated table-text QA datasets, namely TAT-QA and TAT-DQA. We make them publicly available, and host leaderboards and a competition to encourage further research in this area. Based on the constructed datasets, we propose four novel models to address the challenges, which demonstrate remarkable effectiveness in understanding hybrid tabular and textual data in extensive experiments. Since our initial research in this area, a significant number of studies have been conducted following our series of work, resulting in the development of more than 20 unique models for tabular and textual QA. As of today, our publications have received over 180 citations.