Combining Planning and Learning to Improve Decision Making

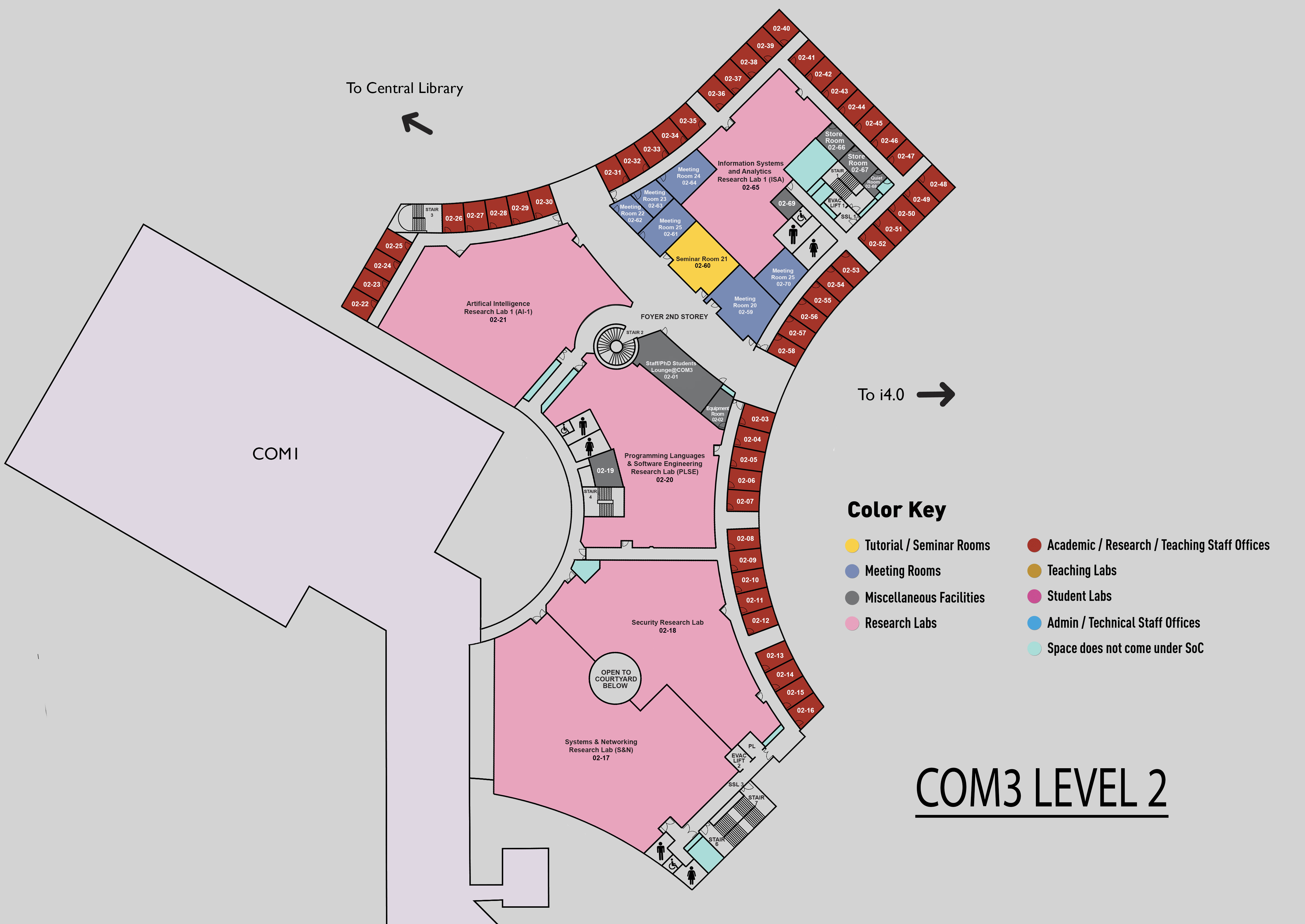

COM3 Level 2

SR21, COM3 02-60

Abstract:

In sequential decision-making problems, the goal is to develop agents that interact with environments and maximise cumulative rewards. Popular online planning algorithms, like Monte-Carlo Tree Search, can handle large-scale decision making problems, but are limited by their reliance on accurate world models and reduced effectiveness under constrained online time or search budgets. Deep Reinforcement Learning (DRL) offers a potential solution by approximating value or policy functions directly without relying on world dynamics. Despite this, reinforcement learning's sample inefficiency and the need for extensive environmental interactions limit its wider applicability.

This work presents novel strategies addressing two specific scenarios. The first involves situations where the agent has access to an accurate world model but is restricted by a limited online computation budget. Conventional tree-based online search algorithms work reasonably well in practice but fail to effectively utilise the information gathered from similar states. Conversely, online learning can interpolate information among similar states; Policy Gradient Search is a practical algorithm based on this process, however, it lacks an explicit exploration mechanism. These limitations affect the performance of the agent, especially under limited online computation budget scenario. To address these concerns, we introduce Exploratory Policy Gradient Search (ExPoSe), an efficient and effective online search algorithm that leverages gradient and tree-based online search methods. ExPoSe enables efficient information interpolation across the state space by directly updating the search policy parameters. Further, it maintains a tree structure, which helps in incorporating a robust exploration mechanism during the online search process and exploiting subtree information at each node in the search tree effectively.

The second scenario addresses situations where the agent does not have access to an accurate world model and must learn its policy from a limited offline collection of demonstrations. In these situations, deep neural network based policies often underperform due to their sample inefficiency and poor generalisation capabilities. Alternatively, we can learn a world model from the limited data and utilise it with online search. However, performance deteriorates due to compounding errors caused by inaccuracies in the learnt world model. In response to these challenges, we propose Tree Search Network (TSN), a novel neural network design that integrates the algorithmic inductive bias of a best-first tree search algorithm into the network architecture. Additionally, it utilises a learnt world model to dynamically construct a computation graph by following a best-first search algorithm. It jointly optimises the world model with the search algorithm, enabling learning of a robust world model that is helpful in the search process. Furthermore, TSN applies a self-supervised consistency loss to the world models to bolster overall consistency throughout the search process.

However, the loss function in TSN is discontinuous in the network's parameter space because TSN is an approximation of a full tree search and may construct different search trees for small parameter variations, affecting the continuity of its output and posing challenges for gradient-based optimisation. To address this issue, we present Differentiable Tree Search Network (D-TSN), an evolution of TSN that resolves its continuity issues. D-TSN expands a search tree for a set number of steps before backing up the expanded nodes, outputting the Q-values. The unique feature in D-TSN is its stochastic tree expansion policy that creates a distribution over potential leaf nodes for expansion using the learned world model. This approach enables optimisation of the expected loss function which is continuous in the network's parameter space. However, the resulting loss function has high variance that we address by framing tree expansion as another decision-making problem. We apply variance reduction techniques from policy gradient literature and reformulate the loss function with lower variance.

This work makes significant contributions to deterministic decision-making problems by introducing strategies that leverage the benefits of both planning and learning paradigms. The proposed methods offer fresh perspectives for policy learning and online search, enhancing the performance of intelligent agents in complex, real-world scenarios.