Rigorous Security Analysis for Machine Learning Systems

Dr Kuldeep Singh Meel, Associate Professor, School of Computing

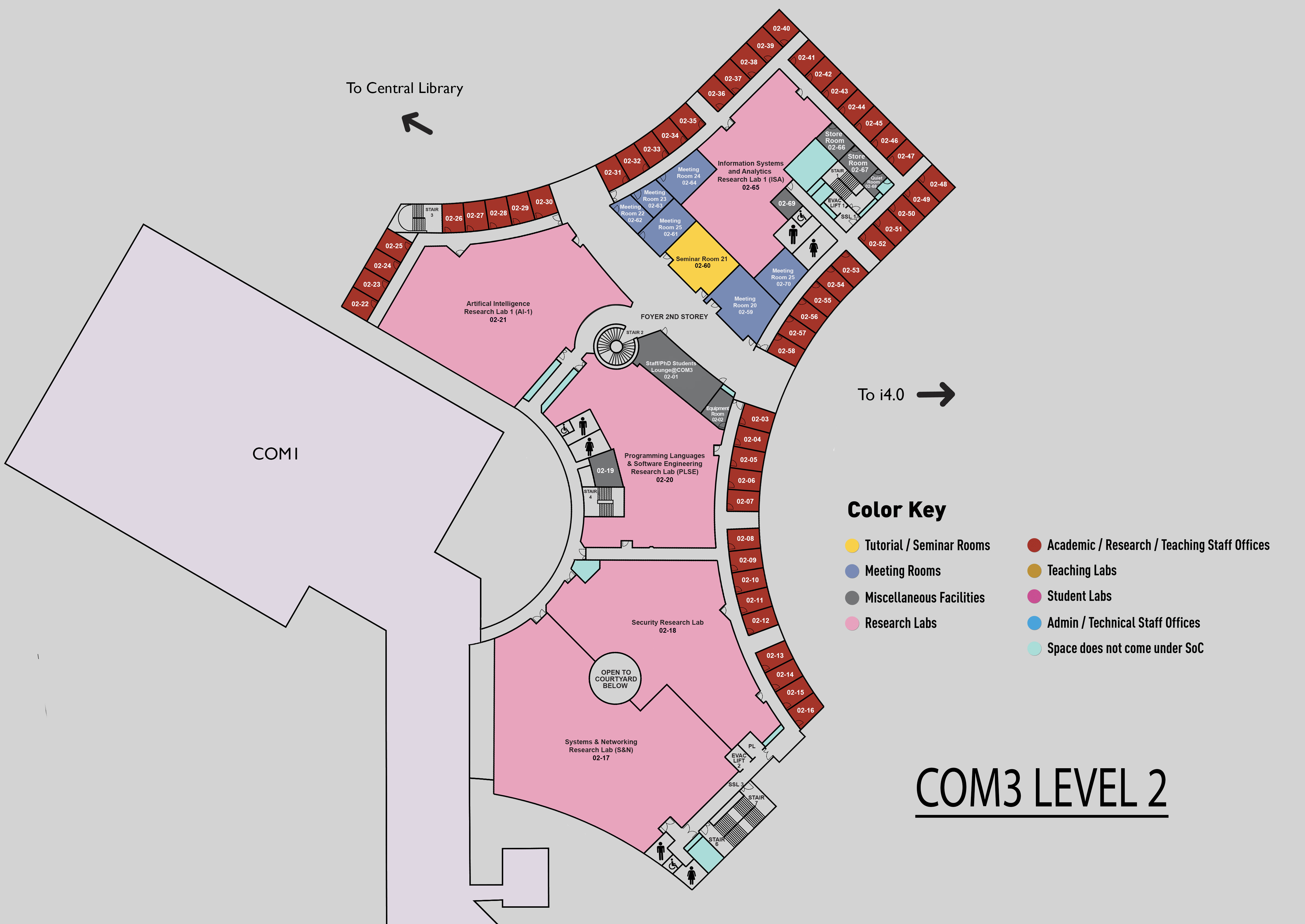

COM3 Level 2

SR21, COM3 02-60

Abstract:

Machine learning security is an emerging area with many open questions lacking systematic analysis. In this thesis, we identify three new algorithmic tools to address this gap: (1) algebraic proofs; (2) causal reasoning; and (3) sound statistical verification.

Algebraic proofs provide the first conceptual mechanism to resolve intellectual property disputes over training data. We show that stochastic gradient descent, the de facto training procedure for modern neural networks, is a collision-resistant computation under precise definitions. We formalize the collision-resistance property as a decisional convergence query. These results open up connections to lattices, mathematical tools currently used in cryptography.

We introduce causal queries that enable studying the interaction between different variables that arise naturally as part of the training process and a given adversary. We propose causal models to investigate the relationship between generalization-related variables and empirical privacy attacks and defenses, a relationship that prior work has hypothesized.

Finally, we devise statistical verification procedures with ‘probably approximately correct’ (PAC)-style soundness guarantees for two flavors of counting queries. We show that counting queries enable checkable properties of robustness, fairness and susceptibility to trojan attacks.
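
To give a flavor of what a PAC-style guarantee for a counting query can look like, the following is a minimal sketch and not the procedure developed in the thesis. It assumes a plain Hoeffding-bound sampling scheme: draw enough independent inputs that the empirical fraction satisfying a property is within eps of the true fraction with probability at least 1 - delta. The names `hoeffding_sample_size`, `estimate_fraction` and `toy_model` are hypothetical and chosen only for illustration.

```python
import math
import random


def hoeffding_sample_size(eps, delta):
    """Number of i.i.d. samples so that, by Hoeffding's inequality, the
    empirical fraction is within eps of the true fraction with
    probability at least 1 - delta."""
    return math.ceil(math.log(2.0 / delta) / (2.0 * eps ** 2))


def estimate_fraction(sample_input, has_property, eps, delta, rng):
    """Estimate the fraction of inputs satisfying has_property.

    sample_input(rng) draws one input from the input distribution;
    has_property(x) returns True or False for that input.
    """
    n = hoeffding_sample_size(eps, delta)
    hits = sum(1 for _ in range(n) if has_property(sample_input(rng)))
    return hits / n, n


# Hypothetical toy "model" (an assumption for illustration only):
# it predicts class 1 exactly when the input is at least 0.3.
def toy_model(x):
    return 1 if x >= 0.3 else 0


rng = random.Random(0)
estimate, n = estimate_fraction(
    sample_input=lambda r: r.random(),         # uniform inputs on [0, 1)
    has_property=lambda x: toy_model(x) == 1,  # the counting query
    eps=0.05,
    delta=0.01,
    rng=rng,
)
print(f"samples used: {n}, estimated fraction: {estimate:.3f}")
```

In this toy setting the true fraction is 0.7, so the estimate should fall within 0.05 of that value except with probability at most 0.01. A robustness, fairness or trojan check would replace `has_property` with the corresponding predicate on the model's behavior.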