Analytic Continual Learning: Revolutionizing Continual Learning with a Fast and Non-forgetting Closed-Form Solution

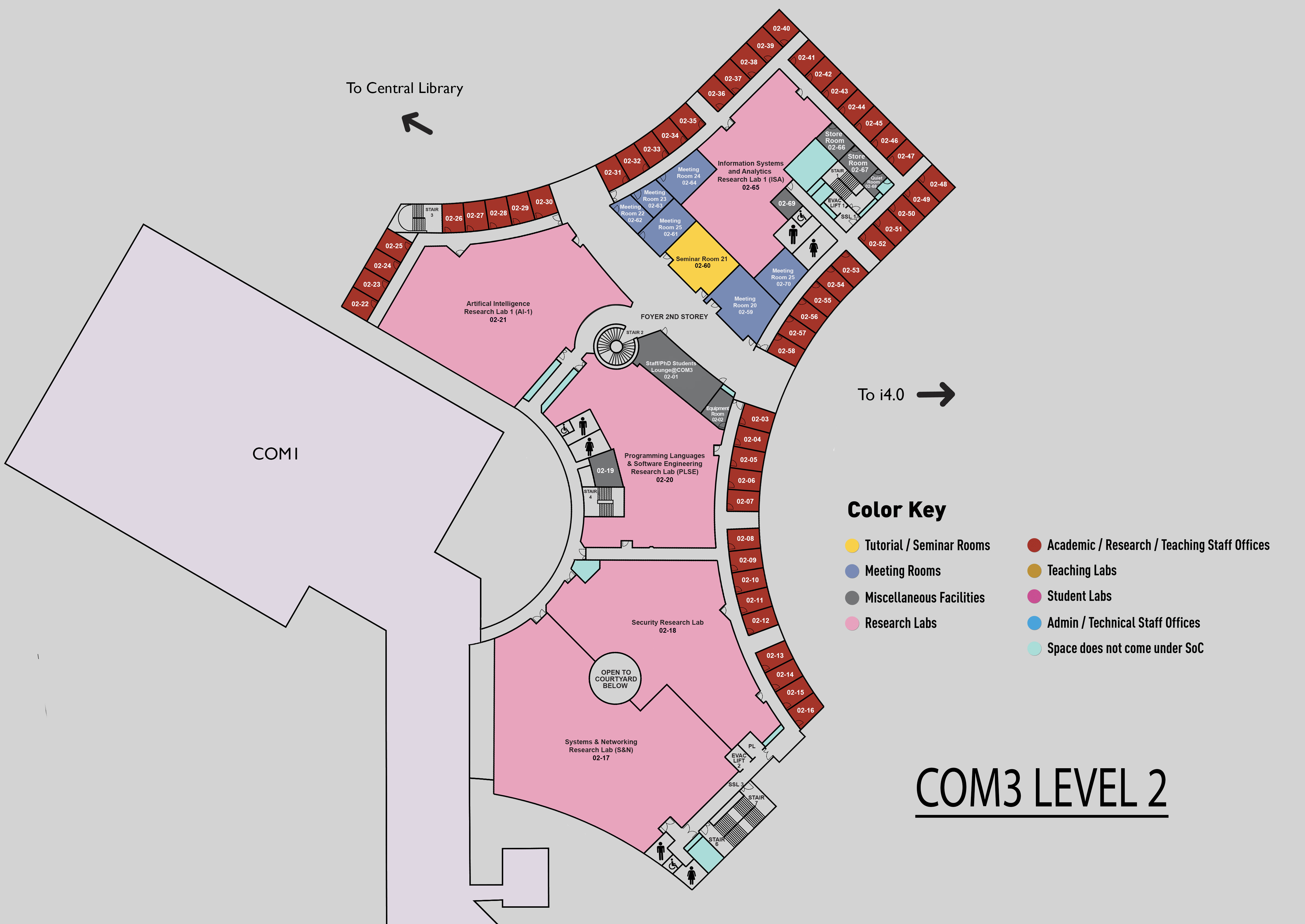

COM3 Level 2

MR21, COM3 02-61

Abstract: Continual learning allows models to acquire knowledge incrementally over successive phases. This learning paradigm resembles the human learning process and contributes to the accumulation of machine intelligence. However, continual learning suffers from two issues: 1) catastrophic forgetting, where models rapidly lose previously learned knowledge when acquiring new information, and 2) privacy invasion, where storing data for replay during continual learning is not allowed in some scenarios. In this talk, we introduce a new branch of continual learning, namely Analytic Continual Learning. This branch extends recursive least squares to class-incremental learning and achieves results equivalent to those of joint training (training continually == training jointly!). Apart from addressing the catastrophic forgetting and data privacy problems, it allows a one-epoch training paradigm and can achieve a 10-100 times speedup in continual learning training.
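To make the "training continually == training jointly" claim concrete, here is a minimal sketch of a generic recursive least-squares (ridge regression) classifier updated phase by phase. It is an illustration under stated assumptions, not the speaker's implementation: features are assumed to come from a fixed (frozen) extractor and are simulated here with random vectors, labels are one-hot over all classes, and the names `joint_ridge`, `rls_init`, `rls_update`, `make_phases`, and the regularization value `gamma` are hypothetical.

```python
import numpy as np

def joint_ridge(X, Y, gamma):
    """Closed-form ridge solution trained on all data at once."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + gamma * np.eye(d), X.T @ Y)

def rls_init(d, num_classes, gamma):
    """Start from zero weights and R = (gamma * I)^-1."""
    return np.zeros((d, num_classes)), np.eye(d) / gamma

def rls_update(W, R, X, Y):
    """Absorb one phase of data (X, Y) without revisiting earlier phases.

    R tracks the inverse of the regularized feature autocorrelation matrix
    and is updated with the Woodbury identity.
    """
    n = X.shape[0]
    K = np.linalg.solve(np.eye(n) + X @ R @ X.T, X @ R)
    R_new = R - R @ X.T @ K
    W_new = W + R_new @ X.T @ (Y - X @ W)
    return W_new, R_new

def make_phases(num_phases=3, classes_per_phase=2, n=50, d=16, seed=0):
    """Simulated class-incremental data: each phase brings new classes only."""
    rng = np.random.default_rng(seed)
    num_classes = num_phases * classes_per_phase
    phases = []
    for p in range(num_phases):
        X = rng.normal(size=(n, d))  # stand-in for frozen-backbone features
        labels = rng.integers(0, classes_per_phase, size=n) + p * classes_per_phase
        Y = np.eye(num_classes)[labels]  # one-hot targets over all classes
        phases.append((X, Y))
    return phases, num_classes

if __name__ == "__main__":
    gamma = 1.0  # assumed regularization strength
    phases, num_classes = make_phases()
    W, R = rls_init(phases[0][0].shape[1], num_classes, gamma)
    for X, Y in phases:  # one pass per phase, no stored exemplars
        W, R = rls_update(W, R, X, Y)
    X_all = np.vstack([X for X, _ in phases])
    Y_all = np.vstack([Y for _, Y in phases])
    gap = np.abs(W - joint_ridge(X_all, Y_all, gamma)).max()
    print("max |W_recursive - W_joint| =", gap)
```

In this sketch the recursively updated weights match the jointly trained ridge solution up to floating-point error, and each phase's data is used once and then discarded, which is the property the abstract attributes to the analytic approach.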

Bio: Huiping Zhuang received the B.S. degree in Optical Information Science and Technology and the M.Eng. degree in Control Theory and Control Engineering from the South China University of Technology, Guangzhou, China, in 2014 and 2017, respectively. He obtained the Ph.D. degree from the School of Electrical and Electronic Engineering, Nanyang Technological University, Singapore, in 2021. He is now an associate professor in the Shien-Ming Wu School, South China University of Technology. He has served as a special issue editor for the Journal of the Franklin Institute (JCR Q1) and as co-chair of WSPML 2023. He has published papers in ICML, NeurIPS, CVPR, AAAI, IEEE Trans NNLS, IEEE Trans SMC-S, IEEE Trans CSVT, IEEE Trans MM, etc. His current research interests cover deep learning, continual learning, and large models.