Non-Parametric 3D Hand Shape Reconstruction From Monocular Image

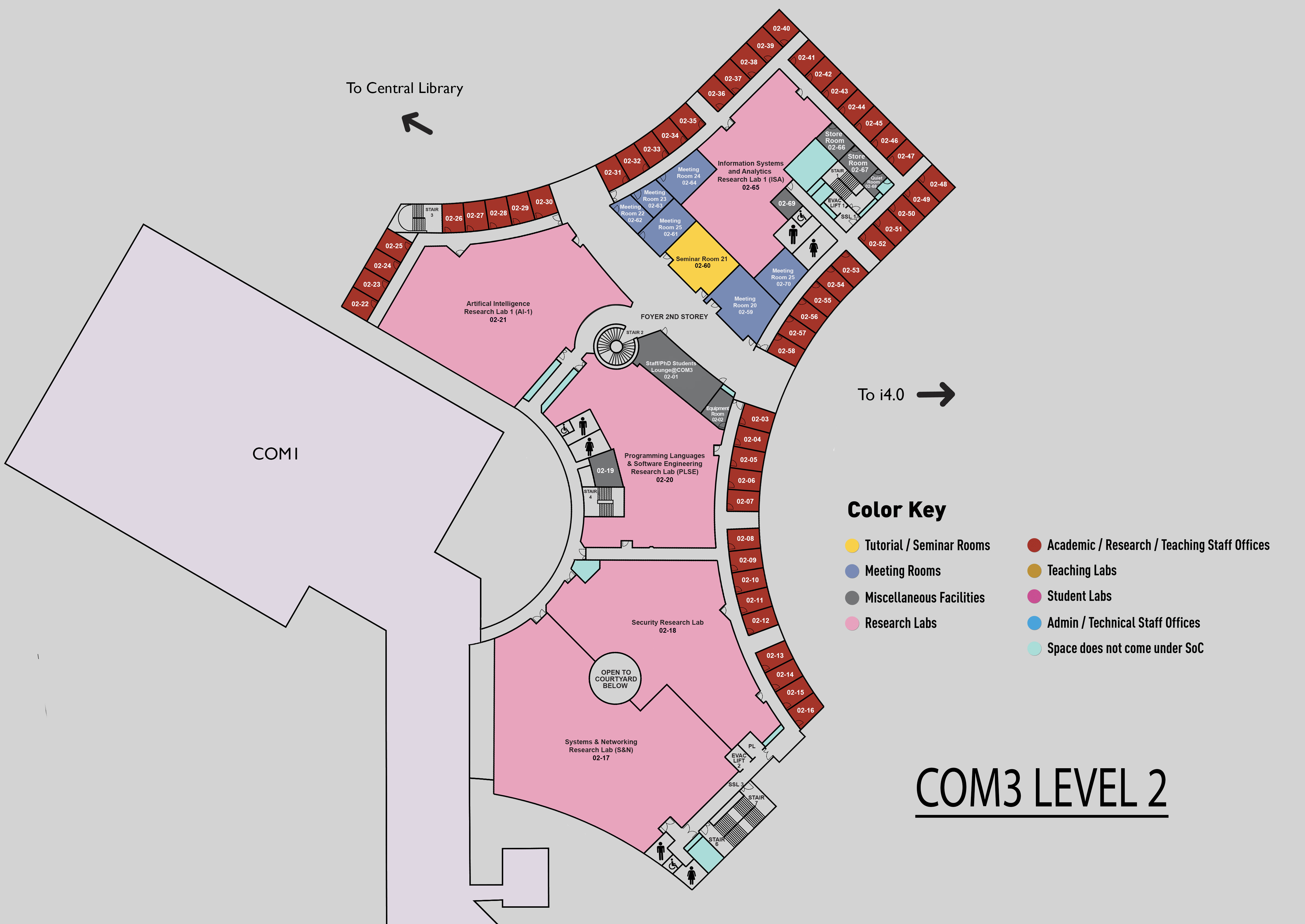

COM3 Level 2

SR21, COM3 02-60

Abstract:

3D hand shape estimation from monocular RGB input recovers the locations of the hand surface in 3D space. This area of computer vision is important because of its many applications, such as human-computer interaction and augmented and virtual reality. Recent advances in deep learning have led to significant progress in hand shape estimation. Most existing works on 3D hand pose and shape estimation from a single monocular image rely on a parametric hand model, MANO. However, the parametric MANO model inherently differs from the real hand surface and requires a large number of annotations. We therefore explore non-parametric methods to mitigate these problems and reduce the reliance on data annotations. In this thesis, we explore several 3D representations, namely point clouds and UV maps, and integrate parametric and non-parametric models for 3D hand shape reconstruction.

We first propose a non-parametric approach that uses a point cloud representation and a unique point cloud reconstruction technique to recover the full hand shape from RGB images. Our approach learns a combined local and global representation and outperforms state-of-the-art approaches for 3D hand pose estimation while producing high-quality point clouds. Unlike existing methods that map visual features to the parameters of a parametric model, our approach directly reconstructs the hand surface without needing a hand model.

Beyond hand shape estimation lies the task of interacting with objects, which involves predicting 3D hand shape while accounting for realistic hand-object interactions. This task is crucial for human-computer interaction systems. However, accurately estimating 3D hand shapes under heavy object occlusion, while balancing realistic hand-object interaction against precise 3D hand shape, is a significant challenge. To address these issues, we propose a pipeline for 3D hand reconstruction built on a non-parametric hand shape representation, the UV map. Our approach simultaneously estimates UV coordinates, hand texture, and contact maps in UV space, which allows for dense contact modeling and improves reconstruction while remaining efficient.

We also observe that template-based methods have become remarkably accurate. Nonetheless, these approaches depend on pre-defined object mesh models, which makes them unsuitable for reconstructing previously unseen objects. Template-free methods, on the other hand, do not rely on specific object models but tend to produce less realistic object shapes. We therefore introduce a novel category-level 3D hand-object reconstruction framework that incorporates shape priors for previously unseen objects. Specifically, our proposed model takes an initial object template mesh and an RGB input and learns object deformation and pose under the supervision of mask and depth maps.

Previous works on 3D hand shape reconstruction fall into two directions: parametric model-based approaches and non-parametric model-based approaches. While non-parametric approaches such as direct mesh fitting are highly accurate, their reconstructed meshes are often plagued by artifacts and do not resemble plausible hand shapes. Parametric approaches, on the other hand, are based on an abstract PCA space and fall short of non-parametric methods in shape reconstruction accuracy. There is thus a trade-off between the two families of approaches. In this thesis, we address this trade-off by proposing a pipeline that integrates parametric and non-parametric mesh models for accurate and plausible 3D hand reconstruction. We also introduce a VAE correction module that bridges the gap between non-parametric and MANO 3D poses. Additionally, we propose a novel weakly-supervised pipeline that leverages 3D joint labels to train the network to learn 3D shapes.