Deep Learning for Musical Creativity

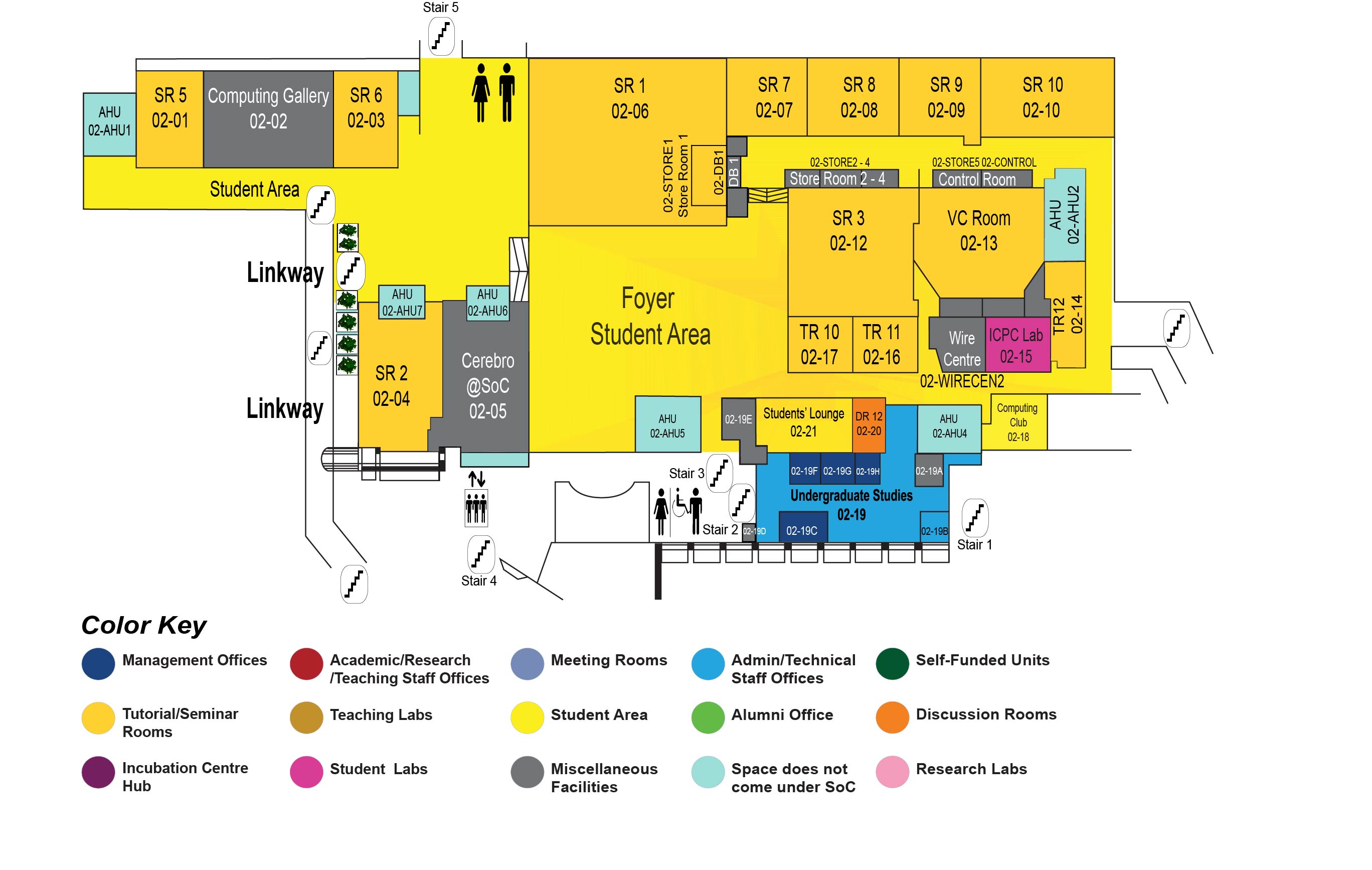

COM1 Level 2

Video Conference Room, COM1-02-13

Abstract: Advances in generative modeling have opened up exciting new possibilities for music making. How can we leverage these models to support human creative processes? First, I’ll illustrate how we can design generative models to better support music composition and performance synthesis. Coconet, the ML model behind the Bach Doodle, supports a nonlinear compositional process through an iterative, block-Gibbs-like generative procedure, while MIDI-DDSP supports intuitive user control in performance synthesis through hierarchical modeling. Second, I’ll propose a common framework, Expressive Communication, for evaluating how advances in both generative models and steering interfaces empower human-AI co-creation, where the goal is to create music that communicates an imagery or mood. Third, I’ll introduce the AI Song Contest and discuss some of the technical, creative, and sociocultural challenges musicians face when incorporating ML-powered tools into their creative workflows.

Looking ahead, I’m excited to co-design with musicians to discover new modes of human-AI collaboration. I’m interested in designing visualizations and interactions that help musicians understand and steer system behavior, as well as algorithms that can learn from their feedback in more organic ways. I aim to build systems that musicians can shape, negotiate with, and jam with in their creative practice.

Biography: Anna Huang is a research lead on the Magenta project at Google DeepMind, spearheading efforts in Generative AI for Musical Creativity. She also holds a Canada CIFAR AI Chair at Mila, the Québec Artificial Intelligence Institute. Her research lies at the intersection of machine learning and human-computer interaction, with the goal of supporting music making and, more generally, the human creative process.

She is the creator of Coconet, the ML model that powered Google’s first AI Doodle, the Bach Doodle. In two days, Coconet harmonized 55 million melodies from users around the world. In 2018, she created Music Transformer, a breakthrough in generating music with long-term structure and the first successful adaptation of the Transformer architecture to music. Her ICLR paper on Music Transformer is currently the most cited paper in music generation. She served first as a judge and then as an organizer of the AI Song Contest over three years. She was a guest editor of TISMIR, ISMIR’s flagship journal, for its special issue on AI and Musical Creativity. This fall, she will join the Massachusetts Institute of Technology (MIT) as faculty, with a shared appointment between Electrical Engineering and Computer Science (EECS) and Music and Theater Arts (MTA).