Forensic Analysis and Defense for Machine Learning Model in Black-boxes

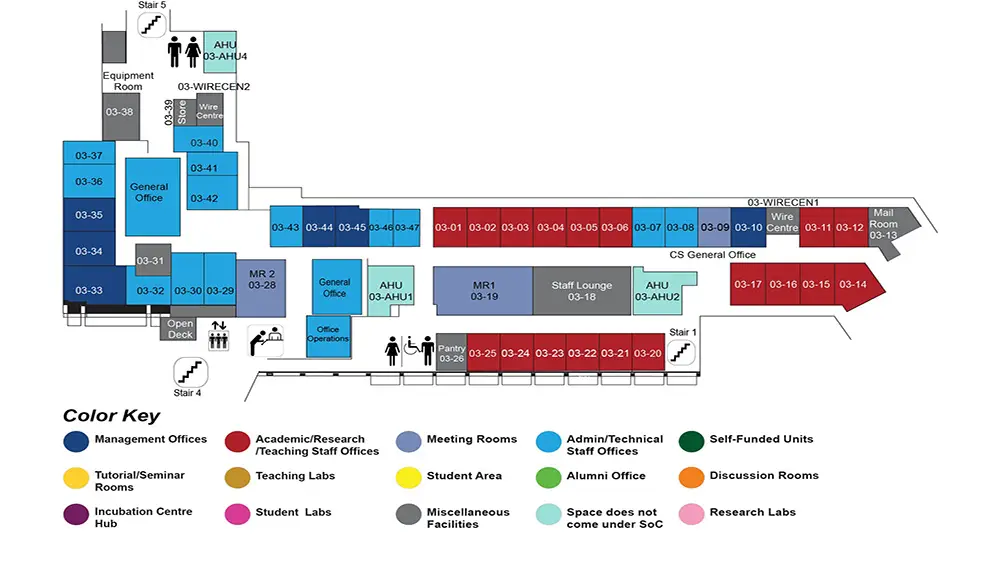

COM1 Level 3

MR1, COM1-03-19

Abstract:

With progress in modern learning theory and advances in general-purpose computing on graphics processing units, machine learning models are now more powerful than ever. Machine learning models, especially deep neural networks, have achieved excellent performance in tasks such as image classification, object detection and natural language processing. As these models are deployed across many domains, security concerns are also rising. This thesis studies two aspects of these concerns: forensic analysis and adversarial defenses.

In the first half of this thesis, we take the perspective of a forensic investigator who wants to investigate suspicious models. Existing methods such as membership inference, model cloning and model inversion can provide useful information about a target model, but they often depend on the answer to a fundamental question: “What is the model’s data domain?” We propose a framework to determine the data domain of an unknown black-box model. This framework fills a gap left by many existing methods and makes them more effective. We also study a related forensic problem and propose a method that extracts the common component shared among a set of black-box target models.

The second half of this thesis studies adversarial defenses. An adversarial attack adds a small perturbation to a clean sample, causing a victim model to misclassify it. Here, we take the perspective of a defender seeking to safeguard against adversarial attacks. Defending against such attacks has proven challenging in general settings, and some researchers believe the fundamental difficulty lies in intriguing properties of the models themselves. Rather than directly countering attacks, we propose the use of "attractors" designed to confuse adversaries and disrupt the attack process, making the generated adversarial samples easy to detect. We further apply the attractor concept to a seller-buyer distribution setting, in which different model copies are distributed to different users to hinder the replication of adversarial samples across copies. Notably, our findings suggest that a high level of robustness against adversarial attacks can be achieved without resolving complex fundamental issues related to model structures or learning theories.