Personalized Visual Information Captioning

Dr Mohan Kankanhalli, Provost's Chair Professor, School of Computing

COM1 Level 3

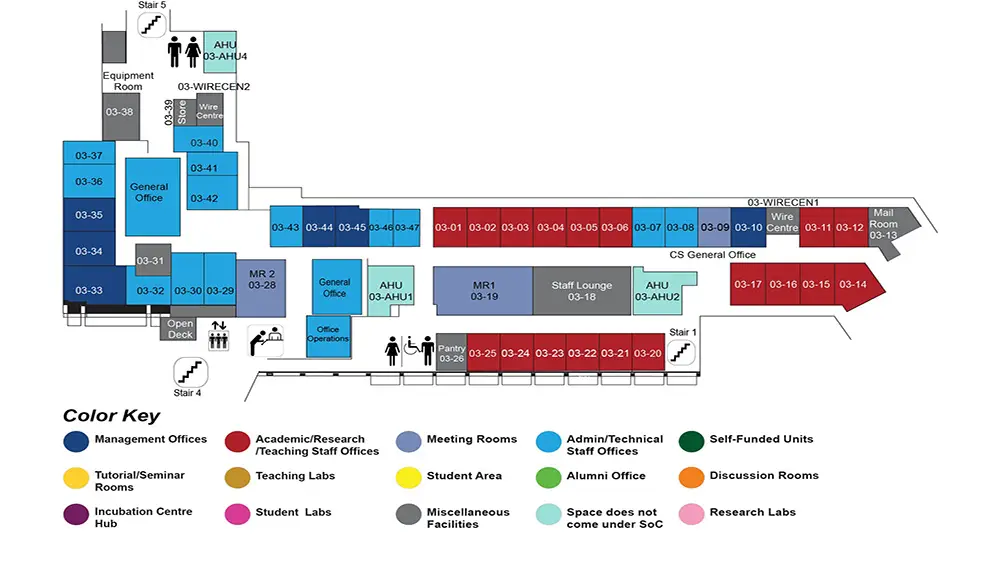

MR1, COM1-03-19

Abstract:

The visual information captioning task, which automatically translates images or videos into natural language descriptions, has drawn increasing attention in the computer vision and multimedia communities. Recently, with the rapid development of deep learning, encoder-decoder frameworks have achieved competitive performance in visual information captioning. However, most models focus on understanding the visual content in an objective and neutral manner, while diversity of expression is often ignored. Imitating human beings to generate diverse and personalized captions can substantially improve the user experience in related applications such as visually intelligent chatbots and visual content search. Therefore, in this thesis, we investigate the problem of personalized visual information captioning. We address personalized image captioning and personalized video captioning using user information and prior knowledge. User information refers to attributes such as age, gender, and prior knowledge level; prior knowledge refers to the named entities (e.g., famous persons, objects, and places) that appear in the image or video. We also study few-shot learning as a way to automatically and efficiently recognize named entities in visual information.

Personalized image captioning aims to imitate human beings by generating captions that describe the image content while reflecting the annotator's personal style. Our study is based on the intuition that different people will generate different captions for the same scene, as their prior knowledge and opinions about the scene may differ. In particular, we first perform a series of human studies to investigate what influences human descriptions of a visual scene. We identify three main factors: a person's knowledge level of the scene, opinion of the scene, and gender. Based on these findings, we propose a novel human-centered algorithm that generates personalized image captions from both human factors and provided prior knowledge.

Personalized video captioning translates video data into natural language descriptions in different personal styles. It is a more challenging task because video data contains not only spatial but also temporal information. The human factors that influence how people caption videos may differ from those for images. We therefore collect the Personalized Video-to-Text (PVTT) dataset. Statistical analyses on PVTT show that annotators' gender, age, induced emotions, and prior knowledge of the videos jointly impact video captioning. Based on the findings of our human study, we design a novel personalized caption generator (PCG) that takes a video clip and the aforementioned four human factors as input and generates personalized captions.

To automatically detect named entities in visual information, we study the few-shot learning (FSL) problem, which aims to learn novel categories from limited samples. We first present a knowledge extraction schema that acquires superclass information from a knowledge graph and computes superclass semantic relations between different categories. We then introduce a novel soft-label supervised contrastive loss that helps extract discriminative superclass features from images, so that these features capture the superclass relations. We propose a novel model architecture that is jointly trained on images and superclass information. The model encodes image features that minimize the cross-entropy loss at the category level, while also extracting superclass features that minimize the soft-label contrastive loss at the superclass level. Experimental results demonstrate that the proposed approach achieves competitive results on different datasets.
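
The abstract does not state the loss formulas, so the following is only a minimal sketch of how a category-level cross-entropy term and a superclass-level soft-label contrastive term might be combined. Everything here is an assumption made for illustration: the function names, the weighting `lam`, the temperature, and in particular the form of the contrastive term, which follows the common supervised contrastive pattern with the binary positive mask replaced by a soft similarity matrix `soft_sim` (imagined as coming from superclass relations in the knowledge graph). It is not the thesis's actual formulation.

```python
import numpy as np

def cross_entropy(logits, labels):
    """Mean cross-entropy; logits is (N, C), labels is (N,) integer class ids."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

def soft_label_contrastive(features, soft_sim, temperature=0.1):
    """Assumed soft-label contrastive loss (requires at least 2 samples).

    features: (N, D) superclass features.
    soft_sim: (N, N) weights in [0, 1]; soft_sim[i, j] says how strongly
              sample j should be pulled toward anchor i (hypothetical input).
    """
    z = features / np.linalg.norm(features, axis=1, keepdims=True)
    n = len(z)
    off_diag = ~np.eye(n, dtype=bool)
    # Pairwise scaled cosine similarities, with self-pairs excluded.
    sim = np.where(off_diag, z @ z.T / temperature, -np.inf)
    sim_max = sim.max(axis=1, keepdims=True)
    log_denom = sim_max + np.log(np.exp(sim - sim_max).sum(axis=1, keepdims=True))
    # Log-probability of picking j given anchor i; zero out the diagonal.
    log_prob = np.where(off_diag, sim - log_denom, 0.0)
    weights = np.where(off_diag, soft_sim, 0.0)
    per_anchor = -(weights * log_prob).sum(axis=1) / np.maximum(weights.sum(axis=1), 1e-12)
    return per_anchor.mean()

def joint_loss(logits, labels, features, soft_sim, lam=1.0):
    """Category-level cross-entropy plus weighted superclass-level contrastive term."""
    return cross_entropy(logits, labels) + lam * soft_label_contrastive(features, soft_sim)
```

Under these assumptions, the contrastive term is small when samples with high `soft_sim` have nearby features and large when they are far apart, which is the behavior the paragraph above attributes to the superclass-level objective.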