Algorithms for Collaborative Machine Learning: Data Sharing and Model Sharing Perspectives

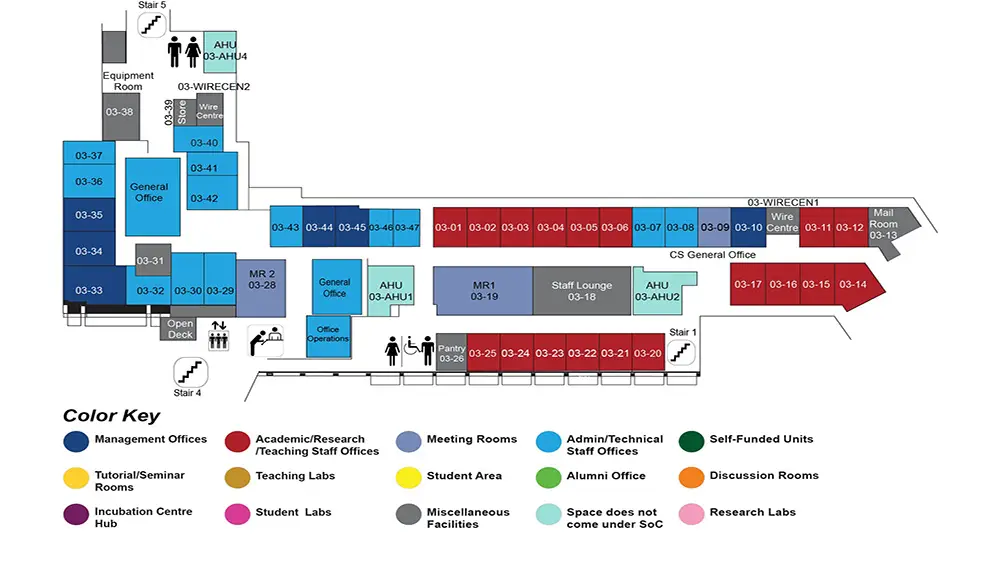

COM1 Level 3

MR1, COM1-03-19

Abstract:

The last decade has witnessed unprecedented advances in machine learning (ML), especially in deep learning. Deep neural networks possess remarkable generalization power, surpassing human-level performance on many tasks. These advances in ML crucially rely on the availability of massive, high-quality datasets. Since more data often leads to better generalization performance, it would be beneficial for multiple parties to aggregate their data and jointly train an ML model. This is known as the collaborative machine learning (CML) framework. Despite its early success, CML is hindered by various social, economic, and legal considerations associated with sharing personal or proprietary data. In particular, the recent commoditization of private data has led to the emergence of personal data ownership, which advocates for data owners to have control over the use of their data and to receive compensation in return.

The first work studies CML in relation to personal data ownership and addresses the challenge of data procurement from self-interested and strategic data owners. Monetary compensation is provided to data owners in exchange for their contributed data. We consider a scenario where data owners cannot fabricate data but may misreport their costs to maximize their profit. Our objective is to develop mechanisms that incentivize truthful reporting of costs while optimizing predictive performance. The proposed mechanisms draw inspiration from economic principles, game theory, and mechanism design to create a mutually beneficial situation where data owners are motivated to share their data in exchange for fair compensation.
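To make the notion of incentivizing truthful cost reports concrete, the following is a minimal sketch of a textbook second-price (Vickrey) procurement auction. It is not the mechanism proposed in the thesis, which also optimizes predictive performance; it is only an assumed, simplified illustration in which a buyer procures data from exactly one owner. The function names `reverse_second_price` and `utility` and the cost values are hypothetical.

```python
def reverse_second_price(reported_costs):
    """Select the owner with the lowest reported cost and pay that owner
    the second-lowest reported cost (ties go to the lowest index)."""
    if len(reported_costs) < 2:
        raise ValueError("need at least two data owners")
    order = sorted(range(len(reported_costs)), key=lambda i: reported_costs[i])
    winner = order[0]
    payment = reported_costs[order[1]]
    return winner, payment


def utility(owner, true_cost, reports):
    """Profit of `owner`: payment minus true cost if selected, else zero."""
    winner, payment = reverse_second_price(reports)
    return payment - true_cost if winner == owner else 0.0


# Hypothetical true costs of three data owners.
true_costs = [3.0, 5.0, 8.0]

# Owner 0 reports truthfully, over-reports, and under-reports in turn.
truthful = utility(0, true_costs[0], [3.0, 5.0, 8.0])
overreport = utility(0, true_costs[0], [6.0, 5.0, 8.0])
underreport = utility(0, true_costs[0], [1.0, 5.0, 8.0])

print(truthful, overreport, underreport)
```

In this toy setting, the payment to the selected owner does not depend on that owner's own report, so misreporting a cost can change whether the owner is selected but can never increase the owner's profit.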

In addition to personal data ownership, growing concerns over privacy and security have hindered different parties from sharing their data. As an alternative, parties may be more willing to share machine learning models that contain distilled, useful information from the data, without revealing the architecture or parameters of their pre-trained "black-box" model. This line of research is known as model fusion (MF), which explores how parties should share pre-trained models and how to collectively learn a single ML model using these shared models. Despite recent advances, there are still important learning scenarios in which existing algorithms cannot be readily applied. We identify such important scenarios and propose a novel MF framework and algorithm for each of them.

The second work investigates model fusion in a black-box and multi-task setting, in which each party's black-box model is pre-trained to solve a different yet related task. To address this multi-task challenge, we develop a new fusion paradigm that represents each expert (i.e., each party's black-box model) as a distribution over a spectrum of predictive prototypes. The prototypes themselves are task-agnostic, while the task-specific information is encoded in the prototype distribution. The task-agnostic prototypes can then be reintegrated to generate a new model that solves a new task encoded with a different prototype distribution. The fusion and generalization performance of the proposed framework is demonstrated empirically on several real-world benchmark datasets.

The third work examines model fusion in the context of personalized learning. Production systems operating on a growing domain of analytic services often require generating warm-start solution models for emerging tasks with limited data. One potential approach to this warm-start challenge is to adopt meta-learning to generate a base model that can be adapted to solve unseen tasks with minimal fine-tuning. This, however, requires the training processes of the solution models for existing tasks to be synchronized, which is not possible if these models were pre-trained separately, at different times, on private data owned by different entities and cannot be synchronously re-trained. To accommodate such scenarios, we develop a new personalized learning framework that synthesizes customized models for unseen tasks via the fusion of independently pre-trained models of related tasks. We establish PAC-Bayes style performance guarantees for the proposed framework and demonstrate its effectiveness on both synthetic and real datasets.

In summary, this thesis contributes to the field of collaborative machine learning from two distinct perspectives. The first perspective is data-centric CML, for which the thesis proposes novel mechanisms that incentivize data sharing among participating parties. The second perspective is model-centric CML, for which a principled collaborative intelligence framework is developed for a group of heterogeneous learning parties. Together, the results and findings of this thesis address contemporary challenges of collaborative machine learning, including data ownership, privacy, and security concerns, and have the potential to facilitate the mainstream adoption of collaborative approaches in machine learning.