Creating More Effective Text-Based Computer-Delivered Health Messaging

Dr Brian Lim Youliang, Associate Professor, School of Computing

COM1 Level 3

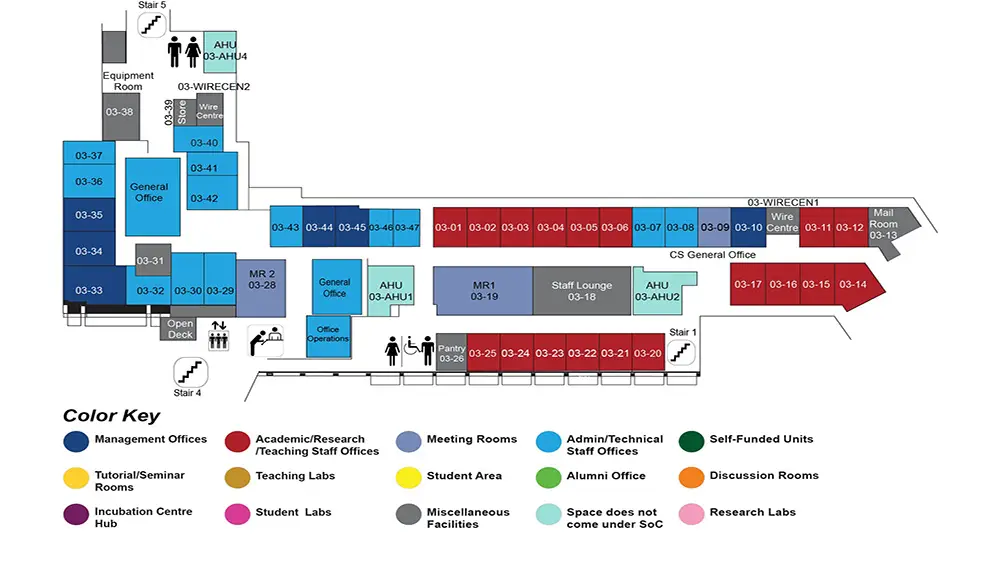

MR1, COM1-03-19

Abstract:

The ubiquity of smartphones has increased the supply of on-demand healthcare. For example, people can share their illness-related experiences with others like themselves, and healthcare experts can offer advice on better treatment and care for remediable, terminal and mental illnesses. Alongside this human-to-human communication, human-to-computer digital health messaging, such as chatbots, is increasingly used. Chatbots can be advantageous because they offer synchronous and anonymous feedback without the need for a human conversational partner. However, human conversation involves many subtleties that a computer agent may not properly exhibit. For example, a conversation calls for an appropriate choice of conversational style, etiquette, politeness strategy and empathic response. Encouragingly, the Computers Are Social Actors (CASA) paradigm posits that people apply the same social norms to computers as they do to people. Building on this, previous studies have applied conversational strategies to computer agents so that they embody more favourable human characteristics. However, a computer agent that fails to do so can provoke negative reactions from users. In this dissertation, we therefore describe a series of studies aimed at making human-to-computer digital health messaging more effective.

In our first study, we used crowdsourcing to write diverse motivational messages that encourage people to exercise. However, simply asking the crowd to write messages is not enough, because people may write similar ideas, leading to duplicated and redundant messages. To combat this, previous work has shown writers example ideas to steer them towards less common ideas. However, these studies often relied on manually labelling and selecting the example ideas. We therefore developed Directed Diversity, a system that uses word embeddings to automatically and scalably select collectively diverse examples to show to message writers. In a series of user studies, we asked people to write messages using examples selected by Directed Diversity, random examples, or no examples. We evaluated the diversity of the messages using diversity metrics drawn from different domains, human evaluations, and expert evaluations, and found that our method led to more diverse messages than the baseline conditions. Additionally, subjective evaluations found that Directed Diversity produced messages that were more informative than, and equally motivating as, those from the baseline conditions.
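To make the selection step more concrete, here is a minimal sketch of one way to pick collectively diverse examples from embedding vectors. It uses greedy farthest-point (max-min) selection under cosine distance, a stand-in technique that is not necessarily the exact algorithm Directed Diversity uses. The function names (`select_diverse`, `mean_pairwise_distance`) and the toy 2-D vectors are hypothetical; real embeddings would come from a pretrained word or sentence embedding model.

```python
import math
from typing import List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def select_diverse(embeddings: List[Sequence[float]], k: int) -> List[int]:
    """Greedy farthest-point selection (hypothetical stand-in):
    repeatedly pick the candidate whose nearest already-selected
    example is as far away as possible."""
    if not embeddings or k <= 0:
        return []
    selected = [0]  # seed with the first candidate (an arbitrary choice)
    # min_dist[i] = distance from candidate i to its closest selected example
    min_dist = [cosine_distance(e, embeddings[0]) for e in embeddings]
    while len(selected) < min(k, len(embeddings)):
        best = max(
            (i for i in range(len(embeddings)) if i not in selected),
            key=lambda i: min_dist[i],
        )
        selected.append(best)
        # Update each candidate's distance to the (now larger) selected set.
        for i, e in enumerate(embeddings):
            min_dist[i] = min(min_dist[i], cosine_distance(e, embeddings[best]))
    return selected


def mean_pairwise_distance(embeddings: List[Sequence[float]]) -> float:
    """A simple dispersion-style diversity score for a set of messages."""
    n = len(embeddings)
    if n < 2:
        return 0.0
    total = sum(
        cosine_distance(embeddings[i], embeddings[j])
        for i in range(n)
        for j in range(i + 1, n)
    )
    return total / (n * (n - 1) / 2)


if __name__ == "__main__":
    # Hypothetical 2-D "embeddings" for five candidate example ideas.
    candidates = [[1.0, 0.0], [0.99, 0.1], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]]
    print(select_diverse(candidates, 3))  # [0, 4, 2]
```

The near-duplicate candidate `[0.99, 0.1]` is never picked because it sits almost on top of the seed. Mean pairwise distance is only one simple metric; the study also relied on other diversity metrics plus human and expert judgements, which this sketch does not reproduce.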

Our second study investigates how a health chatbot's conversational style affects the quality of users' responses. The tone and language a chatbot uses can influence user perceptions and the quality of information sharing. This is especially important in the health domain, where detailed information is needed for diagnosis. In two user studies on Amazon Mechanical Turk, we investigated the effect of a chatbot's conversational style (formal vs casual) on user perceptions, likelihood to disclose, and the quality of user utterances. Overall, the results indicate benefits of using a formal conversational style in the health domain. In the first user study, 187 users rated their likelihood of disclosing their income level, credit score, or medical history to a chatbot helping them find life insurance. When the chatbot asked for medical history, users perceived a formal conversational style as more competent and appropriate. In the second user study, 156 users discussed their dental flossing behaviour with a chatbot that used either a formal or a casual conversational style. Users who do not floss every day wrote more specific and actionable utterances when the chatbot used a formal conversational style.

In our final study, we investigate the format a chatbot uses when referencing a user's utterance from a previous chatting session. This study is motivated in part by the Personalisation-Privacy Paradox: a tension between providing personalised services that improve users' experience and providing less personalised services so as not to seem invasive. To explore this trade-off, we investigated how a chatbot's way of referencing previous conversations with a user affects the user's privacy concerns and perceptions. In a three-week longitudinal between-subjects study, 169 participants talked to a chatbot that either (1) None: did not explicitly reference previous user utterances, (2) Verbatim: referenced previous utterances verbatim, or (3) Paraphrase: used paraphrases to reference previous utterances. Participants found the Verbatim and Paraphrase chatbots more intelligent and engaging. However, the Verbatim chatbot also raised privacy concerns among participants. After the study, we conducted semi-structured interviews with 15 participants to understand, for example, why they preferred certain conditions or had privacy concerns. These findings can help designers choose an appropriate way of referencing previous user utterances and inform the design of longitudinal dialogue scripts.
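As a rough illustration of the three conditions, the sketch below builds a session-opening line from a stored utterance. Everything here is hypothetical: the `ReferenceFormat` enum, the `opening_line` helper, the prompt wording, and the example utterance are invented for illustration. In particular, `crude_paraphrase` is only a naive first-person to second-person word swap standing in for whatever paraphrasing the study's chatbot actually used.

```python
import re
from enum import Enum


class ReferenceFormat(Enum):
    NONE = "none"
    VERBATIM = "verbatim"
    PARAPHRASE = "paraphrase"


# Hypothetical, deliberately crude pronoun swaps. This ignores verb
# agreement (e.g. "I was" becomes "you was") and context entirely.
_PRONOUN_SWAPS = {
    "i": "you", "me": "you", "my": "your", "mine": "yours",
    "myself": "yourself", "am": "are", "i'm": "you're",
}


def crude_paraphrase(utterance: str) -> str:
    """Swap first-person words for second-person ones and drop end punctuation."""
    def swap(match: re.Match) -> str:
        word = match.group(0)
        return _PRONOUN_SWAPS.get(word.lower(), word)

    return re.sub(r"[A-Za-z']+", swap, utterance).rstrip(".!?")


def opening_line(fmt: ReferenceFormat, previous_utterance: str) -> str:
    """Compose the first chatbot turn of a new session (hypothetical wording)."""
    follow_up = "How have things been since we last chatted?"
    if fmt is ReferenceFormat.VERBATIM:
        # Quote the user's earlier words exactly.
        return f'Last time you said: "{previous_utterance}" {follow_up}'
    if fmt is ReferenceFormat.PARAPHRASE:
        # Restate the earlier utterance in the chatbot's own words.
        return f"Last time you mentioned that {crude_paraphrase(previous_utterance)}. {follow_up}"
    # NONE: no explicit reference to the previous session.
    return follow_up


if __name__ == "__main__":
    stored = "I walked for 20 minutes after dinner."  # hypothetical utterance
    for fmt in ReferenceFormat:
        print(fmt.value, "->", opening_line(fmt, stored))
```

Keeping the follow-up question identical across conditions means only the referencing format varies, mirroring the between-subjects manipulation described above. A production chatbot would need a far better paraphraser than a word-level swap.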

In summary, we have investigated how to create more effective digital health interventions: from generating health messages, to choosing an appropriate formality of messaging, to formatting messages that reference a user's previous utterances.