A New Perspective For Large-Scale Autonomous Navigation

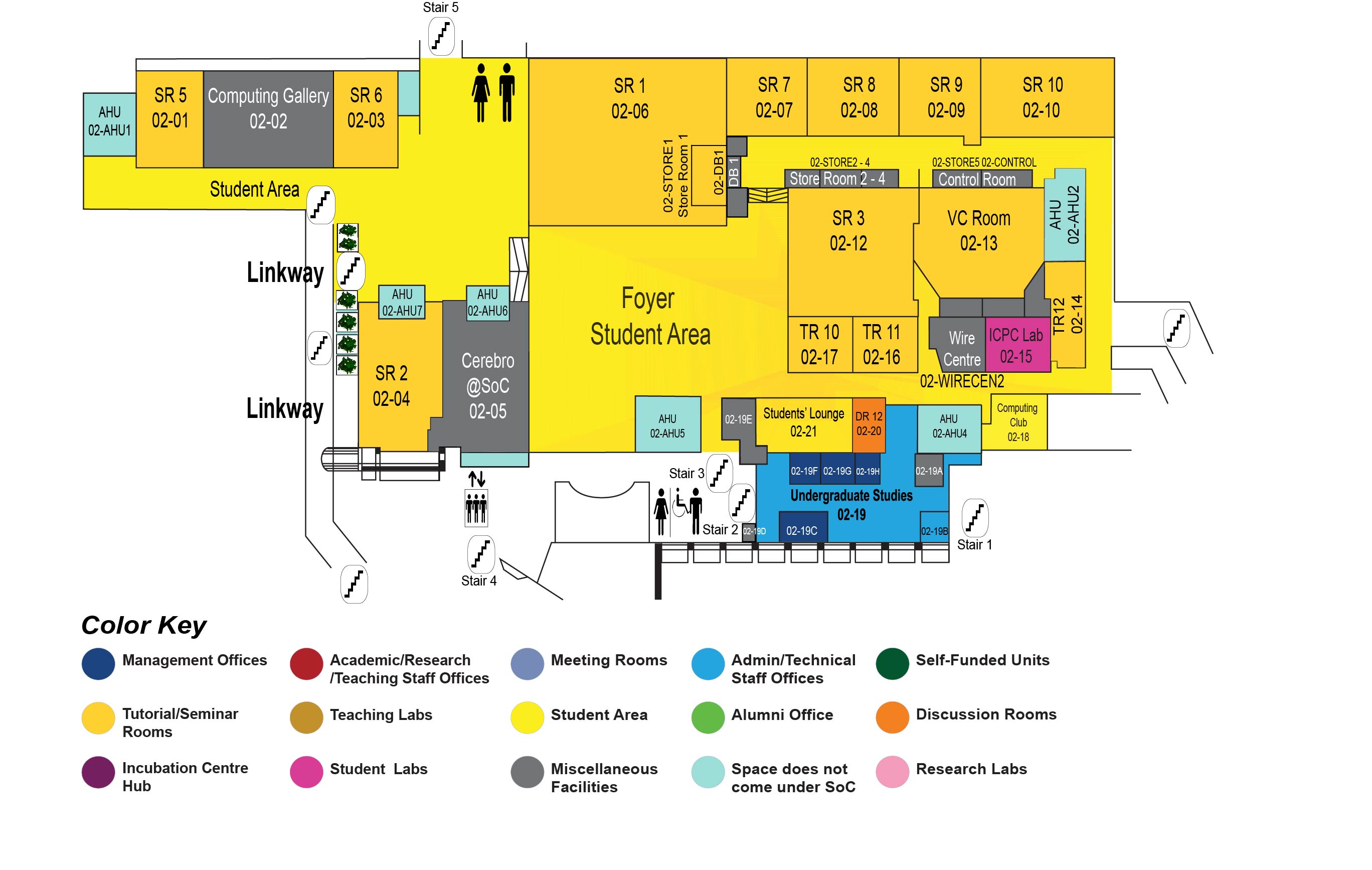

COM1 Level 2

Cerebro, COM1-02-05

Abstract:

This thesis presents a new system architecture, algorithms, an implementation, and an experimental evaluation for large-scale autonomous navigation in complex, highly variable environments. The system relies only on coarse prior information, i.e., a floor plan, and on RGB camera sensors, without the need for accurate localization.

Autonomous driving has been a human dream for decades. Despite the rapid success of deep learning in perception and control, autonomous navigation still falls far short of human-level performance in people's daily lives. Two fundamental requirements stand in the way: a traditional navigation system needs a highly accurate map of the working environment and highly accurate localization with respect to that map. Together they form a chicken-and-egg dilemma. Without a precise map, how do we localize accurately? And without accurate localization, how do we build a precise map of an unknown environment?

This thesis revisits the autonomous navigation problem from a different perspective. We decouple the problem into high-level guidance and low-level navigation skills. We argue that local navigation skills are highly repeatable: human drivers learn driving skills before being licensed, and those skills then let them drive anywhere in the world by following the traffic rules. Why shouldn't robots have similar skills that let them navigate anywhere by following specific rules?

In our first work, we answer one question: How can a delivery robot navigate reliably to a destination in a new office building with minimal prior information? To tackle this challenge, we introduce a two-level hierarchical approach that integrates model-free deep learning and model-based path planning. At the low level, a neural network motion controller, the intention-net, is trained end-to-end to provide robust local navigation. The intention-net maps images from a single monocular camera and "intentions" directly to robot controls. At the high level, a path planner uses a crude map, i.e., a 2-D floor plan, to compute a path from the robot's current location to the goal. The planned path provides the intentions to the intention-net. Preliminary experiments suggest that the learned motion controller is robust against perceptual uncertainty and that, when integrated with a path planner, it generalizes effectively to new environments and goals.
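The high-level half of this two-level scheme can be illustrated with a minimal sketch. It assumes a toy grid floor plan, a breadth-first planner, and a hypothetical discrete intention set (`go_forward`, `turn_left`, `turn_right`); none of these details are taken from the thesis, and the learned intention-net that would consume the intentions together with camera images is not modeled here.

```python
from collections import deque

# Hypothetical coarse floor plan: '#' is a wall, everything else is free space.
FLOOR_PLAN = [
    "#######",
    "#S...##",
    "###.###",
    "#...G.#",
    "#######",
]


def find(grid, symbol):
    """Return the (row, col) of the first cell containing `symbol`."""
    for r, row in enumerate(grid):
        c = row.find(symbol)
        if c != -1:
            return (r, c)
    raise ValueError(f"{symbol!r} not found in floor plan")


def plan_path(grid, start, goal):
    """Breadth-first search over 4-connected free cells; returns a list of cells."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[nr])
            if inside and grid[nr][nc] != "#" and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal unreachable


def path_to_intentions(path):
    """Turn consecutive path segments into one discrete intention per interior cell."""
    intentions = []
    for prev, cur, nxt in zip(path, path[1:], path[2:]):
        h1 = (cur[0] - prev[0], cur[1] - prev[1])  # heading into the cell
        h2 = (nxt[0] - cur[0], nxt[1] - cur[1])    # heading out of the cell
        # Rows grow downward, so a positive cross product is a left turn.
        cross = h1[0] * h2[1] - h1[1] * h2[0]
        if cross > 0:
            intentions.append("turn_left")
        elif cross < 0:
            intentions.append("turn_right")
        else:
            intentions.append("go_forward")
    return intentions


path = plan_path(FLOOR_PLAN, find(FLOOR_PLAN, "S"), find(FLOOR_PLAN, "G"))
print(path_to_intentions(path))
```

In a full system of this kind, each intention would be passed to the low-level controller alongside the current camera image, so the planner never needs a precise pose, only a rough position on the floor plan.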

Secondly, we find that low-level motion controllers must handle partial observability due to the limited sensor coverage and dynamic environment changes. We further propose to train a neural network (NN) controller for local navigation via imitation learning. To tackle complex visual observations, we extract multi-scale spatial representations through CNNs. To tackle partial observability, we aggregate multi-scale spatial information over time and encode it in LSTMs. We use a separate memory module for each behavior mode to learn multimodal behaviors. Importantly, we integrate the multiple neural network modules into a unified controller that achieves robust performance for visual navigation in complex, partially observable environments. We implemented the controller on the quadrupedal Spot robot and evaluated it on three challenging tasks: adversarial pedestrian avoidance, blind-spot obstacle avoidance, and elevator riding. The experiments show that the proposed NN architecture significantly improves navigation performance.

Thirdly, imitation learning via behavior cloning suffers from compounding errors. To tackle this challenge, we integrate inverse reinforcement learning (IRL) with our "intention" design to learn a better controller. An IRL agent learns to act intelligently by observing expert demonstrations and learning the expert's underlying reward function. We present Context-Hierarchy IRL (CHIRL), a new IRL algorithm that exploits the context to scale up IRL and learn the reward functions of complex behaviors. CHIRL models the context hierarchically as a directed acyclic graph; it represents the reward function as a corresponding modular deep neural network that associates each network module with a node of the context hierarchy. The context hierarchy and the modular reward representation enable data sharing across multiple contexts and context-specific state abstraction, significantly improving learning performance. CHIRL naturally connects with hierarchical task planning when the context hierarchy represents subtask decomposition, and it enables the incorporation of prior knowledge about the causal dependencies among subtasks. This makes CHIRL capable of solving large, complex tasks by decomposing them into subtasks and solving each subtask in turn. Experiments on benchmark tasks, including a large-scale autonomous driving task in the CARLA simulator, show promising results in scaling up IRL for tasks with complex reward functions.
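The idea of a modular reward tied to a context hierarchy can be sketched in a few lines. This is only an illustration under stated assumptions: the context names, the linear modules standing in for deep network modules, and the rule that a context's reward is the sum of the modules on its node and all of its ancestors are all hypothetical choices, not the CHIRL formulation itself.

```python
# Hypothetical context hierarchy as a DAG: each node maps to its parent nodes.
PARENTS = {
    "drive": [],
    "follow_lane": ["drive"],
    "turn": ["drive"],
    "turn_left": ["turn"],
    "turn_right": ["turn"],
}

# One reward module per node. Linear weights stand in for neural network modules.
WEIGHTS = {
    "drive": [1.0, 0.0],
    "follow_lane": [0.0, -1.0],
    "turn": [0.0, 1.0],
    "turn_left": [0.5, 0.0],
    "turn_right": [-0.5, 0.0],
}


def active_nodes(context, parents):
    """Return the context node and all of its ancestors, each exactly once."""
    seen = []
    stack = [context]
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.append(node)
            stack.extend(parents[node])
    return seen


def reward(context, features):
    """Assumed composition rule: sum the module outputs along the hierarchy."""
    total = 0.0
    for node in active_nodes(context, PARENTS):
        total += sum(w * f for w, f in zip(WEIGHTS[node], features))
    return total


features = [2.0, 3.0]  # hypothetical state features
print(reward("turn_left", features))    # uses drive, turn, and turn_left modules
print(reward("follow_lane", features))  # uses drive and follow_lane modules
```

Because the `drive` module contributes to every context in this sketch, demonstrations from any context would update it, which is one way to picture the data sharing across contexts that the abstract describes.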

Lastly, we develop a large-scale autonomous navigation system for a complex multi-terrain, multi-floor environment, without the need to pre-scan the whole environment. Using existing floor plans and GPS maps, we design a two-level map system, combining topological and topometric maps, at the high level. At the low level, we use our neural network controller to learn repeatable navigation skills conditioned on global guidance. Our system has been successfully deployed on Boston Dynamics Spot robots and can navigate autonomously on the NUS campus. It has covered more than 100 kilometers autonomously since February 2021.