Mobile Multitasking with Dynamic Information on Smart Glasses: Techniques and Tools to Facilitate Video Learning while Walking

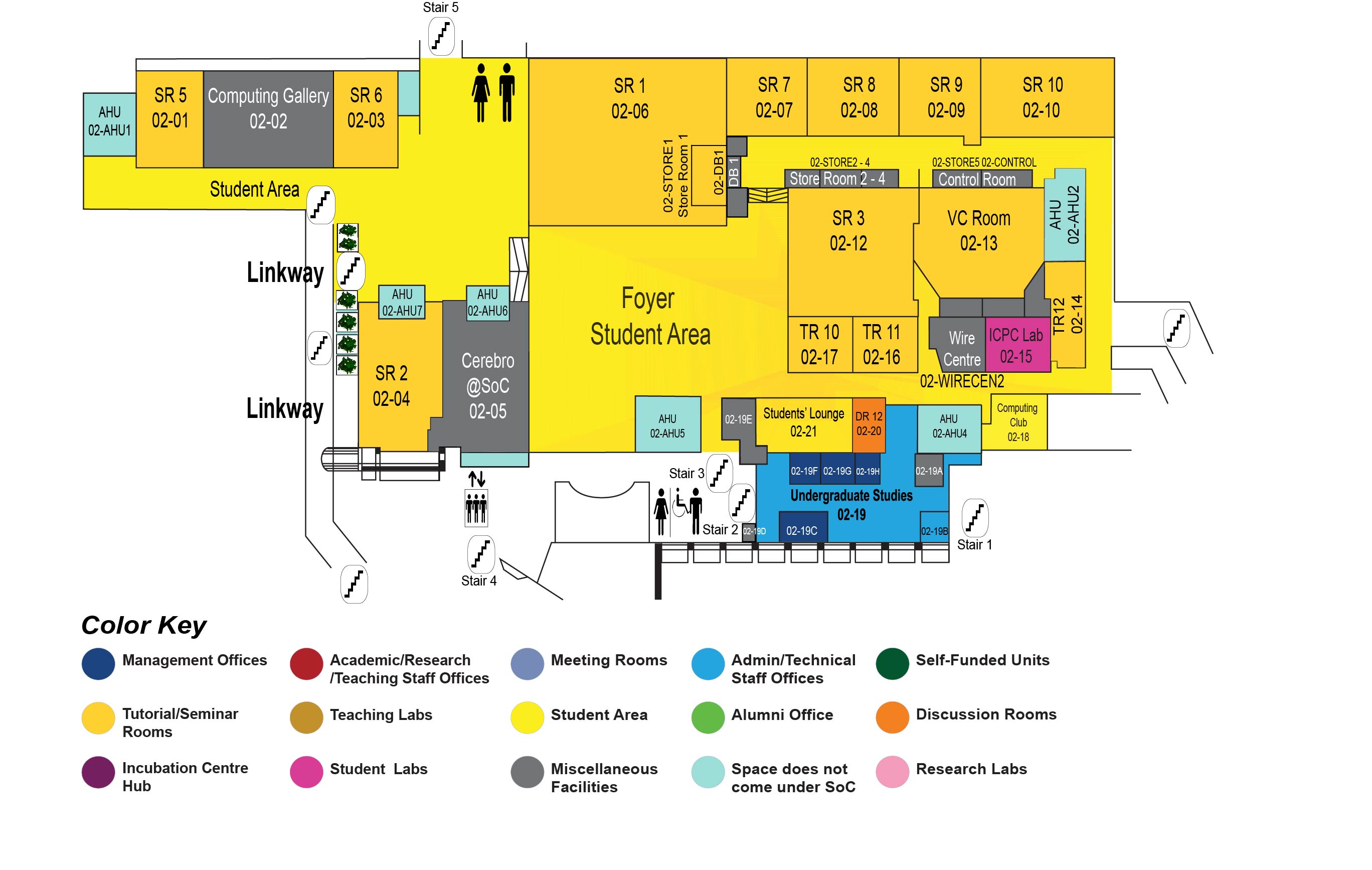

COM1 Level 2

SR9, COM1-02-09

Abstract:

Multitasking with digital information has become a necessity in our day-to-day lives, so much so that we engage in it even while walking. Recent research suggests that the emerging platform of smart glasses (Optical See-Through Head-Mounted Displays, or OST-HMDs) can be a better alternative to smartphones for such mobile multitasking: their see-through displays and heads-up wearability allow them to support tasks such as reading and editing static textual information while walking.

In this thesis, we explore how OST-HMDs can support the use of dynamic, video-based information while walking. In particular, we focus on the task of learning from lecture videos while walking. Our initial research showed that existing videos need to be adapted to support this type of learning on OST-HMDs. Hence, in our first work, we investigated the impact of dynamic drawing, a text presentation style used in lecture videos for its learning and engagement benefits. A systematic analysis of this style's factors, namely the font style and the motion of text appearance, showed that displaying each entire word at once in a typeface font yields better learning performance, and that this presentation is especially effective while walking.

In our second work, we conducted a controlled study of information presentation techniques aimed at better balancing users' attention and reducing their cognitive load when using OST-HMDs. Our findings led to the development of the LSVP (Layered Serial Visual Presentation) style, which incorporates properties such as sequentiality and strict data persistence. We also compared the LSVP style on OST-HMDs with traditional video styles on smartphones and found that OST-HMDs improved both learning scores and walking speed in navigation tasks.

Finally, to facilitate the adaptation of lecture videos for viewing on OST-HMDs, we developed VidAdapter, a tool that aims to make the adaptation process more efficient. VidAdapter automatically extracts meaningful elements from the video, lets users easily rearrange these elements both spatially and temporally, and streamlines the process of modifying their visual appearance. We evaluated the tool with both experienced and novice video editors and found that it was strongly preferred over traditional tools and improved the efficiency of the adaptation process by over 60% on average.

Overall, our results demonstrate the feasibility of using OST-HMDs for mobile multitasking with dynamic information. We identify effective ways of presenting dynamic video content to enable such multitasking and build artifacts that help support it in practice.