Visual Relation Driven Video Question Answering

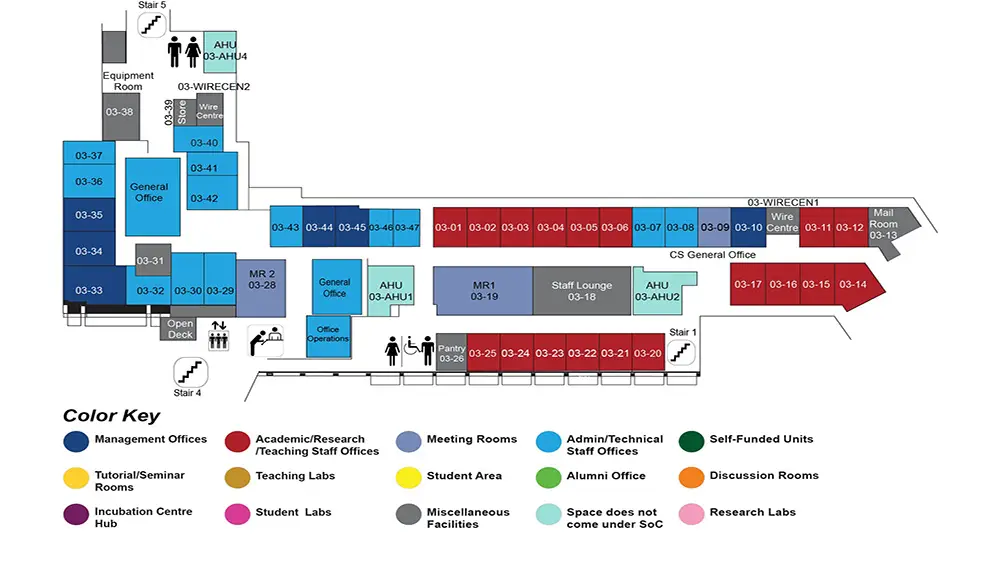

COM1 Level 3

MR1, COM1-03-19

Abstract:

It has been a long-term goal to develop Artificial Intelligence (AI) systems that can demonstrate their understanding of the dynamic visual world by responding to natural language queries about videos, which directly reflect our physical surroundings. Video Question Answering (VideoQA), a typical testbed for evaluating this capability, has thus received much attention. While existing works have shown continual progress, most advances to date concern questions that test the recognition or superficial description of video contents; answering questions that require visual relation reasoning remains much less explored. Visual relations, which weave together video elements (e.g., objects, actions, and activities), enable holistic and in-depth video understanding that goes beyond coarse recognition to fine-grained reasoning, or even to explaining intentions and goals. This thesis therefore studies visual relation driven VideoQA through four works: the construction of a benchmark dataset and the development of three visual graph models.

First, as there is no effective VideoQA dataset that features inference over real-world visual relations, our first effort is to collect and curate such a dataset on top of our existing visual relation dataset. The dataset is unique in two ways: 1) it emphasizes comprehension of the causal and temporal relations between different actions, and 2) it features real-world object interactions in space-time. Along with the dataset, we provide comprehensive baselines, analyses, and heuristic observations to facilitate future studies.

Second, as our first attempt toward solving relation driven VideoQA, we explicitly ground relations in videos. Given a textual relation triplet of the form ⟨subject, predicate, object⟩, the task is to spatio-temporally localize the visual subject and object in the paired video. Our intuition is that relations constitute the most informative parts of textual queries: by grounding the relations, one can largely ground the query in the video, which facilitates reasoning over the grounded video contents to derive the correct answers. To ground the relations, we model a video as a spatio-temporal region graph and collaboratively optimize two sequences of regions over the graph through relation attending and reconstruction. The pair of region sequences that gives the maximal reconstruction accuracy is returned as the grounding result.
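
To make the "relation attending" step more concrete, here is a minimal sketch of one plausible reading of it, not the thesis's implementation. It assumes that each frame already provides a list of candidate region features and that the subject and object of the triplet have been embedded into query vectors by some text encoder (not shown). Each query attends over the regions of every frame with dot-product softmax attention, and the most-attended region per frame forms a region sequence. The helper names `attend_regions` and `ground_relation` are hypothetical, and the reconstruction objective that selects the final pair of sequences is deliberately omitted.

```python
import math
from typing import List, Tuple

Vector = List[float]

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend_regions(frames: List[List[Vector]], query: Vector) -> Tuple[List[int], List[List[float]]]:
    """Hypothetical helper: per frame, softmax-attend `query` over candidate regions.

    Returns the index of the most-attended region in each frame (a region
    sequence) together with the soft attention weights of every frame.
    """
    indices, weights_per_frame = [], []
    for regions in frames:
        weights = softmax([dot(r, query) for r in regions])
        weights_per_frame.append(weights)
        indices.append(max(range(len(regions)), key=weights.__getitem__))
    return indices, weights_per_frame

def ground_relation(frames: List[List[Vector]], subject_query: Vector, object_query: Vector) -> Tuple[List[int], List[int]]:
    """Return one region sequence for the subject and one for the object."""
    subject_seq, _ = attend_regions(frames, subject_query)
    object_seq, _ = attend_regions(frames, object_query)
    return subject_seq, object_seq

# Toy input (assumed): 2 frames, 2 candidate regions per frame, 2-d features.
frames = [[[1.0, 0.0], [0.0, 1.0]], [[0.2, 0.1], [0.9, 0.8]]]
print(ground_relation(frames, subject_query=[1.0, 0.0], object_query=[0.0, 1.0]))
# → ([0, 1], [1, 1])
```

In a trainable model the soft weights are what gradients flow through; the hard argmax is only a read-out at inference time.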

Third, while the spatio-temporal region graph effectively captures spatial and semantic relations, it grounds only a single relation and neglects the hierarchical and compositional relations among video elements. These weaknesses impede its application in real-world QA scenarios. To address them, we need to align video elements at different granularity levels with the corresponding linguistic concepts in the language queries, and to enable joint reasoning over multiple kinds of relations. To this end, we model the video as a query-conditioned hierarchical graph. The graph explicitly captures hierarchical and compositional relations, which are used to reason over and aggregate video elements at different granularity levels for multi-granular question answering.
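
The core idea of query-conditioned, bottom-up aggregation can be illustrated with a small sketch. It assumes a four-level hierarchy (objects → frames → clips → video), precomputed object features, and a question embedding; every level is pooled with dot-product attention conditioned on the question, with no learned parameters. These level names and the pooling rule are illustrative assumptions. The graph described above also reasons over relations among nodes within each level, which this sketch omits.

```python
import math
from typing import Dict, List

Vector = List[float]

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def conditioned_pool(items: List[Vector], query: Vector) -> Vector:
    """Attention-pool `items` into one vector, weighting each by its affinity to `query`."""
    weights = softmax([dot(v, query) for v in items])
    dim = len(items[0])
    return [sum(w * v[d] for w, v in zip(weights, items)) for d in range(dim)]

def encode_hierarchy(video: List[List[List[Vector]]], query: Vector) -> Dict[str, object]:
    """Bottom-up, query-conditioned aggregation: objects -> frames -> clips -> video.

    video[c][f][o] is the (assumed) feature of object o in frame f of clip c.
    Every level is returned so a question can be answered at the granularity it needs.
    """
    frame_vecs = [[conditioned_pool(objects, query) for objects in clip] for clip in video]
    clip_vecs = [conditioned_pool(frames, query) for frames in frame_vecs]
    video_vec = conditioned_pool(clip_vecs, query)
    return {"frames": frame_vecs, "clips": clip_vecs, "video": video_vec}
```

Keeping the frame- and clip-level vectors, rather than only the final video vector, is what allows a question about a brief moment and a question about the whole video to draw on different levels of the same hierarchy.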

Finally, both the spatio-temporal region graph and the query-conditioned hierarchical graph construct visual graphs on static frames, while temporal relations are only coarsely modelled over pooled graph representations. Such approaches cannot effectively capture the temporal dynamics of objects and their relations within local video clips. To tackle this problem, we model the video as a hierarchical dynamic visual graph that exploits transformer architectures to learn the temporal dynamics of video elements at both local and global scopes. Additionally, to alleviate data scarcity, we train the resulting video graph transformer model with contrastive learning and pretrain it on a large-scale, weakly-paired video-text dataset via self-supervision.
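
A common way to set up contrastive video-text pretraining is a symmetric InfoNCE loss with in-batch negatives, sketched below. This is an assumption for illustration, not a statement of the exact objective used in the thesis. The video and text embeddings are assumed to come from the video graph transformer and a text encoder (neither is shown), and the temperature of 0.07 is an assumed default.

```python
import math
from typing import List

Vector = List[float]

def l2_normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def log_softmax_at(logits: List[float], i: int) -> float:
    """log(softmax(logits)[i]) computed with the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return logits[i] - log_sum_exp

def info_nce(video_embs: List[Vector], text_embs: List[Vector], temperature: float = 0.07) -> float:
    """Symmetric InfoNCE over a batch: (video_i, text_i) is the positive pair,
    and every other pairing in the batch acts as a negative."""
    v = [l2_normalize(x) for x in video_embs]
    t = [l2_normalize(x) for x in text_embs]
    n = len(v)
    # sims[i][j]: temperature-scaled cosine similarity of video i and text j.
    sims = [[sum(a * b for a, b in zip(v[i], t[j])) / temperature for j in range(n)] for i in range(n)]
    video_to_text = -sum(log_softmax_at(sims[i], i) for i in range(n)) / n
    text_to_video = -sum(log_softmax_at([sims[j][i] for j in range(n)], i) for i in range(n)) / n
    return (video_to_text + text_to_video) / 2
```

The loss is low when each video is most similar to its own caption and high when captions are mismatched; larger batches supply more negatives per positive pair.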

To summarize, this thesis studies visual relation driven VideoQA. We contribute the first manually annotated VideoQA dataset that is rich in real-world visual relations. We then propose three effective visual graph models that capture visual relations from spatial, temporal (both local and global), hierarchical, and compositional perspectives. The proposed methods cover both learning from limited data with strong supervision, by incorporating structural priors, and learning from vast amounts of Web data with self-supervised pretraining.