Enhancing Deep Learning with Symbolic Domain Knowledge

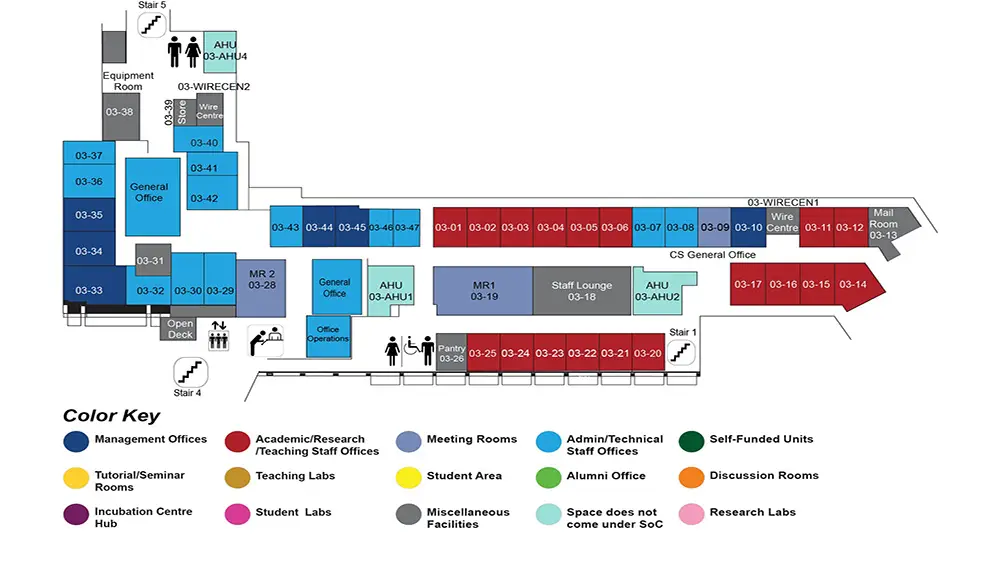

COM1 Level 3

MR1, COM1-03-19

Abstract:

Deep neural networks have brought significant advances to a variety of tasks in machine learning and artificial intelligence. However, despite their effectiveness and flexibility, deep models have two drawbacks: low data efficiency and a lack of robustness.

Firstly, deep neural networks often require large amounts of training data, which limits their application where data is not readily available, such as in the medical domain and in imitation learning, where expert knowledge and demonstrations are typically expensive and difficult to procure. In many real-world situations, symbolic domain knowledge is available in addition to data, such as expert knowledge from doctors and high-level structured task information in imitation learning. However, incorporating symbolic domain knowledge into deep neural networks in a scalable and effective manner remains a challenging open problem.

The first part of this thesis aims to improve the data efficiency of deep neural networks by incorporating symbolic domain knowledge. More specifically, we focus on propositional logic and on linear temporal logic for sequence learning tasks; both logics are unambiguous, expressive, and interpretable by humans. We propose two logic graph embedding frameworks, Logic Embedding Network with Semantic Regularization (LENSR) and Temporal-Logic Embedded Automata Framework (T-LEAF), which take propositional logic and linear temporal logic as inputs, respectively. LENSR and T-LEAF project logic formulae (and assignments) onto a manifold via graph neural networks. Their objective is to minimize the distances between the embeddings of formulae and their satisfying assignments in a shared latent embedding space; the learned embedding space then guides the training of the target deep learning models. We demonstrate the effectiveness of both frameworks in visual relation prediction, sequential human action recognition, and imitation learning tasks.
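As a rough illustration of the embedding objective described above, the following toy sketch enumerates the satisfying assignments of a small propositional formula and evaluates a margin-based loss that pulls a satisfying assignment closer to the formula than an unsatisfying one. The hand-picked 2-D vectors stand in for the outputs of a graph neural network, and the triplet form, the margin, the use of an unsatisfying assignment, and all function names are assumptions made for illustration; they are not taken from the thesis.

```python
import math
from itertools import product


def satisfying_assignments(formula, variables):
    """Enumerate the truth assignments over `variables` that make `formula` true."""
    models = []
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if formula(assignment):
            models.append(assignment)
    return models


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(formula_emb, sat_emb, unsat_emb, margin=1.0):
    """Hinge loss (assumed form): the satisfying assignment should be closer
    to the formula than the unsatisfying one by at least `margin`."""
    gap = euclidean(formula_emb, sat_emb) - euclidean(formula_emb, unsat_emb)
    return max(0.0, gap + margin)


# Toy formula: (a AND b) OR (NOT c)
def toy_formula(s):
    return (s["a"] and s["b"]) or (not s["c"])


models = satisfying_assignments(toy_formula, ["a", "b", "c"])

# Hypothetical embeddings; in practice a graph neural network would produce them.
formula_emb = [0.0, 0.0]
sat_emb = [3.0, 4.0]    # distance 5.0 from the formula embedding
unsat_emb = [0.0, 5.5]  # distance 5.5 from the formula embedding

loss = triplet_loss(formula_emb, sat_emb, unsat_emb)
print(len(models), loss)
```

In this sketch the loss is nonzero because the satisfying assignment is only 0.5 closer to the formula than the unsatisfying one, which is less than the margin; training an embedder would adjust the vectors until that gap reaches the margin.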

Secondly, recent work has shown that deep neural networks are vulnerable to adversarial attacks, in which minor perturbations of input signals cause models to behave inappropriately and unexpectedly. Humans, on the other hand, appear robust to these particular sorts of input variations. We posit that part of this robustness stems from accumulated knowledge about the world.

In the second part of the thesis, we propose to leverage prior knowledge to defend against adversarial attacks in reinforcement learning (RL) settings using a framework we call Knowledge-based Policy Recycling (KPR). Unlike previous defense methods such as adversarial training and robust learning, KPR incorporates domain knowledge over a set of auxiliary tasks and learns relations among them from interactions with the environment via a Graph Neural Network (GNN). Moreover, KPR can use any relevant policy as an auxiliary policy and, importantly, does not assume access to, or information about, the adversarial attack. Empirically, KPR yields policies that are more robust to various adversarial attacks in Atari games and a simulated Robot Foodcourt environment.