Effective and Efficient Federated Learning on Non-IID Data

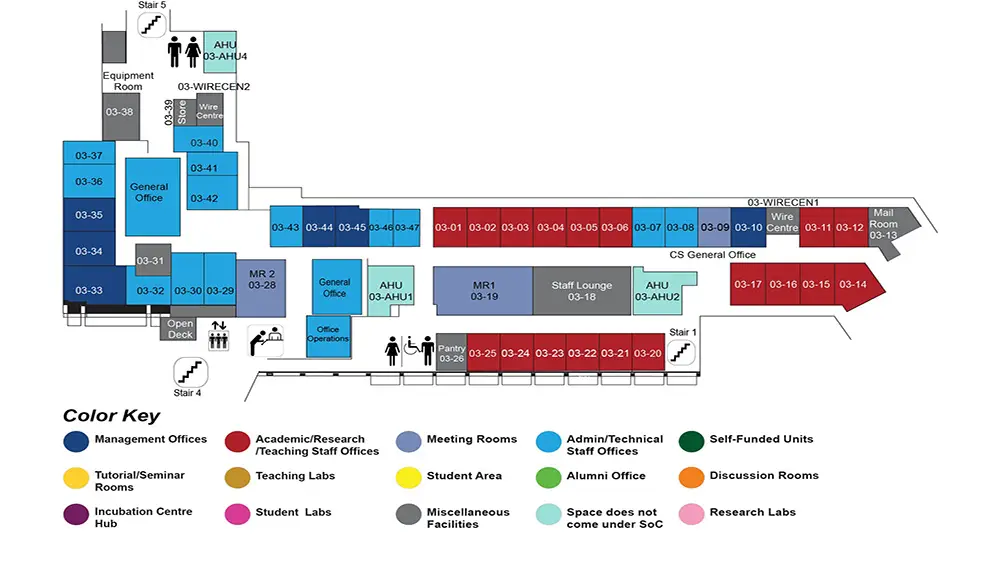

COM1 Level 3

MR1, COM1-03-19

Abstract:

Machine learning is data-hungry. However, data are usually distributed across multiple places and cannot be transferred to a single server to train a machine learning model because of privacy regulations and communication constraints. Federated Learning (FL) has emerged as a solution that enables multiple parties (e.g., mobile devices and hospitals) to collaboratively solve a machine learning problem without exchanging their local data. In practice, the data of different parties are usually not independent and identically distributed (i.e., non-IID), which significantly affects the accuracy of FL.

Firstly, there is no experimental study that systematically examines existing FL approaches on non-IID data, as these approaches adopt very rigid data partitioning strategies among parties, which are hardly representative or thorough. To help researchers better understand and study the non-IID data setting in federated learning, we propose comprehensive data partitioning strategies that cover the typical non-IID data cases. Moreover, we conduct extensive experiments to study the effect of non-IID data in FL. Through the benchmark, we identify non-IID data as a key challenge in FL.
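To make the idea of a non-IID partitioning strategy concrete, the following is a minimal sketch of one commonly used way to simulate label-distribution skew. It is an illustration under stated assumptions, not necessarily one of the strategies proposed in this work: the function name `dirichlet_label_partition`, the concentration parameter `beta`, and the use of a Dirichlet distribution are all assumptions introduced here.

```python
import random
from collections import defaultdict


def dirichlet_label_partition(labels, num_parties, beta=0.5, seed=0):
    """Split sample indices across parties with label-distribution skew.

    Hypothetical helper for illustration. For each class, the share given
    to each party is drawn from a Dirichlet(beta) distribution; a smaller
    beta yields a more skewed (more non-IID) partition.
    """
    rng = random.Random(seed)

    # Group sample indices by class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    parties = [[] for _ in range(num_parties)]
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)

        # A Dirichlet sample is a vector of Gamma draws normalized to sum to 1.
        draws = [rng.gammavariate(beta, 1.0) for _ in range(num_parties)]
        total = sum(draws)

        # Cut this class's indices at the cumulative proportions.
        start, cumulative = 0, 0.0
        for p in range(num_parties):
            cumulative += draws[p] / total
            if p == num_parties - 1:
                end = len(idxs)  # the last party takes the remainder
            else:
                end = round(cumulative * len(idxs))
            parties[p].extend(idxs[start:end])
            start = end
    return parties
```

Every sample is assigned to exactly one party, so the partition can be applied to any labeled dataset before running an FL algorithm; varying `beta` gives a simple knob for the degree of skew.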

Secondly, our benchmark shows that existing FL approaches fail to achieve high accuracy on image datasets with deep learning models under non-IID data. To increase the effectiveness of FL on non-IID data, we propose model-contrastive federated learning (MOON). MOON is a simple and effective federated learning framework. The key idea of MOON is to utilize the similarity between model representations to correct the local training of individual parties, i.e., to conduct contrastive learning at the model level. Our extensive experiments show that MOON significantly outperforms the other state-of-the-art federated learning algorithms on various image classification tasks.
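The model-level contrastive idea can be sketched as a loss term on representation vectors. The abstract states only that similarity between model representations is used, so the details below are assumptions made for illustration: cosine similarity as the similarity measure, the global model's representation as the positive, the party's previous local model's representation as the negative, and a `temperature` parameter. The function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def model_contrastive_loss(z_local, z_global, z_prev, temperature=0.5):
    """Contrastive term over model representations (illustrative sketch).

    z_local:  representation from the model being trained locally
    z_global: representation from the global model (assumed positive)
    z_prev:   representation from the previous local model (assumed negative)
    """
    pos = cosine(z_local, z_global) / temperature
    neg = cosine(z_local, z_prev) / temperature
    # -log(exp(pos) / (exp(pos) + exp(neg))) simplifies to log(1 + exp(neg - pos)).
    return math.log1p(math.exp(neg - pos))
```

Under these assumptions, the term is small when the local representation agrees with the global one and differs from the previous local one, and it would be added to the usual supervised loss during local training.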

Thirdly, while gradient boosting decision trees (GBDTs) have become very successful, federated GBDTs have not been well explored. Existing approaches are not efficient or effective enough for practical use. They suffer either from inefficiency, due to costly data transformations such as secret sharing and homomorphic encryption, or from low model accuracy, due to differential privacy designs. We propose SimFL, a practical federated GBDT framework that utilizes the similarity between local data to capture the global data distribution during local training. While the computation overhead of the training process is kept low, SimFL can significantly improve the predictive accuracy compared with the other

Finally, as a proper system for deploying federated decision trees is lacking, we develop a fast, efficient, and secure tree-based FL system named FedTree. FedTree supports horizontal and vertical federated training of GBDTs with a rich set of privacy protection techniques such as homomorphic encryption, secure aggregation, and differential privacy. With computation and communication optimizations, FedTree can achieve over 100x speedup compared with an existing FL system, while achieving the same model accuracy as centralized training.