Improving Usability of Machine Learning Model Explanations - Experiments with Visual and Verbal Cognitive Load

Dr Mohan Kankanhalli, Provost's Chair Professor, School of Computing

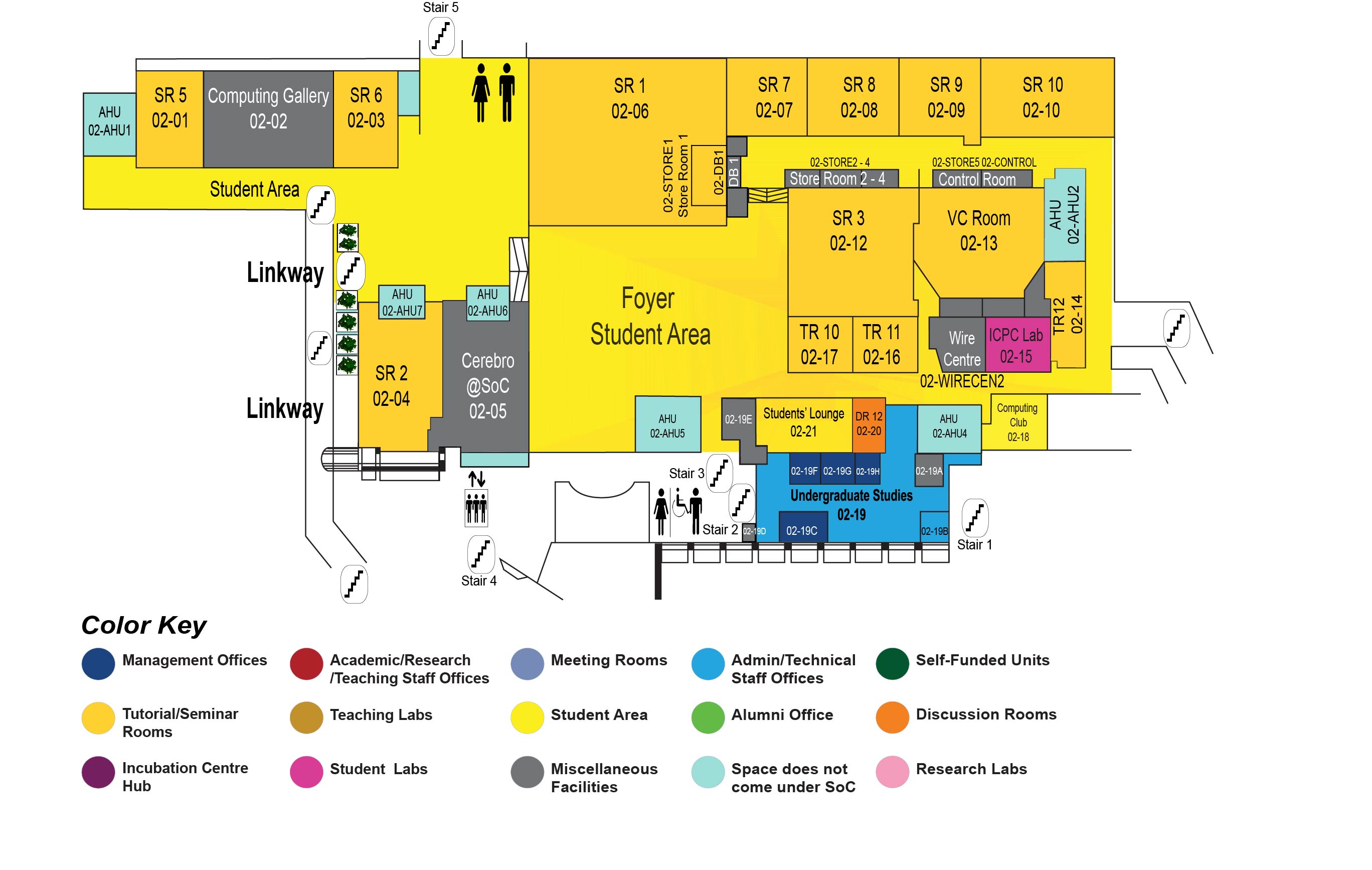

COM1 Level 2

SR10, COM1-02-10

Abstract:

Recent breakthroughs in machine learning have led to the widespread adoption of Artificial Intelligence (AI) in many spheres of human activity. These systems can be highly accurate and can make decisions based on a multitude of factors drawn from large volumes of data. Consequently, they are often too complex and opaque for users to understand. This has prompted widespread calls to make these systems explainable and to let users retain control. As a result, research in eXplainable Artificial Intelligence (XAI) has seen a flurry of activity, producing many new interpretable machine learning models and explanation techniques. However, it is not clear how useful these explanations are for non-expert end users. In this thesis, we investigate how to improve the usability of explanations through the theory-driven integration of human factor requirements into modelling, specifically visual and verbal cognitive load.

First, we conduct a semi-automated literature review of over 12,000 papers relating to explanations or explainable systems, using topic modelling, co-occurrence analysis and network analysis to map the research space across diverse domains such as algorithmic accountability, interpretable machine learning, context-awareness, cognitive psychology, and software learnability. Our analysis reveals fading and burgeoning trends in explainable systems and identifies domains that are intricately connected or mostly isolated. On this basis, we propose an agenda for HCI research and call for the closer collaboration between domains and communities that is needed to develop rigorous and usable XAI.
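The co-occurrence step can be illustrated with a minimal sketch. It assumes each paper has already been reduced to a list of keywords or topic labels (the toy keywords below are made up, not drawn from the review) and counts how often each pair appears in the same paper; the resulting weighted edges form the kind of network on which connectedness or isolation between domains can be analysed. The function names are hypothetical, and the review's actual pipeline, including its topic modelling, may differ.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(papers):
    """Count how often each pair of keywords appears in the same paper.

    `papers` is a list of keyword lists, one per paper (hypothetical input).
    Pairs are stored in sorted order so ("a", "b") and ("b", "a") are one edge.
    """
    counts = Counter()
    for keywords in papers:
        unique = sorted(set(keywords))  # ignore repeated keywords within a paper
        for a, b in combinations(unique, 2):
            counts[(a, b)] += 1
    return counts


def weighted_degree(counts):
    """Weighted degree of each keyword node in the co-occurrence network."""
    deg = Counter()
    for (a, b), w in counts.items():
        deg[a] += w
        deg[b] += w
    return deg


# Toy, made-up keyword lists standing in for three papers.
papers = [
    ["interpretability", "gam", "cognitive load"],
    ["interpretability", "accountability"],
    ["cognitive load", "interpretability", "visualization"],
]
edges = cooccurrence_counts(papers)
print(edges[("cognitive load", "interpretability")])  # 2
print(weighted_degree(edges)["interpretability"])  # 5
```

Keywords with high weighted degree act as hubs linking domains, while keywords whose edges stay within a small cluster hint at isolated communities.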

Second, we select one of the human factors uncovered by our literature analysis, cognitive load, and propose a framework for modelling it with Generalized Additive Models (GAMs). Drawing on cognitive psychology theories of graph comprehension, we formalize readability as visual cognitive chunks to measure and moderate the cognitive load of explanation visualizations. We present Cognitive-GAM (COGAM), which generates explanations with a desired cognitive load and accuracy by combining expressive nonlinear GAMs with simpler sparse linear models. We calibrated visual cognitive chunks against reading time in a user study, characterized the trade-off between cognitive load and accuracy on four datasets in simulation studies, and evaluated COGAM against baselines with users. We found that COGAM can decrease cognitive load without decreasing accuracy, or increase accuracy without increasing cognitive load.
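As a rough illustration of the trade-off between cognitive load and accuracy, the sketch below assumes that (i) a feature's visual chunks can be approximated by the number of monotone segments in its sampled shape function, (ii) a linear shape costs one chunk, and (iii) the squared deviation of a fitted line from the nonlinear shape stands in for lost accuracy, weighted against chunks by a hypothetical parameter `lam`. These are illustrative assumptions, not COGAM's published formulation, and all names are hypothetical.

```python
def count_visual_chunks(ys):
    """Approximate visual chunks as monotone segments of a sampled curve.

    Each maximal run where the curve keeps rising (or keeps falling) counts
    as one chunk; flat steps do not start a new chunk.
    """
    chunks, direction = 1, 0  # direction: +1 rising, -1 falling, 0 unknown
    for prev, cur in zip(ys, ys[1:]):
        step = (cur > prev) - (cur < prev)  # sign of the slope
        if step == 0:
            continue
        if direction != 0 and step != direction:
            chunks += 1  # slope changed sign: a new segment begins
        direction = step
    return chunks


def linear_fit(xs, ys):
    """Ordinary least-squares line through (xs, ys); returns fitted values."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx if sxx else 0.0
    return [my + slope * (x - mx) for x in xs]


def choose_shapes(features, lam):
    """Per feature, keep the nonlinear shape or replace it with a line.

    `features` maps a name to (xs, ys) samples of its nonlinear shape.
    Cost = squared deviation from the nonlinear shape (accuracy proxy)
    + lam * visual chunks; the cheaper option wins (ties go to linear).
    """
    choice = {}
    for name, (xs, ys) in features.items():
        line = linear_fit(xs, ys)
        line_loss = sum((a - b) ** 2 for a, b in zip(ys, line)) / len(ys)
        line_cost = line_loss + lam * 1  # a straight line is one chunk
        curve_cost = 0.0 + lam * count_visual_chunks(ys)  # no deviation from itself
        choice[name] = "linear" if line_cost <= curve_cost else "nonlinear"
    return choice


xs = [0, 1, 2, 3, 4]
features = {
    "age": (xs, [0, 1, 2, 3, 4]),  # already linear: one chunk
    "bmi": (xs, [0, 2, 0, 2, 0]),  # zig-zag: four chunks
}
print(choose_shapes(features, lam=0.1))  # {'age': 'linear', 'bmi': 'nonlinear'}
```

Raising `lam` penalizes chunks more heavily: at `lam=1.0` the zig-zag `bmi` shape switches to linear, because its extra chunks now cost more than the accuracy the line gives up.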

Third, we present CaptionCOGAM, which selects salient visual information from COGAM graphs and verbalizes it with extensible templates, automatically generating captions that balance verbal cognitive load against human interpretability. We quantify verbal cognitive load in terms of the number of graph propositions and sentence complexity, and human interpretability in terms of the accuracy of reconstructing a graph visualization from its caption and the accuracy of answering counterfactual questions. We conducted quantitative simulations to characterize the relationship between verbal cognitive load and human interpretability, and qualitative co-design interviews to investigate how users used captions, what they considered important to describe, and what additional intelligibility they demanded when answering counterfactual questions. We found that adding captions with moderated verbal cognitive load may improve interpretability, but more rigorous modelling and controls are required for further evaluation. Together, COGAM and CaptionCOGAM provide a unified framework for characterizing explanation parsimony, visual and verbal cognitive load, and human interpretability.
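A minimal sketch of template-based captioning, under stated assumptions: salient information is taken to be the monotone runs of a sampled shape function with the largest change in prediction, each run is verbalized by one hypothetical template sentence (one proposition), sentence complexity is proxied by mean words per sentence, and reconstruction accuracy is proxied by the share of rising or falling steps whose direction the caption states. None of these names, templates, or proxies are taken from CaptionCOGAM itself.

```python
def segment_trends(ys):
    """Split sampled shape-function values into monotone runs.

    Returns (start_idx, end_idx, trend) tuples, where trend is
    "increases" or "decreases"; flat steps extend the current run.
    """
    runs, start, trend = [], 0, None
    for i in range(1, len(ys)):
        if ys[i] == ys[i - 1]:
            continue
        t = "increases" if ys[i] > ys[i - 1] else "decreases"
        if trend is not None and t != trend:
            runs.append((start, i - 1, trend))  # close the previous run
            start = i - 1
        trend = t
    if trend is not None:
        runs.append((start, len(ys) - 1, trend))
    return runs


def caption(feature, xs, ys, max_propositions):
    """Verbalize the most salient runs, one proposition per sentence."""
    runs = segment_trends(ys)
    # Salience: absolute change in the prediction across the run.
    salient = sorted(runs, key=lambda r: abs(ys[r[1]] - ys[r[0]]), reverse=True)
    kept = sorted(salient[:max_propositions])  # back to left-to-right order
    sentences = [
        f"As {feature} goes from {xs[s]} to {xs[e]}, the prediction {t}."
        for s, e, t in kept
    ]
    return kept, sentences


def verbal_load(sentences):
    """Load proxies: number of propositions and mean words per sentence."""
    if not sentences:
        return 0, 0.0
    words = sum(len(s.split()) for s in sentences)
    return len(sentences), words / len(sentences)


def direction_recall(ys, kept):
    """Share of rising/falling steps whose direction the caption states."""
    steps = [i for i in range(1, len(ys)) if ys[i] != ys[i - 1]]
    covered = {i for s, e, _ in kept for i in range(s + 1, e + 1)}
    return sum(i in covered for i in steps) / len(steps) if steps else 1.0


xs = [0, 10, 20, 30, 40]
ys = [0.0, 0.5, 0.1, 0.2, 0.0]  # made-up shape function for a feature "age"
kept, sentences = caption("age", xs, ys, max_propositions=2)
for s in sentences:
    print(s)
print(verbal_load(sentences), direction_recall(ys, kept))  # (2, 10.0) 0.5
```

Raising `max_propositions` to 4 verbalizes every run and lifts the recall proxy to 1.0 at the cost of four propositions, a toy version of the tension between verbal cognitive load and interpretability.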

In summary, we tackle the problem of improving the usability of XAI through a theory-driven integration of human factors. This exemplifies how an understanding of human reasoning can inform XAI design. First, we explicitly modelled a new requirement for XAI by identifying a human factor or usability issue (in our case, cognitive load) and studying its constituent components relevant to explanations (graphs and visual chunks, captions and verbal chunks). Second, our explicit mathematical definition allowed us to objectively simulate the outcome of providing for the new requirement. Third, beyond standard measures of explanation effectiveness, we defined new measures to evaluate explanations specifically with respect to the targeted explanation quality (in our case, cognitive load). Our three-step method of 1) mathematical modelling, 2) simulation, and 3) evaluation with users provides a theoretical framework to help researchers develop and evaluate human-centric XAI, and it can be applied to other factors such as plausibility or memorability. Our framework and empirical measurement instruments for cognitive load are a starting point for rigorous assessment of the human interpretability of explainable AI.