Reproducibility Meets Computational Complexity

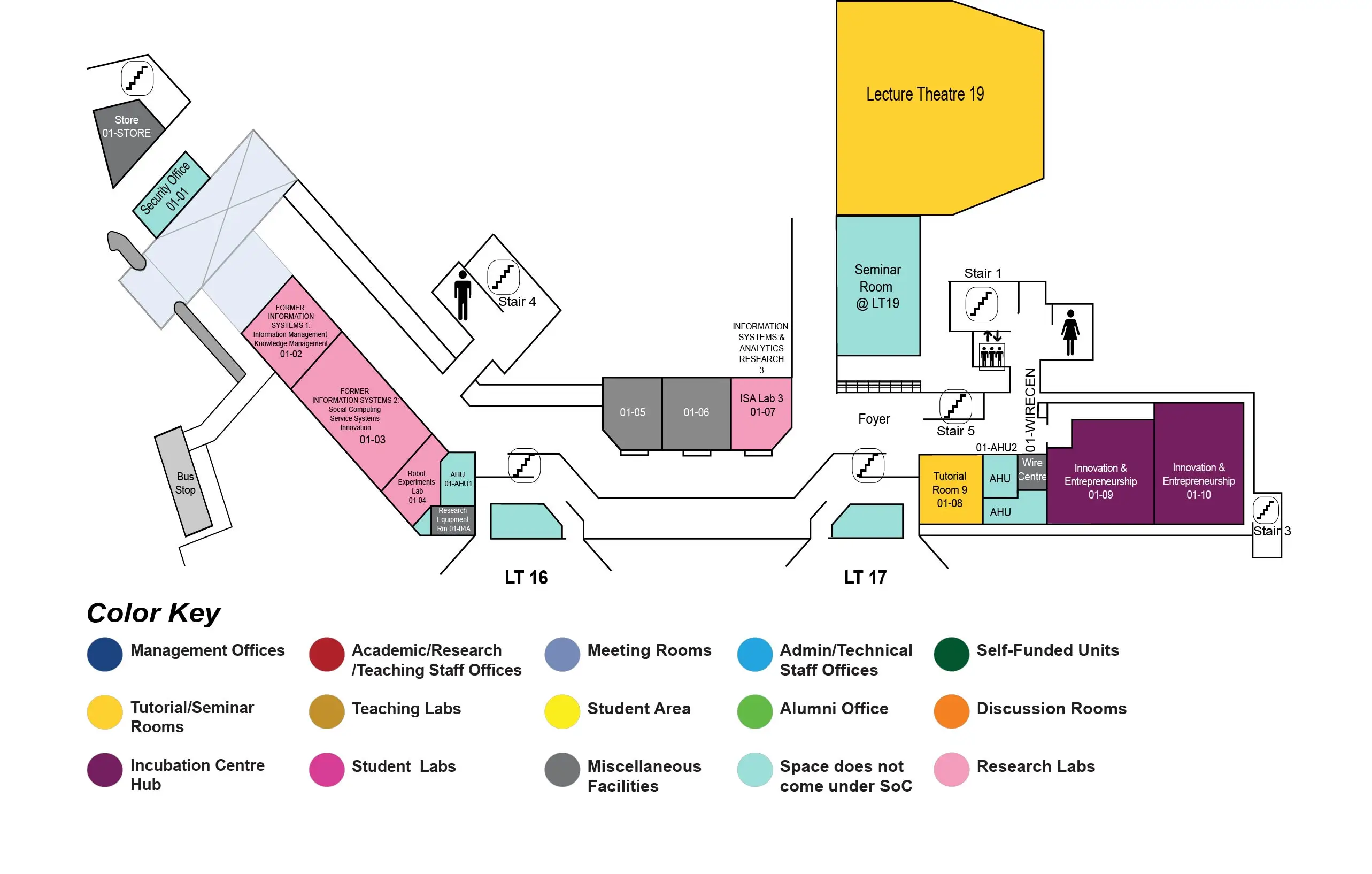

COM2 Level 1

SR@LT19 and via zoom (Hybrid)

Abstract:

Randomized algorithms play a central role in modern computing. For many computational tasks, there are randomized algorithms that are simple and more efficient than their deterministic counterparts. Moreover, for several application areas including computations over massive data sets, distributed computing, and cryptography, randomization is a necessity. Despite their wide applicability, a serious drawback of randomized computations, as opposed to the deterministic ones, is their {\em lack of reproducibility}: two different runs of the same randomized algorithm can produce two different valid outputs. Thus a significant question is: can we design randomized algorithms for important computational tasks that are also reproducible?

In this talk, I will introduce {\em pseudo-determinism}, a notion that formalizes reproducibility in randomized algorithms, and show how the task of designing pseudo-deterministic algorithms is fundamentally related to certain well-investigated issues in computational complexity theory. This is joint work with Peter Dixon, Aduri Pavan, and Jason Vander Woude.

Biodata:

Vinodchandran Variyam is a Professor in the School of Computing, University of Nebraska-Lincoln. Prof. Variyam is broadly interested in computer science topics wherever there is a fundamental efficiency concern. Concretely, his research spans multiple areas of core computer science topics including computational complexity theory, machine learning, and large data management. His contributions to complexity theory have played a significant role in advancing the state-of-the-art in several topics including derandomization, circuit complexity, space-limited computations, and Kolmogorov complexity.