Deep Spatial Understanding of Human and Animal Poses

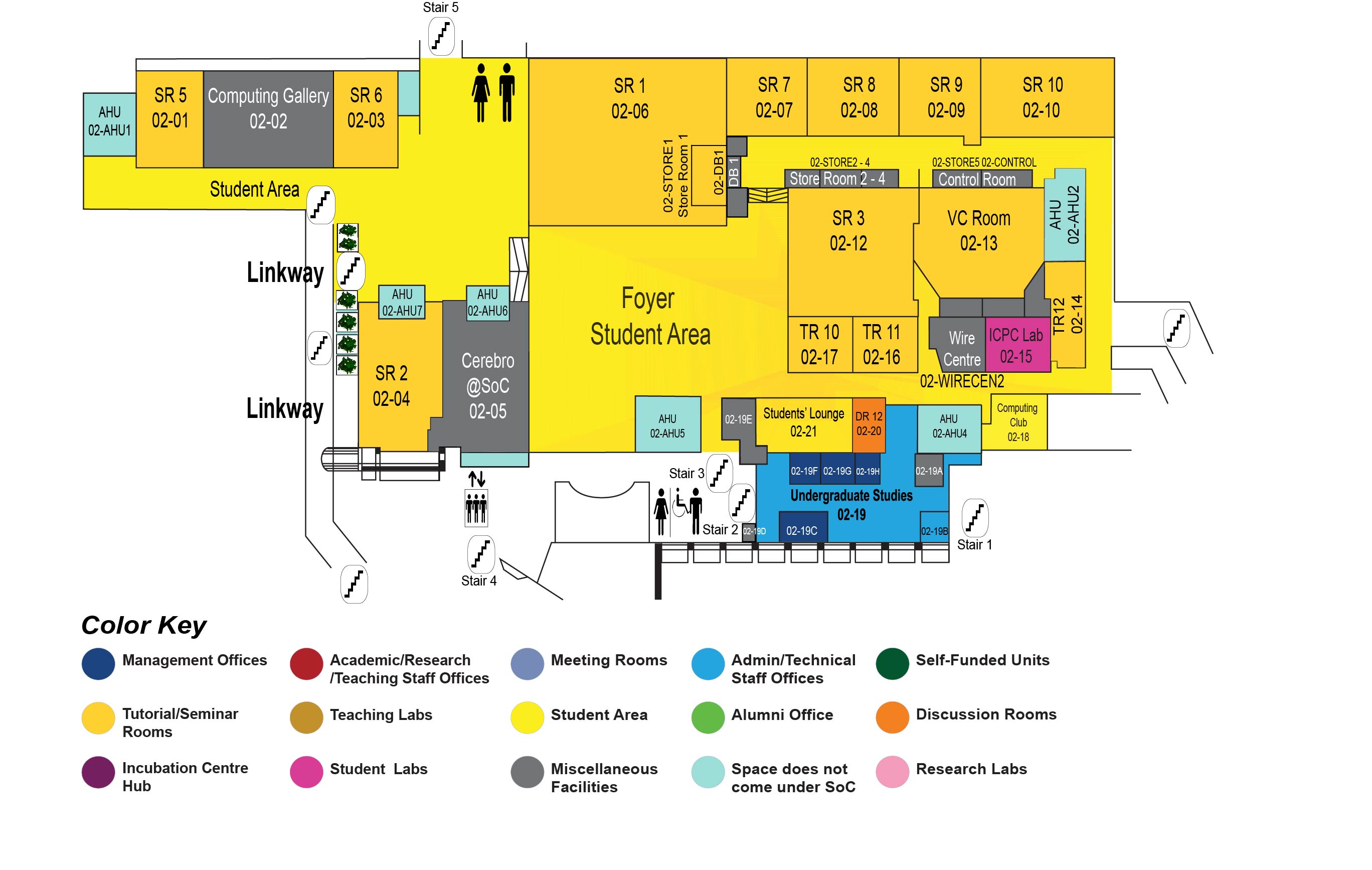

COM1 Level 2

SR1, COM1-02-06

Abstract:

In this thesis, we focus on understanding human and animal poses from a monocular image, which has wide applications in areas such as autonomous driving, virtual reality, surveillance, and zoology. We first tackle the 3D human pose estimation task, where we aim to locate semantic keypoints in 3D space from an input image. Existing deep learning based approaches generate a single 3D pose for each input. However, recovering 3D information from a single RGB image is an ill-posed inverse problem: because of depth ambiguity and occluded joints, several 3D poses can be consistent with the same image. In view of this, we design a mixture density network to generate multiple hypotheses for the inverse 2D-to-3D problem. In contrast to existing deep learning approaches, which minimize a mean squared error that corresponds to a unimodal Gaussian distribution, our method is able to generate multiple feasible 3D pose hypotheses based on a multimodal mixture of Gaussians. In addition to the ambiguity problem, another challenge in 3D human pose estimation is the lack of 3D annotations. It is difficult to obtain ground truth 2D-to-3D correspondences, especially for outdoor images. To overcome this, we further propose a weakly supervised approach to generate multiple 3D pose hypotheses.
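To make the contrast between a unimodal and a multimodal objective concrete, the following is a minimal sketch of the negative log-likelihood of a target under a mixture of isotropic Gaussians, which is the kind of loss a mixture density network is typically trained with. The function name `mixture_nll`, the isotropic covariance, and the toy one-dimensional values are assumptions made for illustration, not the network or loss used in the thesis.

```python
import math

def mixture_nll(alphas, means, sigmas, target):
    """Negative log-likelihood of `target` under a mixture of isotropic Gaussians.

    alphas: mixing weights (positive, summing to 1), one per component
    means:  one mean vector per component, each the same length as `target`
    sigmas: one standard deviation per component (isotropic covariance assumed)
    """
    d = len(target)
    log_terms = []
    for alpha, mu, sigma in zip(alphas, means, sigmas):
        sq_dist = sum((t - m) ** 2 for t, m in zip(target, mu))
        # Log-density of an isotropic d-dimensional Gaussian at `target`.
        log_gauss = (-0.5 * d * math.log(2 * math.pi)
                     - d * math.log(sigma)
                     - sq_dist / (2 * sigma ** 2))
        log_terms.append(math.log(alpha) + log_gauss)
    # Log-sum-exp over components, shifted by the maximum for numerical stability.
    peak = max(log_terms)
    return -(peak + math.log(sum(math.exp(v - peak) for v in log_terms)))

# Toy example: two equally weighted modes at 0 and 4 (hypothetical values).
alphas, means, sigmas = [0.5, 0.5], [[0.0], [4.0]], [0.5, 0.5]
loss_at_mode = mixture_nll(alphas, means, sigmas, [0.0])
loss_at_midpoint = mixture_nll(alphas, means, sigmas, [2.0])
```

In this toy setting a target at either mode receives a low loss, while the midpoint between the modes, which is what a single Gaussian fitted with mean squared error would predict, receives a much higher one.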

We then explore the human motion prediction task. This requires a higher-level understanding of human poses, since we forecast future poses based on current observations. The main challenge of this task is how to capture both the spatial and the temporal correlations of human motion effectively. To this end, we present a novel approach for human motion modeling based on convolutional neural networks (CNNs). The hierarchical structure of a CNN allows it to capture long-term correlations in the motion sequence better than existing RNN-based models.
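One way to see why a hierarchical convolutional stack can cover long time spans is to compute its receptive field, that is, how many input frames influence a single output of the top layer. The sketch below uses the standard receptive-field recurrence for stacked convolutions; the layer configurations are hypothetical and are not the architecture proposed in the thesis.

```python
def receptive_field(layers):
    """Number of input frames seen by one output of a stack of 1D convolutions.

    layers: list of (kernel_size, stride) pairs, ordered from input to output.
    """
    field, jump = 1, 1  # jump = distance in input frames between adjacent outputs
    for kernel, stride in layers:
        field += (kernel - 1) * jump
        jump *= stride
    return field

# Hypothetical stacks of three layers with kernel size 3.
stride_one = receptive_field([(3, 1), (3, 1), (3, 1)])
stride_two = receptive_field([(3, 2), (3, 2), (3, 2)])
```

With stride 1 the three layers cover 7 frames, and with stride 2 they cover 15, so the temporal span grows quickly with depth while each layer stays small.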

The next task we have tackled is animal pose estimation, which is relatively under-explored but equally important compared to human pose estimation. The main challenge for this task is the lack of well-labeled animal pose data. Currently, not many animal images with 2D keypoint annotations exist and it is almost impossible to collect 3D pose annotations for animals in the wild. In view of this, we first propose an unsupervised domain adaptation approach for 2D animal pose estimation. We transfer knowledge from the labeled synthetic domain to the unlabeled real domain by making use of pseudo labels for the real images. To solve the noisy pseudo label problem, we introduce a pseudo label updating strategy, which gradually updates the pseudo labels with more accurate ones during training.
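The pseudo label updating idea can be illustrated with a small sketch. The abstract does not state the actual update rule, so the criterion used here (replace a keypoint's pseudo label when the current prediction is more confident than the stored label and exceeds a threshold) is purely an assumption, as are the function name `update_pseudo_labels` and the data layout.

```python
def update_pseudo_labels(pseudo, predictions, threshold):
    """Return refreshed pseudo labels for one image.

    pseudo, predictions: lists of ((x, y), confidence), one entry per keypoint.
    threshold: minimum confidence a prediction needs to replace a stored label.
    NOTE: this replacement criterion is a hypothetical stand-in, not the
    strategy described in the thesis.
    """
    updated = []
    for (old_xy, old_conf), (new_xy, new_conf) in zip(pseudo, predictions):
        if new_conf >= threshold and new_conf > old_conf:
            updated.append((new_xy, new_conf))  # adopt the more confident prediction
        else:
            updated.append((old_xy, old_conf))  # keep the existing pseudo label
    return updated

# Two keypoints with made-up coordinates and confidences.
pseudo = [((10, 12), 0.40), ((30, 35), 0.90)]
predictions = [((11, 13), 0.80), ((50, 60), 0.60)]
refreshed = update_pseudo_labels(pseudo, predictions, threshold=0.7)
```

Here the first keypoint is replaced by the more confident prediction and the second keeps its original label. Calling such a function at intervals during training would swap noisy labels for better ones gradually rather than all at once.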

We further work on 3D animal pose and shape estimation, where the 3D animal pose and shape are recovered simultaneously from an input image. Most of the existing works rely on the SMAL model since the low-dimensional SMAL parameters make it easier for deep networks to learn the high-dimensional animal meshes. However, the SMAL model is learned from scans of toy animals with limited pose and shape variations, and thus may not be able to represent highly varying real animals well. To mitigate this problem, we combine the SMAL-based representation with a vertex-based representation and regress 3D animal meshes in a coarse-to-fine manner. This combination makes our approach benefit from both model-based and model-free representations.