Fundamentals of Stream Data Processing with Apache Beam and Google Cloud Dataflow

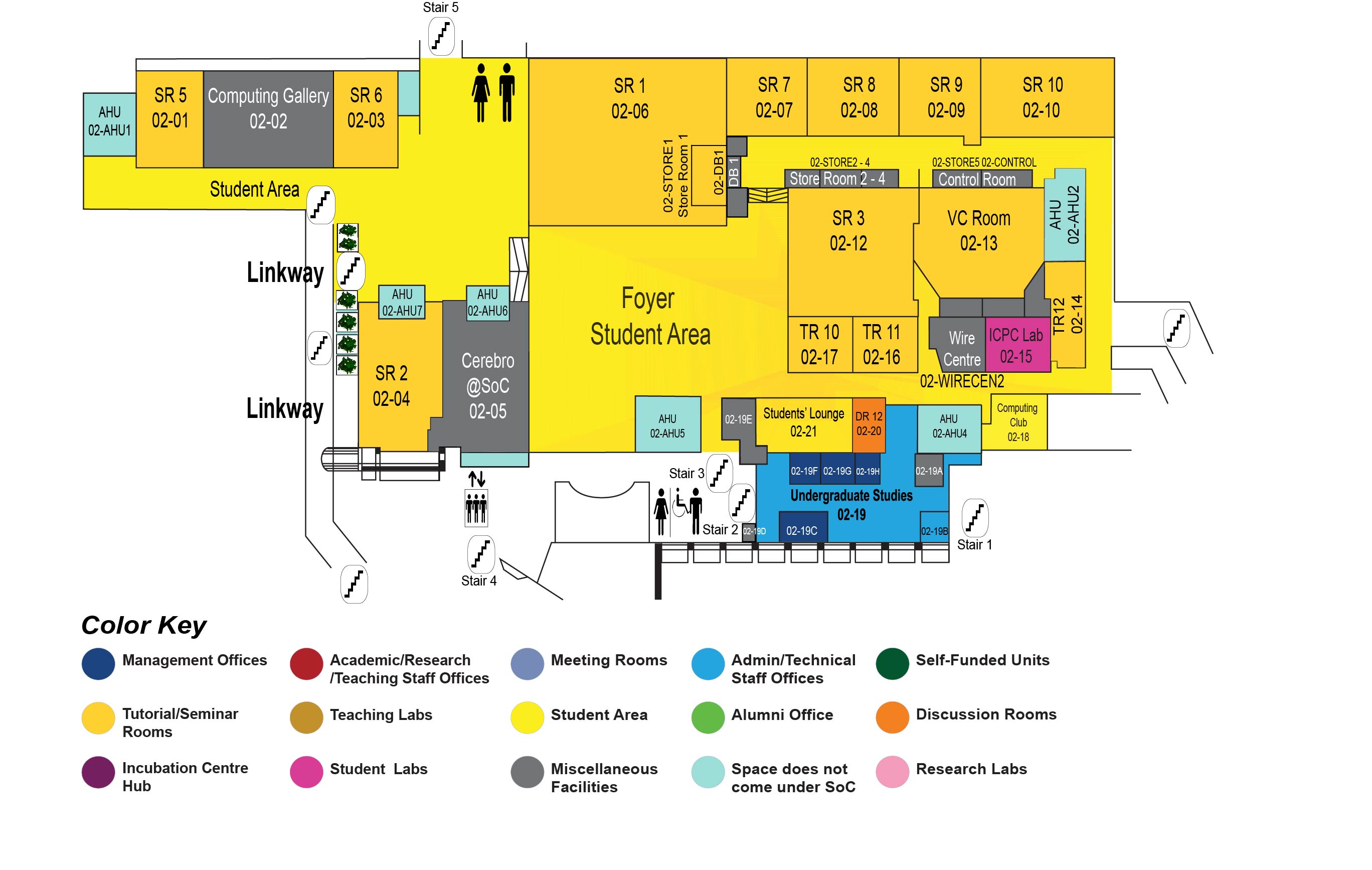

COM1 Level 2

Video Conference Room, COM1-02-13

Abstract:

This talk presents a unified model for streaming and batch data processing. We start from the history of large-scale data processing systems at Google and their evolution towards higher levels of abstraction culminating in the Beam programming model. We delve into the fundamentals of out-of-order unbounded data stream processing and we show how Beam's powerful abstractions for reasoning about time greatly simplify this complex task. Beam provides a model that allows developers to focus on the four important questions that must be answered by any stream processing pipeline:

- *What* results are being calculated,

- *Where* in event time they are calculated,

- *When* in processing time they are materialized, and

- *How* refinements of results relate.

We discuss how these key questions and abstractions enable important properties of correctness, expressive power, composability, flexibility, and modularity. Furthermore, by cleanly separating these questions from runtime characteristics, Beam programs become portable across multiple runtime environments, both proprietary, such as Google Cloud Dataflow, and open-source, such as Flink, Spark, Samza, etc. We close with a presentation of the execution model and show how the Google Cloud Dataflow service optimizes and orchestrates the distributed execution and autoscaling of a Beam pipeline.

Biodata:

Cosmin Arad is a staff software engineer at Google where he focuses on large-scale data analytics systems and infrastructure for internal and external users. Cosmin spent more than four years as the technical lead for autotuning, autoscaling compute resources, elasticity and dynamic work rebalancing in Google's Data Processing Languages & Systems group, which is responsible for Google's contributions to Apache Beam, Google Cloud Dataflow, and internal data processing tools like Flume, MapReduce, and MillWheel.

Before joining Google, Cosmin was a researcher in the area of Distributed Computing at the Swedish Institute of Computer Science. He received his PhD degree in 2013 from KTH, The Royal Institute of Technology in Stockholm, Sweden. Cosmin is broadly interested in distributed systems, distributed algorithms, programming models for distributed computing, data-intensive computing, scalable storage, fault-tolerance, concurrency, consistency models, software design and programming languages.