Multi-View Learning Using Dependency Models For Medical Decision Making

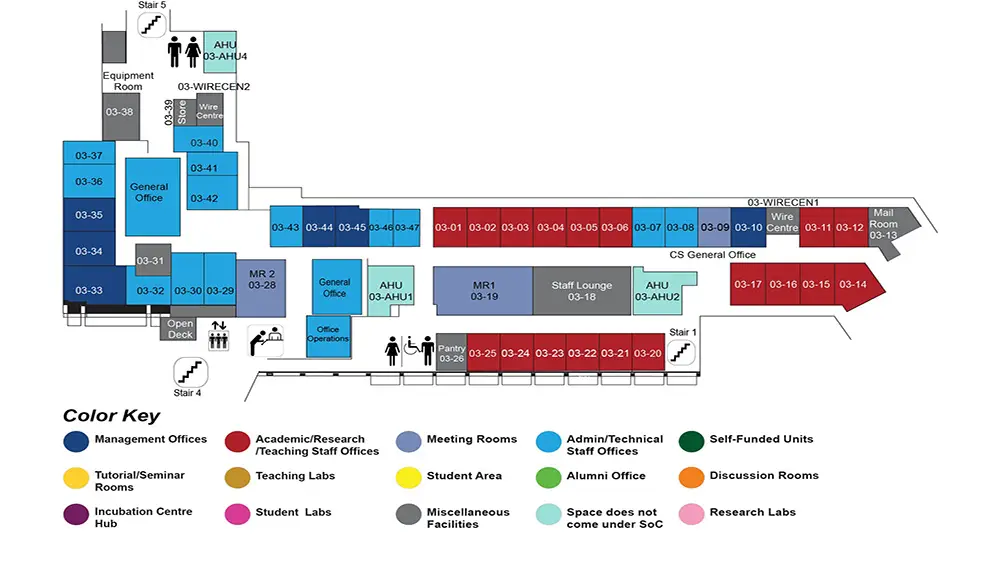

COM1 Level 3

MR1, COM1-03-19

Abstract:

Data fusion involves the integration of multiple sources of data, possibly collected in different modalities, that describe the same entity/phenomenon. Groups of features extracted from each data source present distinct views of information about the entity. Though the views may differ, combining the complementary information provides a fuller picture and better insights in analytic tasks. Moreover, there are statistical dependencies between the views as they originate from the same entity.

Dementia is a broad spectrum of age-related neurodegenerative disorders that severely affect a person's cognitive capabilities, rendering them incapable of performing activities of daily living. Early detection of this incurable disease allows clinicians to administer timely interventions that might delay its progression. Multiple procedures, such as brain imaging, cognitive tests, etc., are used to obtain bio-/clinical markers for the diagnosis of dementia. Various other factors from the individual's background, including genotype, demographics to lifestyle, are believed to be linked to dementia.

The state-of-the-art multi-view dementia models exploit the correlations between the markers to enable supervised predictions for disease detection, prognosis, etc. However, probabilistic or other types of dependencies that can explain the interactions and significance of several parameters and factors relevant to the medical domain need to be studied for better interpretation of the results by clinicians. A fusion approach can learn the correlations constrained with domain-driven causal considerations shared among heterogeneous marker and background views and support multi-task predictions in dementia management.

We propose a hierarchical probabilistic graphical model that incorporates the semantics of correlational and causal dependence, uncertainty, and complementarity in heterogeneous multi-view medical data. Our disease model considers two generic types of views: contributors (e.g., genotypic variables) that 'contribute' to the cognitive condition, and indicators (e.g., diagnostic imaging) that 'indicate' the presence of dementia.

We conduct an exploratory analysis to show the inadequacy of a single diagnostic modality and examine the challenges in multi-view learning from heterogeneous biomedical data for the tasks in dementia management. We contribute three incremental approaches to the multi-view interpretation of dementia. First, we design a two-level latent space modeling approach to fuse multiple views of imaging markers at higher levels of abstraction that include view-specific and shared dependencies. Second, we present a methodology to incorporate domain knowledge into the definition of dependencies between views of markers, background data, and the disease. As a step towards 'Explainable Artificial Intelligence (XAI),' we visualize and interpret the dependencies as contributing to or indicating the disease. Finally, guided by domain knowledge, we propose a unifying approach that expresses multi-view dependencies as results of the latent consensus between concepts extracted from data. For this, we develop a hierarchical model of the internal health state of an individual that captures the structural, statistical, and probabilistic dependencies among the views.

Promising empirical results on benchmark and real-world datasets show that our work enables multi-view learning of subject-related information to support clinical decision making. Our multi-view multi-task disease model simultaneously predicts the dementia stage and severity with improved performance over the state-of-the-art and better visualization of view and feature-level interactions.