Spoken Dialogue Comprehension and Summarization

AS6 Level 5

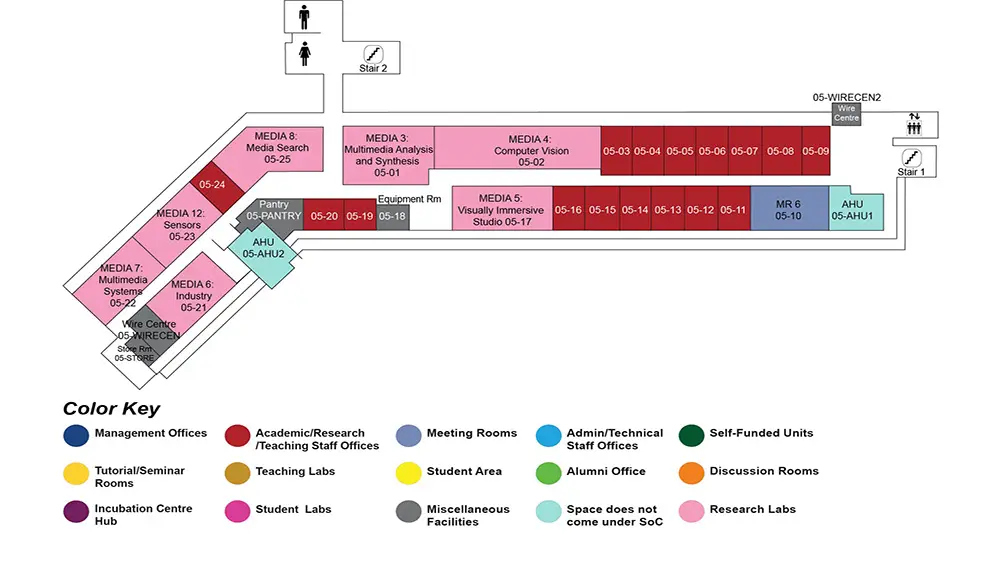

MR6, AS6-05-10

Abstract:

Despite avid interest in, and development of, dialogue systems, many technical challenges must still be addressed before conversational AI technology can be widely and easily adopted. In this work, we share some of our recent efforts to fill this technological gap. Much work in natural language processing has focused on developing computational models for retrieving, extracting, and modeling documents, yet such approaches do not always port readily to dialogue processing, because the linguistic characteristics of documents and spoken dialogues are intrinsically distinct. Document passages are one-way communications from writer to reader, whereas spoken conversations are dynamic information exchanges between at least two speakers. Because conversation takes place through a far more spontaneous and effortless medium (talking instead of writing), it exhibits natural and common yet disfluent characteristics such as thinking aloud, false starts, speaker interruptions, self-contradictions, backchanneling, and topic drift. Key information about a given topic often lies at the sub-utterance level, scattered across multiple utterances and turns from different speakers, which leads to low information density, diffuse topic coverage, and intra-utterance topic shifts. These challenges are further compounded by the lack of large-scale, well-annotated linguistic resources for spoken conversations. We demonstrate how linguistically strategic data augmentation frameworks can enable end-to-end neural modeling of dialogue comprehension. In addition, we show how the proposed neural architectures, which account for dialogue turns and topic segments in spoken conversations, can enhance dialogue comprehension and summarization, enabling targeted applications in healthcare, journalism, and education.

Biodata:

Nancy F. Chen received her Ph.D. from MIT and Harvard in 2011. She worked at MIT Lincoln Laboratory on her Ph.D. research in multilingual speech processing. She currently leads research efforts in conversational AI, with applications to healthcare, education, journalism, and finance, at the Institute for Infocomm Research (I2R), A*STAR. Dr. Chen led a cross-continent team for low-resource spoken language processing that was one of the top performers in the NIST Open Keyword Search Evaluations (2013-2016), funded by the IARPA Babel program. Dr. Chen is an IEEE Senior Member, an elected member of the IEEE Speech and Language Technical Committee (2016-2018, 2019-2021), and an associate editor of IEEE Signal Processing Letters (2019-2021), and she was a guest editor of the special issue on "End-to-End Speech and Language Processing" in the IEEE Journal of Selected Topics in Signal Processing (2017). She has received numerous awards, including the Best Paper Award at APSIPA ASC (2016), the Singapore MOE Outstanding Mentor Award (2012), the Microsoft-sponsored IEEE Spoken Language Processing Grant (2011), and the NIH Ruth L. Kirschstein National Research Award (2004-2008).

In addition to her academic endeavors, Dr. Chen has consulted for companies ranging from startups to multinational corporations in the areas of emotional intelligence (Cogito Health), speech recognition (Vlingo, acquired by Nuance), and defense and aerospace (BAE Systems).