Faults at Scale: What New Bugs Live in the Cloud and How to Exterminate Them

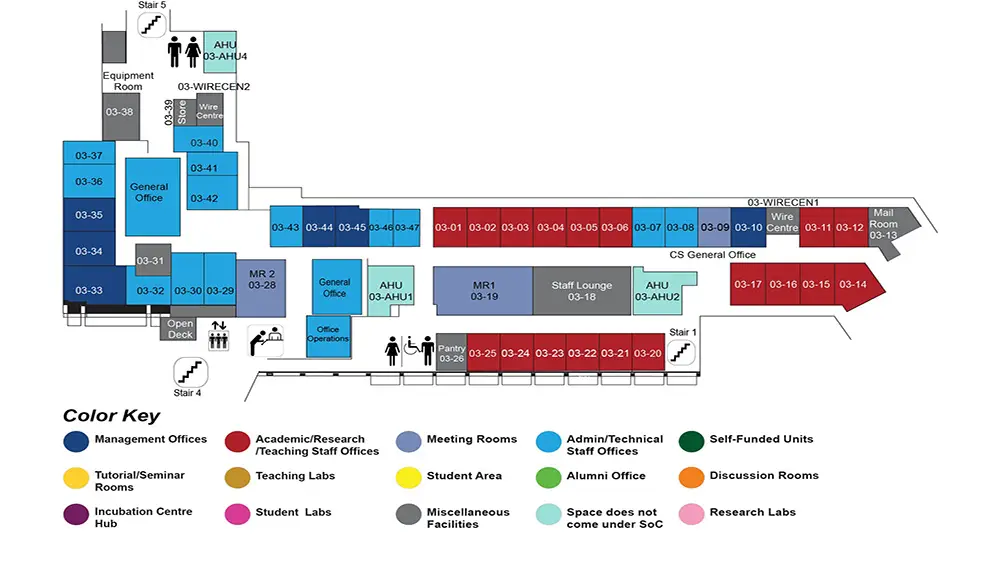

COM1 Level 3

MR1, COM1-03-19

Abstract:

As more data and computation move from local to cloud environments, datacenter distributed systems have become a dominant backbone for many modern applications. However, the complexity of cloud-scale hardware and software ecosystems has outpaced existing testing, debugging, and verification tools.

I will describe several classes of new bugs that surface in large-scale datacenter distributed systems: (1) distributed concurrency bugs, caused by non-deterministic timings of distributed events such as message arrivals as well as multiple crashes and reboots; (2) tail-performance faults, which surface in the presence of "limping" hardware or heavy contention and can cause cascades of performance failures; and (3) scalability faults, latent scale-dependent faults that typically surface only in large-scale deployments (100+ nodes) and not necessarily in small- or medium-scale deployments. These findings are based on our long-running, large-scale studies of cloud bugs and outages (3000+ bugs and 500+ outages). I will present our various approaches to combating these bugs and faults.

Biodata:

Haryadi S. Gunawi is an Associate Professor in the Department of Computer Science at the University of Chicago, where he leads the UCARE research group (UChicago systems research on Availability, Reliability, and Efficiency). He received his Ph.D. in Computer Science from the University of Wisconsin-Madison in 2009. He was a postdoctoral fellow at the University of California, Berkeley from 2010 to 2012. His current research focuses on cloud computing reliability and new storage technology. He has won numerous awards, including an NSF CAREER Award, an NSF Computing Innovation Fellowship, a Google Faculty Research Award, NetApp Faculty Fellowships, and an Honorable Mention for the 2009 ACM Doctoral Dissertation Award.

His research focuses on improving the dependability of storage and cloud computing systems in the context of (1) performance stability, wherein he is interested in building storage and distributed systems that are robust to latency tails and "limping" hardware; (2) reliability and scalability, wherein he is interested in combating concurrency and scalability bugs in cloud-scale distributed systems; and (3) interactions of machine learning and systems, specifically how machine learning techniques can address operating and storage system problems.