Bandit-Style Algorithms for Wireless Network Selection

Dr Tan Kian Lee, Tan Sri Runme Shaw Senior Professor, School of Computing

COM1 Level 3

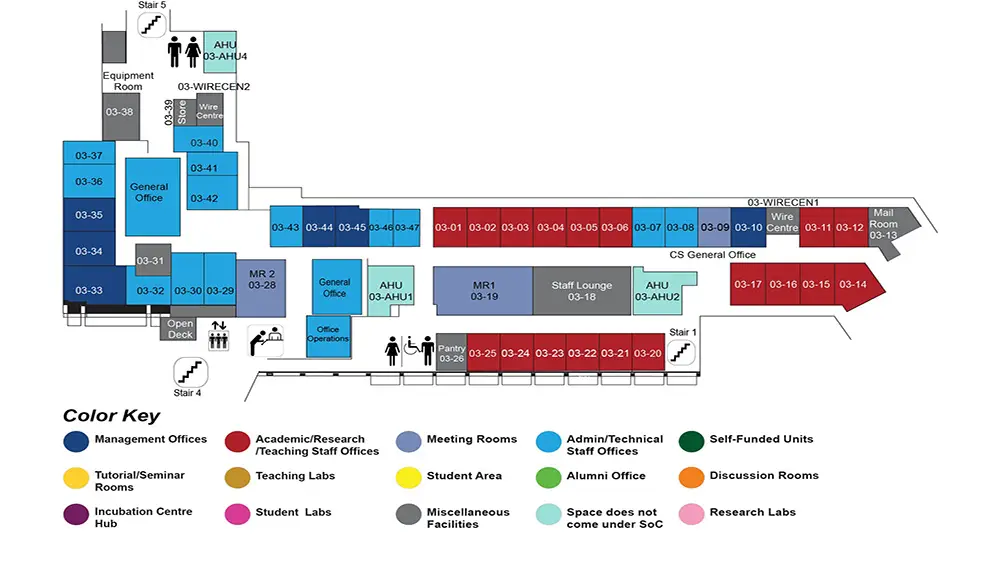

MR1, COM1-03-19

Abstract:

Multi-armed bandit problem has been studied in several settings and various bandit algorithms, with impressive theoretical properties, have been proposed. EXP3 is a leading bandit algorithm for the adversarial bandit setting, i.e., a setting where an adversary has control over the rewards from the actions. It is fully decentralized and is regret-minimizing, i.e., as time elapses it performs nearly as well as always selecting the best fixed action in hindsight. When applied in a multiplayer setting, it is known to converge to a Nash equilibrium. These good properties suggest that it should be an excellent solution for distributed resource selection problems. However, its real-world performance lags far behind.

We develop bandit-style algorithms that perform significantly better in practice, without compromising on the good theoretical properties of existing bandit algorithms. We demonstrate that multi-armed bandit algorithms can play an important role in distributed resource selection problems, when practical concerns are addressed. As a motivating example, we focus on the wireless network selection problem. Mobile devices often have several wireless networks at their disposal. While choosing the right one is vital for good performance, it is non-trivial. A decentralized solution remains a challenge. Given that these devices operate in a dynamic environment, it is more challenging to achieve an optimal solution.

First, we propose Smart EXP3, a fully decentralized bandit-style algorithm, with good theoretical and practical performance. We prove that it is regret-minimizing, and retains the convergence property of EXP3. We give an upper bound on the number of network switches. We rely on simulation using synthetic data, trace-driven simulation, controlled experiments and in-the-wild experiments to thoroughly evaluate Smart EXP3. Results show that it performs significantly better than EXP3 in practice and allows mobile devices to effectively learn and adapt in a dynamic wireless network environment, by relying solely on their own observations. In real-world experiments, it can achieve 18% faster download when compared to naive greedy.

Second, we develop Co-Bandit, that allows devices to occasionally share their observations and forward feedback received from neighbors; hence, feedback may be received with a delay. Mobile devices perform network selection based on their own observations and feedback from neighbors. Cooperation help them speed up each other's rate of learning. We prove that Co-Bandit is regret-minimizing and retains the convergence property of multiplicative weight update algorithms with full information. Through simulation, we show that a very small amount of information, even with a delay, is adequate to nudge each other to select the right network and yield significantly faster stabilization at the optimal state.

Overall, we contribute a set of features that can improve the practical performance of bandit algorithms, without affecting their good theoretical properties.