Improving Robustness and Predictive Uncertainty Estimation of DNN Classifiers

Dr Lee Mong Li, Professor, School of Computing

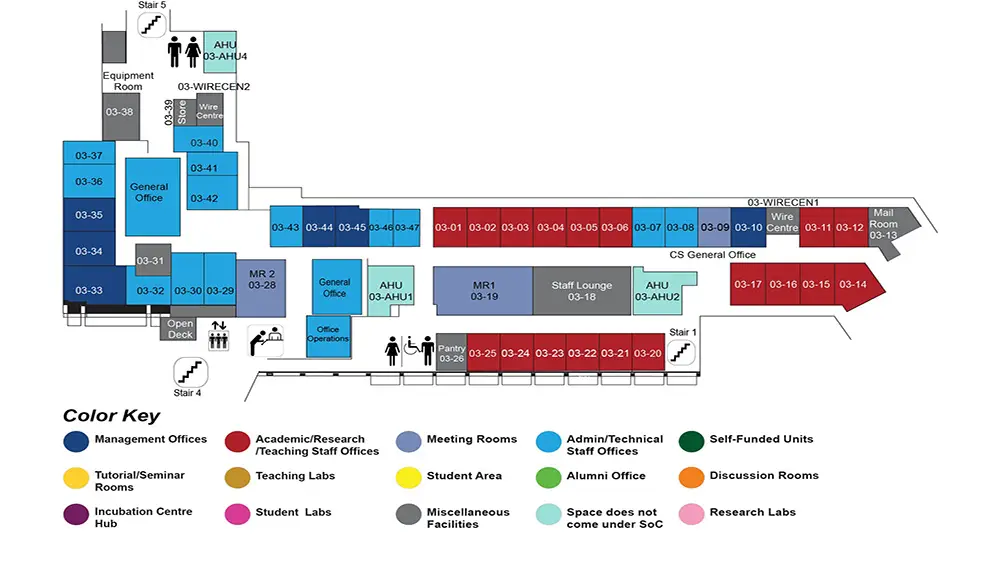

COM1 Level 3

MR1, COM1-03-19

Abstract:

A machine learning model needs to be robust and interpretable, and it should be able to warn users when it is not confident about its predictions. However, despite the remarkable success of deep neural networks (DNNs), their vulnerability to adversarial attacks and their overconfident predictions raise the question of how reliably these models can be used. This thesis aims to address these issues to improve the reliability of DNNs in real-world applications.

Existing approaches focus either on achieving robustness or on improving predictive uncertainty estimation. Defenses against adversarial attacks typically provide robustness only to a specific perturbation type, such as perturbations within a small $\ell_p$ bound, and offer no guarantees against other attack types. Further, current state-of-the-art adversarially trained defense mechanisms are computationally expensive to train, because they must generate strong adversarial examples at each training iteration.

In contrast to standard DNNs, RBF-based DNNs are typically robust to adversarial examples, but at the cost of lower accuracy. We first study the generative properties of a deep RBF network called the Normal Similarity Network (NSN). We present a sampling algorithm, NSN-Gen, that generates and reconstructs images from any layer of our proposed NSN. We show that NSN-Gen can be applied to NSN for several computer vision applications, such as image generation, styling, and reconstruction of occluded images.

We adopt the sampling algorithm of NSN to propose a novel defense model, called RBF-CNN, that can be trained efficiently to improve robustness against any $\ell_{p\geq 1}$ perturbation. Instead of using a deep RBF network, RBF-CNN uses a layer of radial basis function kernels and a reconstruction layer to mitigate adversarial noise while maintaining high accuracy on clean test data. We prove that the match scores obtained from the RBF kernels are bounded for any $\ell_{p\geq 1}$ perturbation. We then show that, for any such perturbation, the RBF and reconstruction layers can mitigate the adversarial noise.
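The abstract does not specify the kernel or how the reconstruction layer is built, so the following is only a minimal, hypothetical sketch under stated assumptions. It assumes a Gaussian RBF kernel $k(x,c)=\exp(-\lVert x-c\rVert_2^2/(2\sigma^2))$ over flattened inputs and a reconstruction that replaces the input with the kernel-weighted mean of the centres. The names `match_scores`, `reconstruct`, `lp_norm` and `score_change_bound` are illustrative, not taken from the thesis. The sketch also shows one textbook way match scores can be bounded under any $\ell_{p\geq 1}$ perturbation $\delta$ of a $d$-dimensional input: a Gaussian kernel is $1/(\sigma\sqrt{e})$-Lipschitz in $\ell_2$, and $\lVert\delta\rVert_2 \le d^{\max(0,\,1/2-1/p)}\lVert\delta\rVert_p$ for $p\geq 1$, so $|k(x+\delta,c)-k(x,c)| \le d^{\max(0,\,1/2-1/p)}\lVert\delta\rVert_p/(\sigma\sqrt{e})$. This is a generic bound, not the proof given in the thesis.

```python
import math
import random

# Hypothetical sketch (not the thesis implementation): assumes a Gaussian RBF
# kernel over flattened inputs and a kernel-weighted reconstruction.


def match_scores(x, centres, sigma):
    """Gaussian RBF match score k(x, c) = exp(-||x - c||_2^2 / (2 sigma^2)) per centre."""
    return [
        math.exp(-sum((xi - ci) ** 2 for xi, ci in zip(x, c)) / (2.0 * sigma ** 2))
        for c in centres
    ]


def reconstruct(x, centres, sigma):
    """Replace x by the mean of the centres weighted by normalised match scores.

    Weights are computed in the log domain (max-subtracted) so that inputs far
    from every centre do not underflow to an all-zero weight vector.
    """
    logits = [
        -sum((xi - ci) ** 2 for xi, ci in zip(x, c)) / (2.0 * sigma ** 2)
        for c in centres
    ]
    m = max(logits)
    weights = [math.exp(l - m) for l in logits]
    total = sum(weights)
    return [sum(w * c[j] for w, c in zip(weights, centres)) / total for j in range(len(x))]


def lp_norm(v, p):
    """l_p norm of a vector; p may be math.inf."""
    if math.isinf(p):
        return max(abs(t) for t in v)
    return sum(abs(t) ** p for t in v) ** (1.0 / p)


def score_change_bound(delta, sigma, p):
    """Upper bound on |k(x + delta, c) - k(x, c)| for all x, c and p >= 1.

    Uses ||delta||_2 <= d^{max(0, 1/2 - 1/p)} ||delta||_p and the l_2 Lipschitz
    constant 1 / (sigma * sqrt(e)) of the Gaussian kernel.
    """
    d = len(delta)
    inv_p = 0.0 if math.isinf(p) else 1.0 / p
    l2_upper = lp_norm(delta, p) * d ** max(0.0, 0.5 - inv_p)
    return l2_upper / (sigma * math.sqrt(math.e))


if __name__ == "__main__":
    random.seed(0)
    dim, n_centres, sigma = 16, 5, 1.0
    centres = [[random.uniform(0, 1) for _ in range(dim)] for _ in range(n_centres)]
    x = [random.uniform(0, 1) for _ in range(dim)]
    delta = [random.uniform(-0.05, 0.05) for _ in range(dim)]
    x_adv = [a + b for a, b in zip(x, delta)]
    clean = match_scores(x, centres, sigma)
    adv = match_scores(x_adv, centres, sigma)
    worst = max(abs(a - b) for a, b in zip(clean, adv))
    for p in (1, 2, math.inf):
        # Each line should print True: the observed change never exceeds the bound.
        print(p, worst <= score_change_bound(delta, sigma, p))
```

In this sketch the reconstruction is always a convex combination of the centres, which is one plausible way such a layer could discard off-manifold noise; whether RBF-CNN's reconstruction layer works this way is an assumption, not something the abstract states.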

Our RBF-CNNs are found to be robust against small $\ell_1$, $\ell_2$ and $\ell_{\infty}$ perturbations on MNIST and CIFAR10. RBF-CNN outperforms the state-of-the-art Madry defense against $\ell_{\infty}$-bounded attacks in terms of robust accuracy. In terms of time efficiency, the best RBF-CNN models can be trained more than 4.5x and 12x faster than the adversarially trained models on MNIST and CIFAR10, respectively.

While a robust classifier can defend against adversarial attacks, it still tends to predict with high confidence, even when it misclassifies an input or when the input comes from a different, unknown distribution. To address this, we aim to improve the predictive uncertainty estimation of RBF-CNNs and to identify the source of their uncertainty.