Quality Mesh Generation on GPU

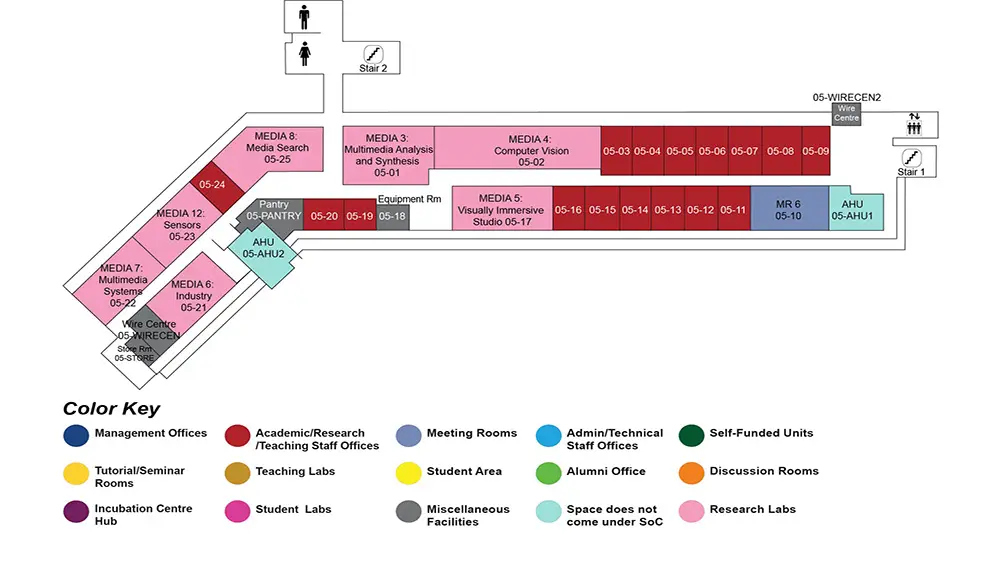

AS6 Level 5

MR6, AS6-05-10

Abstract:

Mesh generation is one important computation step in many engineering and scientific applications such as finite element, interpolation, GIS, path planning, etc. The input to mesh generation is usually a set of points P, a set of line segments L where each is formed with endpoints in P, and a set of faces where each has boundaries defined with line segments in L. These sets are the abstraction of important features of the domain to generate an output mesh conforming to them and with the augmentation of new points, called Steiner points, for subsequent processing. In general, output has to meet some quality measures such as no small angles in triangles of the mesh, the aspect ratios of the triangles or tetrahedra of the mesh are to be bounded etc. And, it is desirable not to use excessively many Steiner points, as a large output mesh means in general a long computation time needed for subsequent processing.

There are good algorithms and software developed in the past 30 years to address mesh generation in 2D and 3D. Notably, the Triangle software by Shewchuk, the TetGen software by Hang, and the CGAL software library. In 2D, the input is termed planar straight line graph (PSLG), while in 3D, piecewise linear complex (PLC). These algorithms usually add Steiner point one by one to refine a mesh until a quality one is reached. However, the increase in the input size can lead such software to take close to hours to complete, even with the most powerful PC available to date. This is undesirable to many, especially interactive, applications. We thus were curious what might be possible with the inexpensive, parallel computing power of GPU that could launch thousands of threads to concurrently refine a mesh to a quality one.

Our review of prior work shows that there was no particularly successful attempt in adapting GPU to compute quality meshes. Though there are reports on such works, for the past 15 years of flourishing development in GPU, none have provided convincing evidence of correct answer nor good performance for mesh generation with PSLG or PLC. We thus started exploring GPU for mesh generation a few years ago. It was clear from the early days of this research that a good GPU algorithm would not be a direct porting from a CPU algorithm. In particular, it is non-obvious on how to achieve regularized work and localized data computation that are important in exploiting the potential of GPU hardware. At a glance, one may attempt refining a mesh by simply inserting Steiner points concurrently with thousands of GPU threads to gain good speedup. This, however, is problematic as independent, concurrent and uncoordinated insertions can lead to redundant ones and also to cast serious doubt on whether the approach can ever terminate in finite time.

With the above review and challenges in mind, we started the research with an in-depth study on the 2D Delaunay refining problem. We learn that the Triangle software is the fastest CPU Delaunay mesh generator which partially unifies the algorithms of Ruppert and of Chew to produce the quality mesh with no angle smaller than an input angle theta. In Ruppert's algorithm, one needs to compute all the segments that are encroached by a point (i.e. the point is inside the circumcircles of the line segments), while in Chew's algorithm, one needs to search for all points encroaching a segment. Both types of encroachment would be inefficient when done naively with GPU threads due to unbalance workload among them. Our effort has found a way to avoid unbalance workload by resolving all encroachments indirectly through Delaunay property coupled with a so-called flip-flop algorithm. Our implementation is shown to run from a few times to an order of magnitude faster than the Triangle software. It is also proven to terminate with a finite output size for an input PSLG with no angle small than 60 degrees and theta less or equal to 20.7 degrees. In addition, we notice meshes generated by our algorithm are of similar sizes to those by Triangle.

With the good result on the 2D refinement with GPU, we moved on to examine 3D refinement problem. In 3D, we learned that the so-called constrained Delaunay Triangulation (CDT) of a given PLC may not exist unless all segments of the PLC are strongly Delaunay. One can do so with Steiner points added to line segments to generate a CDT efficiently. The problem thus becomes how fast one could generate a quality CDT from a given CDT. Our review of prior work shows that TetGen is the best but still can take a long time to complete its computation. This provided good justification to once again explore the use of GPU. The obvious challenge is to realize the insertion of multiple Steiner points concurrently and efficiently. But, the useful mechanism of the flip-flop algorithm in 2D is no longer workable in 3D as parallel flipping is not known to reach all possible triangulations. Our effort has found a way to utilize thousands of GPU threads to concurrently compute so-called cavities of varying sizes while taking good care of workload balancing to then add multiple Steiner points to realize a good speedup. Its implementation shows that it can be an order of magnitude faster than TetGen while using similar amounts of Steiner points to produce triangulations of comparable good quality and with the guarantee of termination.

With the successes and experiences gained from the study of the above two problems in 2D and 3D, we are ready to investigate other refinement problems. One such problem comes to our attention is the so-called approximate Delaunay refinement problem implemented in the CGAL software. The output mesh for this problem is not strictly conforming to the input PLC. Rather, it is a Delaunay triangulation that approximates the input domain boundary by a new boundary triangulation using the notion of restricted Delaunay triangulation. This means that all input points are represented by mesh vertices, all input line segments are approximated by sequences of mesh edges, and all input faces (surfaces) are approximated by the union of mesh triangles, with some tolerance. We are curious how to address this problem using GPU and what kind of speedup is possible when compared to CGAL running in CPU. We look forward to developing a good GPU software for this problem.