Signal processing methods for sound recognition

AS6 Level 5

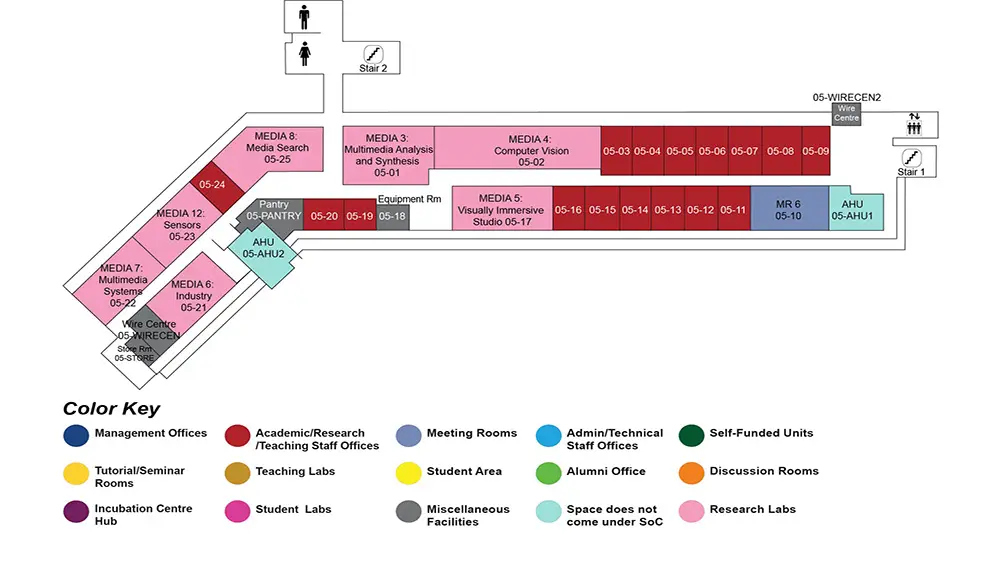

MR6, AS6-05-10

Abstract:

Audio analysis, also called machine listening, involves the development of algorithms capable of extracting meaningful information from audio signals such as speech, music, or environmental sounds. Sound recognition, also called sound event detection, is a core problem in machine listening and remains an open problem when multiple overlapping sounds must be recognized in complex, noisy environments. Applications of sound recognition are numerous, including smart homes and smart cities, ambient assisted living, biodiversity assessment, security and surveillance, and audio archive management. In this talk I will present current research on methods for sound recognition in complex acoustic environments, using elements from signal processing and machine learning theory. The talk will also cover ongoing research community efforts for public evaluation of sound recognition methods, and will discuss the limitations of current audio analysis technologies and promising directions for future machine listening research.

Biodata:

Emmanouil Benetos is Senior Lecturer and Royal Academy of Engineering Research Fellow at the School of Electronic Engineering and Computer Science of Queen Mary University of London (QMUL) and Turing Fellow at The Alan Turing Institute, UK. He holds a BSc (2005) and MSc (2007) in Informatics from the Aristotle University of Thessaloniki, Greece. After receiving his PhD in Electronic Engineering at QMUL (2012), he joined City, University of London as University Research Fellow (2013-14). His research interests include signal processing and machine learning methods for audio analysis, as well as applications of these methods to music information retrieval and environmental sound analysis. He has authored or co-authored over 90 papers in these fields. He has been primary investigator and co-investigator for several audio-related research projects funded by the EPSRC, AHRC, RAEng, European Commission, SFI, and industry. He co-organized the 2013 and 2016 IEEE Challenges on Detection and Classification of Acoustic Scenes and Events (DCASE 2013 and 2016) and is Associate Editor for the EURASIP Journal on Audio, Speech, and Music Processing. Website: http://www.eecs.qmul.ac.uk/~emmanouilb/