Regularization at Ease for Deep Learning Applications

COM1 Level 3

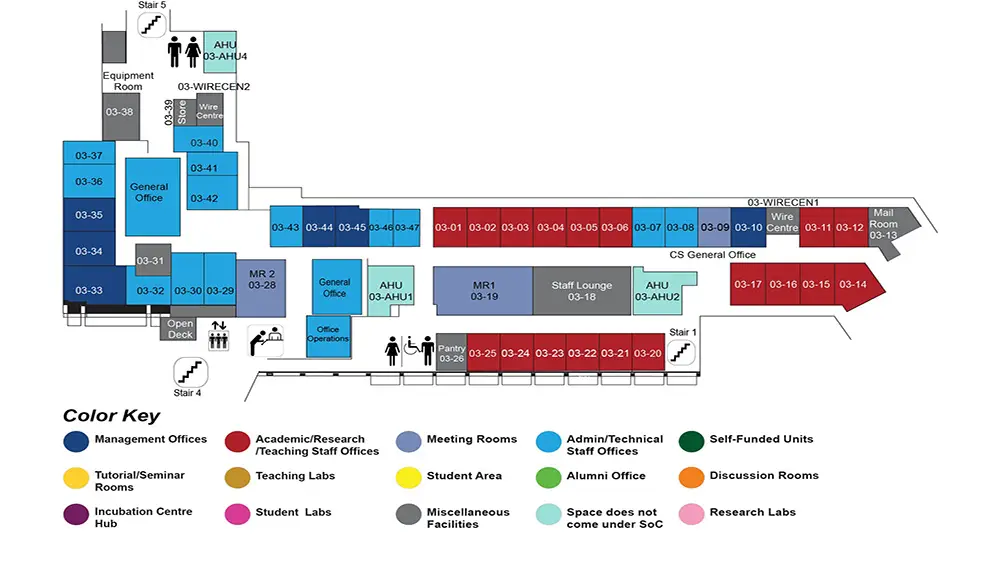

MR1, COM1-03-19

Abstract:

Deep learning and machine learning models have recently been shown to be effective in many real-world applications. While these models achieve increasingly better predictive performance, their structures have also become much more complex. A common and difficult problem for complex models is overfitting. Regularization penalizes the complexity of a model in order to avoid overfitting. However, in most learning frameworks the regularization function is controlled by hyperparameters that must be set by hand, so the best setting is difficult to find.
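As a point of reference, a regularized training objective is commonly written in the form below. The notation is illustrative and not taken from the proposal: $\theta$ denotes the model parameters, $\ell$ the per-example loss, $R$ the regularization function (here an L2 penalty as one familiar choice), and $\lambda$ the hyperparameter that is usually tuned by hand.

```latex
\min_{\theta} \; \frac{1}{N} \sum_{i=1}^{N} \ell\bigl(f(x_i; \theta),\, y_i\bigr) \;+\; \lambda\, R(\theta),
\qquad \text{e.g. } R(\theta) = \lVert \theta \rVert_2^2 .
```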

In this proposal, we focus on regularization for deep learning models. Firstly, we propose an adaptive regularization method that learns the regularization function from the model parameters during training, rather than fixing it in advance. Secondly, we propose a knowledge-driven regularization method that incorporates external knowledge learned from external corpora via Latent Dirichlet Allocation (LDA). For both the adaptive and knowledge-driven regularization methods, we develop an effective update algorithm that integrates Expectation Maximization (EM) with Stochastic Gradient Descent (SGD). To improve efficiency, we design a lazy update algorithm and a sparse update algorithm that reduce the computational cost. We validate the effectiveness of our regularization methods through an extensive experimental study over standard benchmark datasets and different kinds of deep learning and machine learning models. The results show that the proposed adaptive and knowledge-driven regularization methods achieve significant improvements over their respective baseline methods.
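To make the idea of interleaving EM with SGD more concrete, here is a minimal sketch under stated assumptions; it is not the algorithm from the proposal. It assumes the regularizer is the negative log of a zero-mean Gaussian mixture prior over the weights of a linear model, and it models the "lazy update" as running an EM step only every `em_interval` SGD steps. All function names, the clipping bounds, and the choice of prior are hypothetical.

```python
import numpy as np

def e_step(w, pi, lam):
    """Responsibility r[i, k] of zero-mean Gaussian component k for weight w[i]."""
    # log pi_k + log N(w_i | 0, 1/lam_k), dropping the constant shared by all k
    log_p = np.log(pi) + 0.5 * np.log(lam) - 0.5 * lam * w[:, None] ** 2
    log_p -= log_p.max(axis=1, keepdims=True)  # for numerical stability
    r = np.exp(log_p)
    return r / r.sum(axis=1, keepdims=True)

def m_step(w, r, eps=1e-8, lam_min=1e-3, lam_max=1e3):
    """Re-estimate mixing weights and precisions from the responsibilities."""
    nk = r.sum(axis=0) + eps
    pi = nk / nk.sum()
    lam = nk / ((r * w[:, None] ** 2).sum(axis=0) + eps)
    # Assumption: clip precisions so the penalty cannot destabilize SGD.
    return pi, np.clip(lam, lam_min, lam_max)

def reg_grad(w, r, lam):
    """Gradient of the EM surrogate penalty sum_k r_ik * lam_k / 2 * w_i^2."""
    return (r * lam).sum(axis=1) * w

def train(X, y, n_components=2, lr=0.01, steps=2000,
          em_interval=50, batch=32, seed=0):
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w = rng.normal(scale=0.1, size=d)
    pi = np.full(n_components, 1.0 / n_components)
    lam = np.logspace(0, 2, n_components)  # distinct initial precisions
    r = e_step(w, pi, lam)
    for t in range(steps):
        idx = rng.integers(0, n, size=batch)
        err = X[idx] @ w - y[idx]
        # Mini-batch squared-error gradient plus the prior term scaled by 1/n.
        grad = X[idx].T @ err / batch + reg_grad(w, r, lam) / n
        w -= lr * grad
        if (t + 1) % em_interval == 0:  # lazy update: EM only occasionally
            r = e_step(w, pi, lam)
            pi, lam = m_step(w, r)
    return w, pi, lam
```

In this sketch the penalty strength applied to each weight is `(r * lam).sum(axis=1)`, which is re-estimated from the weights themselves instead of being a fixed hyperparameter. A larger `em_interval` trades fresher mixture estimates for fewer EM computations.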

Additionally, since both the adaptive and knowledge-driven regularization methods are flexible and apply to many kinds of models, we integrate them into GEMINI, a large end-to-end healthcare data analytics software stack. Finally, in a third piece of work, we extend knowledge-driven regularization to the hidden layers of deep learning models by borrowing the idea of residual networks.