A STUDY OF ILLUMINANT ESTIMATION AND GROUND TRUTH COLORS FOR COLOR CONSTANCY

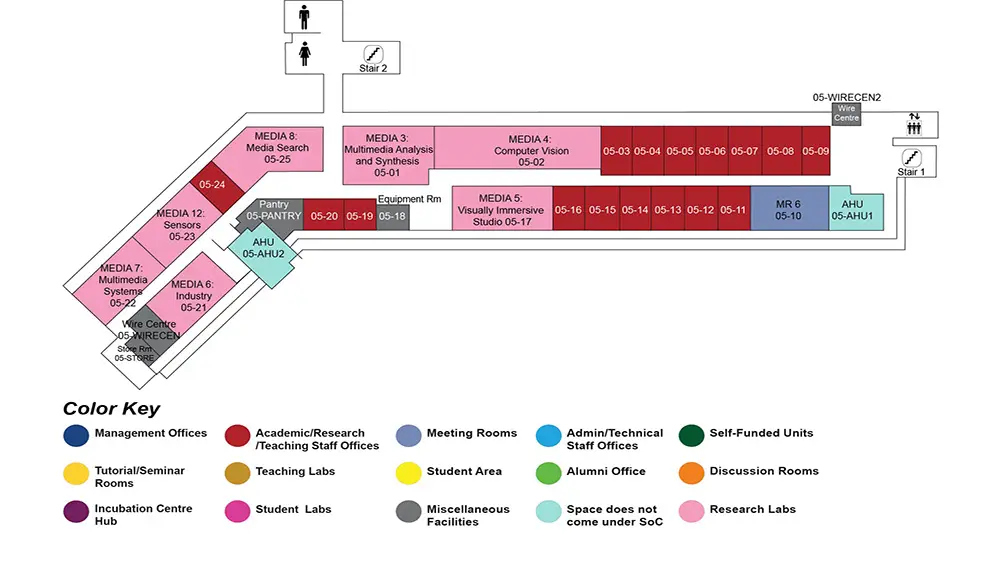

AS6 Level 5

MR6, AS6-05-10

ABSTRACT:

This thesis examines methods for illumination estimation and color correction for color constancy. It first gives an overview of the problem of computational color constancy by describing the mathematical models used in prior work. We then discuss the metrics commonly used to evaluate color constancy algorithms and the datasets currently available.
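The abstract does not name the evaluation metrics. As a minimal sketch, assuming the widely used recovery angular error (the angle between the estimated and ground-truth illuminant RGB vectors), the per-image error and the mean/median summaries typically reported over a dataset can be computed as follows. The function names are hypothetical.

```python
import math


def angular_error_deg(est, gt):
    """Angle in degrees between an estimated and a ground-truth illuminant RGB.

    Only the direction (chromaticity) matters: scaling either vector by a
    positive constant leaves the error unchanged.
    """
    dot = sum(e * g for e, g in zip(est, gt))
    norm_e = math.sqrt(sum(e * e for e in est))
    norm_g = math.sqrt(sum(g * g for g in gt))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / (norm_e * norm_g)))
    return math.degrees(math.acos(cos_theta))


def summarize(errors):
    """Return (mean, median) of a list of per-image angular errors."""
    s = sorted(errors)
    n = len(s)
    median = s[n // 2] if n % 2 else 0.5 * (s[n // 2 - 1] + s[n // 2])
    return sum(s) / n, median
```

Because the metric ignores vector length, an estimator only needs to recover the illuminant's chromaticity, not its absolute brightness.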

This is followed by a description of two methods we developed for illuminant estimation. The first is a statistics-based method that estimates the illumination from an image's color distribution. This work begins by questioning the role of the spatial image statistics commonly used in color constancy methods and their relation to the color distribution. Specifically, we show that spatial information (e.g., image gradients) serves as a proxy for information about the shape of the color distribution. Based on this finding, we propose a method that derives similar results directly from the color distribution, without computing any spatial information. This method performs on par with or better than more complex learning-based methods. This finding led us to a second illumination estimation method: a learning-based method that relies on simple color distribution features. To the best of our knowledge, the proposed learning framework produces the best illuminant estimation results to date while offering computational efficiency similar to that of statistics-based methods.
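To illustrate the distinction between a statistic computed purely from the color distribution and one computed from spatial information, the sketch below implements two classic baselines, Gray World (mean RGB) and a simplified Gray Edge (Minkowski p-norm of per-channel gradient magnitudes). These are well-known estimators chosen only for illustration; they are not the methods proposed in this thesis. The Gray Edge variant here uses plain forward differences with no Gaussian pre-smoothing, which is an assumed simplification, and images are assumed to be lists of rows of linear (R, G, B) tuples.

```python
import math


def _normalize(v):
    """Scale a 3-vector to unit L2 length (only its direction is meaningful)."""
    n = math.sqrt(sum(x * x for x in v))
    return tuple(x / n for x in v)


def gray_world(img):
    """Color-distribution statistic: the mean RGB over all pixels."""
    pixels = [p for row in img for p in row]
    return _normalize([sum(p[c] for p in pixels) / len(pixels) for c in range(3)])


def gray_edge(img, p=1):
    """Spatial statistic: Minkowski p-norm of per-channel gradient magnitudes.

    Gradients are forward differences evaluated on the pixels that have both a
    right and a lower neighbour; no Gaussian smoothing is applied.
    """
    h, w = len(img), len(img[0])
    acc = [0.0, 0.0, 0.0]
    for y in range(h - 1):
        for x in range(w - 1):
            for c in range(3):
                dx = img[y][x + 1][c] - img[y][x][c]
                dy = img[y + 1][x][c] - img[y][x][c]
                acc[c] += math.hypot(dx, dy) ** p
    return _normalize([a ** (1.0 / p) for a in acc])
```

For a scene of purely achromatic surfaces, every pixel is a scalar multiple of the illuminant, so both estimators return the normalized illuminant exactly; they differ only on real scenes, where the spatial statistic weights pixels by local contrast rather than uniformly.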

The last part of this thesis examines the color correction of an image after the illumination has been estimated. Most methods rely on a diagonal 3×3 correction model, often attributed to the von Kries model of human color constancy. This diagonal model can only ensure that the neutral colors in an image are corrected, thus allowing "white-balancing" but not true color correction. The fundamental obstacle to full color correction is the inability to establish ground truth colors in camera-specific color spaces against which corrected images can be evaluated. We describe how to overcome this limitation by obtaining ground truth colors from ColorChecker charts, which allows images in existing datasets to be re-purposed for this evaluation, and show how to modify existing algorithms to achieve better color correction.
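To make the contrast between the two correction models concrete, here is a minimal pure-Python sketch. `diagonal_correct` applies per-channel gains derived from an estimated illuminant (normalizing to the green channel, a common convention assumed here), while `fit_full_matrix` fits an unconstrained 3×3 matrix by ordinary least squares from observed chart patch values to target colors. The helper names, the least-squares formulation, and the inputs are assumptions for illustration, not the procedure used in the thesis.

```python
def diagonal_correct(rgb, illum):
    """von Kries-style diagonal correction: per-channel gains that map the
    estimated illuminant to a neutral color (green channel kept fixed)."""
    gains = [illum[1] / illum[c] for c in range(3)]
    return tuple(v * g for v, g in zip(rgb, gains))


def _det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a, b):
    """Solve the 3x3 linear system a @ x = b with Cramer's rule."""
    d = _det3(a)
    x = []
    for i in range(3):
        ai = [row[:] for row in a]
        for r in range(3):
            ai[r][i] = b[r]  # replace column i with the right-hand side
        x.append(_det3(ai) / d)
    return x


def fit_full_matrix(observed, target):
    """Least-squares 3x3 matrix M such that M @ observed[k] ~= target[k].

    observed, target: lists of (R, G, B) patch values (hypothetical inputs,
    e.g. chart patches in a camera-specific color space).
    """
    # Normal equations (A^T A) m_c = A^T b_c, one per output channel c.
    ata = [[sum(o[i] * o[j] for o in observed) for j in range(3)] for i in range(3)]
    rows = []
    for c in range(3):
        atb = [sum(o[i] * t[c] for o, t in zip(observed, target)) for i in range(3)]
        rows.append(_solve3(ata, atb))
    return rows


def apply_matrix(m, rgb):
    """Multiply a 3x3 matrix (list of rows) by an RGB triple."""
    return tuple(sum(m[r][k] * rgb[k] for k in range(3)) for r in range(3))
```

The diagonal model has three parameters fixed entirely by the single illuminant estimate, so it can only guarantee that neutral surfaces come out neutral. The full matrix has nine parameters fitted to many patches at once, which lets it also account for cross-channel mixing that affects non-neutral colors.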