Cache Management Algorithms: Single And Networked Caches

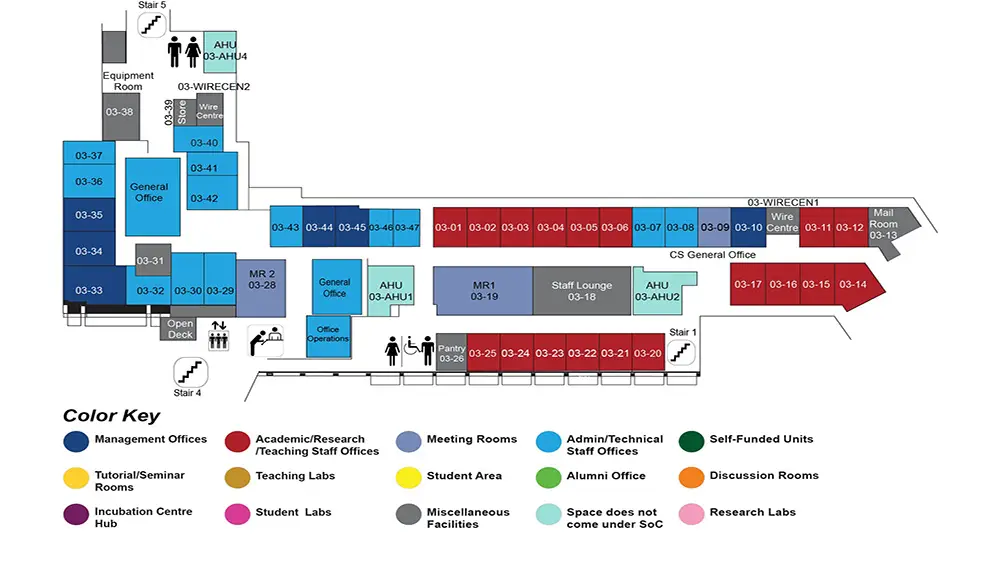

COM1 Level 3

MR1, COM1-03-19

Abstract:

The majority of computing systems such as database management systems, operating systems, web proxies and possibly future routers use a fast memory, cache, to accelerate their data retrieval processes and consequently reduce the completion time of their tasks. For several decades, many works have been conducted with the goal of increasing the cache hit ratio. Due to the advantages of using cache, the network of caches is used many years ago in hierarchical and distributed caching, later in en-route caching and recently as the common part of all Information Centric Networking (ICN) proposals for the future Internet architecture. In the network of caches, obtaining high overall hit ratio, more important than only having a high hit ratio in a single cache, is reduced by the filtering effect. The filtering effect happens where a cache is managed in correlation with other caches inside a network of caches. The correlation among caches is due to communicating among caches with the objective of serving the requests. A cache filters the requests that generate cache-hits and passes the requests that generate cache-misses. These filtering and passing change the pattern of requests such that another cache is hardly able to obtain a high hit ratio from the missed requests. Therefore, a networked cache management policy should address the filtering effect problem in addition to obtaining a high hit ratio. Moreover, a cache management policy which is able able to obtain high hit ratio and address the filter effect, cannot obtain high overall high hit ratio without coordination due to redundancy. Moreover, the coordination schemes with high communication or processing overhead are not applicable for many applications such as ICN network.

The objective of this thesis is to propose the light weight coordination schemes for ICN network to obtain high overall hit ratios by addressing the filtering problem and managing the redundancy. We use two different approaches to reach our goal. In the first approach, we design a cache management policy to address the filtering effect problem. Then, we improve the performance of our policy in terms of hit ratio. Finally, we introduce our coordinated scheme integrated with our improved policy. In the second approach, we design a simple caching algorithm to obtain high hit ratio for a single cache. Then, we tackle the filtering effect problem through coordinating among caches and combining the finding of the first approach.

The first approach starts by proposing a new cache management algorithm called two-state policy to address the filter effect problem. The two-state policy manages a cache such that: i) the cache obtains a high hit ratio and, ii) the missed requests from the cache can be used by other caches to obtain a high hit ratio. From a network of caches perspective, the two-state policy provides the opportunity of obtaining the high hit ratios for other caches by introducing a new type of filtering. From a single cache perspective, we prove that the two-state policy obtains a hit ratio same as LRU under Independent Reference Model (IRM) assumption. In addition, we improve the adaptation property of two-state policy for the network with large RTTs through a reservation mechanism. However, the two-state policy still suffers from one-timer contents (pollution). To solve this pollution, we generalize the two-state policy to an n-state policy. In addition, the n-state obtains hit ratio higher than two-state while the n-state inherits the advantages of two-state such as solving the filter effect problem.

We complete our first approach by proposing a coordinated protocol which is integrated with n-state policy. We present two versions of our scheme: coordinated two-state with reservation (CO2S) and coordinated three-state with reservation (CO3S). CO2S and CO3S use the advantages of two-state (solving filtering effect and thrashing) and three-state (solving filtering effect, thrashing and pollution) and also manage the redundancy. Consequently, our schemes obtain high overall hit ratio by achieving high hit ratio at both edge and core routers. This leads to bringing the popular content close to consumers, decreasing the content download time and decreasing the redundant packet transmissions in the network. Moreover, our schemes decrease the eviction rate in caches which may lead to reducing the energy consumption. CO3S obtains higher overall hit ratio compared to CO2S. On the other hand, CO2S obtains overall hit ratio comparable with other schemes but decreases the eviction rate up to four orders of magnitude. The implementation of our schemes is simple and done through piggybacking information in extra integer fields of requests and content packets.

Our second approach starts by introducing CAP, a cache management policy which addresses all of the caching problems for a single cache and is the base a new coordinated scheme called COCAP. CAP introduces a class of replacement policies by dividing the cache into two variable sized segments managed independently. Among all of the combinations of the applicable replacement policies for the two segments, the combination of Random policy for missed content and no-operation for hit content solves all of the caching problems for a single cache. In addition, our trace based simulations show that CAP obtains the hit ratio pretty close to the state of the art for a single cache. Moreover, the time complexity of CAP is constant and it does not impose memory overhead.

Our second approach gets completed by proposing a coordinated scheme based on CAP, COCAP, for a network of caches. COCAP solves thrashing and pollution problems using CAP and tackles the filter effect through coordination. COCAP coordination has two characteristics: i) using freezing/updating idea from two-state ii) managing the network of caches as a virtual cache. The virtual cache size enables COCAP to adaptively manage network of caches based on the ratio of cache size to the total number of content. Using the idea of virtual cache, COCAP manages the redundancy by bringing the popular contents close to consumers.

To summarize, we proposed two coordinated caching schemes: CO3S and COCAP. When the ratio of cache size to the total number of content (cache ratio) is very small (the case in ICN), both COCAP and CO3S perform well by obtaining high hit ratio in both edge and core routers, decreasing the content download time and reducing the redundant packet transmissions in the network. Moreover, COCAP outperforms the CO3S by increasing the cache ratio due to the COCAP flexibility to cache ratio achieved by virtual cache size.